Image Processing Algorithms

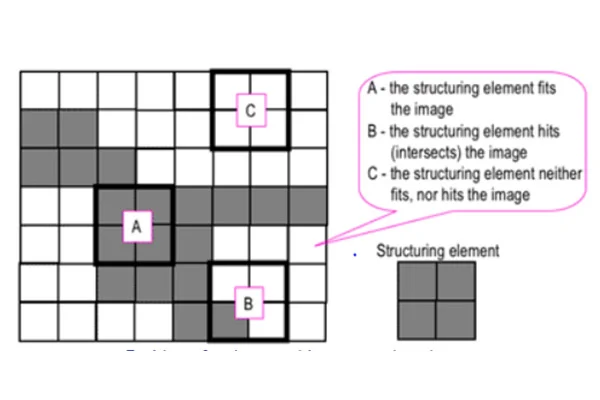

Image processing is the process of enhancing images and extracting useful information from them. Images are treated as two-dimensional signals, and the inputs to this process are photographs or video frames. The input is an image, and the output may be an improved image or characteristics/features associated with it.

There are many ways to process an image, but they often follow a similar pattern. First, the red, green, and blue intensities of each pixel are extracted. A new pixel is created from these intensities and inserted into a new, empty image at the same location as the original. For example, a grayscale pixel can be created by averaging the red, green, and blue intensities of the original pixel. Afterward, the grayscale pixels can be converted to black or white by using a threshold.

Image processing algorithms play the most significant role in digital image processing. Developers use and implement many image processing algorithms to solve various tasks, including digital image detection, image analysis, image reconstruction, image restoration, image enhancement, image data compression, spectral image estimation, and image estimation. Sometimes the algorithms come straight out of a textbook; other times they are customized combinations of several algorithmic functions. In the case of full image capture, image processing algorithms are generally classified into:

Types of Image Processing Algorithms

There are different types of image processing algorithms. The techniques used to process images fall into two groups: image generation and image analysis. The basic idea is to convert an image from its original form into a digital image with a uniform layout.
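The grayscale-and-threshold pattern described at the start of this article can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: the image is a list of rows of `(r, g, b)` tuples with channels from 0 to 255, and the function names and the default threshold of 128 are hypothetical choices.

```python
# Minimal sketch: average-based grayscale conversion, then a fixed threshold.
# Assumptions (not from the article): pixels are (r, g, b) tuples with 0-255
# channels, and the default threshold of 128 is an arbitrary choice.

Pixel = tuple[int, int, int]


def to_grayscale(image: list[list[Pixel]]) -> list[list[int]]:
    """Return a new image where each pixel is the mean (rounded down) of its R, G and B values."""
    return [[(r + g + b) // 3 for (r, g, b) in row] for row in image]


def to_black_and_white(gray: list[list[int]], threshold: int = 128) -> list[list[int]]:
    """Map each gray value to 255 (white) if it reaches the threshold, else 0 (black)."""
    return [[255 if value >= threshold else 0 for value in row] for row in gray]


if __name__ == "__main__":
    rgb = [
        [(255, 255, 255), (10, 20, 30)],
        [(200, 100, 0), (90, 120, 150)],
    ]
    gray = to_grayscale(rgb)
    print(gray)                      # [[255, 20], [100, 120]]
    print(to_black_and_white(gray))  # [[255, 0], [0, 0]]
```

The plain average weights the three channels equally, matching the description above; some libraries instead use luminance weights that favour green.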
Some of the conventional image processing algorithms are as follows:

- Contrast enhancement algorithm
- Dithering and half-toning algorithm: dithering and half-toning include the following:
- Elser difference-map algorithm: a search algorithm used for general constraint satisfaction problems. It was initially used for X-ray diffraction microscopy.
- Feature detection algorithm: feature detection algorithms include:
- Blind deconvolution algorithm: like the Richardson–Lucy deconvolution algorithm, it is an image de-blurring algorithm, but it is used when the point spread function is unknown.
- Seam carving algorithm: a content-aware image resizing algorithm.
- Segmentation algorithm: partitions a digital image into two or more regions. It includes:

Apart from the algorithms mentioned above, industries also create customized algorithms to address their needs. These can be written from scratch or built from a combination of various algorithmic functions. With the evolution of computer technology, image processing algorithms have given researchers and developers ample opportunity to investigate, classify, characterize, and analyze large collections of images.
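Seam carving, listed above as content-aware resizing, can be sketched in pure Python. The sketch assumes a grayscale image stored as a list of rows of integers and uses a simple gradient-based energy, which is only one of several possible choices; the function names are hypothetical. Each iteration finds the connected top-to-bottom path with the lowest total energy by dynamic programming and deletes it, so flat, low-detail areas disappear before edges do.

```python
# Minimal seam-carving sketch for a grayscale image stored as a list of rows.
# Assumptions (not from the article): energy is the sum of absolute horizontal
# and vertical intensity differences, and only vertical seams are removed.

Image = list[list[int]]


def energy(img: Image) -> Image:
    """Gradient-based energy; at the borders the nearest pixel is reused."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx = img[y][min(x + 1, w - 1)] - img[y][max(x - 1, 0)]
            dy = img[min(y + 1, h - 1)][x] - img[max(y - 1, 0)][x]
            out[y][x] = abs(dx) + abs(dy)
    return out


def find_vertical_seam(e: Image) -> list[int]:
    """Dynamic programming: cheapest top-to-bottom path shifting at most one column per row."""
    h, w = len(e), len(e[0])
    cost = [row[:] for row in e]
    parent = [[0] * w for _ in range(h)]
    for y in range(1, h):
        prev = cost[y - 1]
        for x in range(w):
            # Choose the cheapest of the (up to three) columns above this pixel.
            best = min(range(max(x - 1, 0), min(x + 2, w)), key=prev.__getitem__)
            parent[y][x] = best
            cost[y][x] += prev[best]
    # Trace back from the cheapest pixel in the bottom row.
    x = min(range(w), key=cost[h - 1].__getitem__)
    seam = [0] * h
    for y in range(h - 1, -1, -1):
        seam[y] = x
        x = parent[y][x]
    return seam


def remove_vertical_seam(img: Image, seam: list[int]) -> Image:
    """Delete one pixel per row, at the column given by the seam."""
    return [row[:x] + row[x + 1:] for row, x in zip(img, seam)]


def carve_width(img: Image, n: int) -> Image:
    """Shrink the image by n columns, recomputing the energy after each removed seam."""
    for _ in range(n):
        img = remove_vertical_seam(img, find_vertical_seam(energy(img)))
    return img


if __name__ == "__main__":
    img = [[0, 0, 9, 9] for _ in range(3)]
    print(find_vertical_seam(energy(img)))  # [0, 0, 0]
    print(carve_width(img, 2))              # [[0, 9], [0, 9], [0, 9]]
```

In the example, flat columns are removed first and the 0-to-9 edge survives, which is the content-aware behaviour the algorithm is named for.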

What is Digital Image Processing

Previously, we learned what visual inspection is and how it helps in inspection checks and quality assurance of manufactured products. Vision-based inspection relies on a specific technology called Digital Image Processing. Before getting into what it is, we need to understand the essential term image processing.

Image processing is a technique for carrying out a particular set of operations on an image to obtain an enhanced image or extract some valuable information. It is a type of signal processing where the input is an image, and the output may be an improved image or characteristics/features associated with it. The inputs to this process are photographs or video frames, and these images are treated as two-dimensional signals.

Image processing involves three steps:

- Image acquisition: Acquisition can be done with image-capturing tools such as an optical scanner or a digital camera.
- Image enhancement: Once the image is acquired, it must be processed. Image enhancement includes operations such as cropping, restoring, and removing glare or other elements. For example, image enhancement reduces signal distortion and clarifies fuzzy or poor-quality images.
- Image extraction: Extraction involves isolating individual image components, producing a result where the output can be an altered image. This step is necessary when an image contains a specific shape that requires a description or representation. The image is partitioned into separate areas, which are labeled with relevant information. A report can also be generated from the image analysis.

The basic principle of image processing is that an image is made up of pixels, each recording the intensity of light (electromagnetic radiation) at one location. Combining these pixels produces a single image, and the pixels represent different regions of that image.
This pixel information helps the computer detect objects and determine the appropriate resolution. One application of image processing is video processing: because videos are composed of a sequence of separate images, motion detection is a vital component of video processing. Image processing is essential in many fields, from photography to satellite imagery. It improves subjective image quality and aims to make subsequent image recognition and analysis easier. Depending on the application, image processing can change image resolutions and aspect ratios and remove artifacts from a picture. Over the years, image processing has become one of the most rapidly growing technologies in engineering and computer science.

Types of Image Processing

Image processing includes two types of methods:

- Analogue image processing: Generally, analogue image processing is used for hard copies such as photographs and printouts. Image analysts apply various facets of interpretation when using these visual techniques.
- Digital image processing: Digital image processing methods help in manipulating and analyzing digital images. In addition to improving and encoding images, digital image processing allows users to extract useful information and save it in various formats.

This article primarily discusses digital image processing techniques and their phases.

Digital Image Processing and how it operates

Digital image processing requires computers to convert images into digital form through analog-to-digital conversion and then process them. It subjects numerical representations of images to a series of operations to obtain the desired result. This may include image compression, digital enhancement, or automated classification of targets. Digital images are made up of pixels, which hold discrete numeric representations of intensity and are addressed by their spatial coordinates.
To be used, digital images must be stored in a format compatible with digital computers. The primary advantages of digital image processing methods lie in their versatility, repeatability, and the preservation of original data. Unlike a traditional analog photograph, a digital color image stores a separate color value for every pixel. The computer can distinguish between colors by looking at their hue, saturation, and brightness. It can then simplify that data using a process called grayscaling. In a nutshell, grayscaling turns each RGB pixel into a single value. As a result, the amount of data per pixel decreases, and the image becomes smaller and easier to process.

Cost targets often limit the technology used to process digital images. Thus, engineers must develop excellent and efficient algorithms while minimizing the resources consumed. All digital image processing applications begin with illumination, and it is crucial to understand that if the lighting is poor, the software will not be able to recover the lost information. That's why it is best to involve a professional in these applications. A good assembly language programmer should be able to handle high-performance digital image processing applications.

Images are captured as two-dimensional data, which a digital image processing system then analyzes using different algorithms to generate output images. There are four basic steps in digital image processing. The first step is image acquisition, and the second step is enhancing and restoring the image. The final step is to transform the image into a color image. Once this process is complete, the image is converted into a digital file.

Thresholding is a widely used image segmentation process. It is often used to separate a foreground object from the background. To do this, a threshold value is chosen so that the object's pixels lie above it and the background pixels lie below it (or vice versa).
The threshold value is usually fixed, but in many cases it is best computed from image statistics and neighbourhood operations. Thresholding produces a binary image containing only black and white, with no shades of gray in between.

Digital image processing involves different methods, which are as follows:

- Image Editing: changing or altering digital images using graphics software tools.
- Image Restoration: processing a corrupted image to recover a clean version of the original and regain the lost information.
- Independent Component Analysis: computationally separating a signal into additive subcomponents.
- Anisotropic Diffusion: reducing image noise without removing essential portions of the image, such as edges.
- Linear Filtering: processing time-varying input signals to generate output signals that are linear functions of the input.
- Neural Networks: Neural networks
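The thresholding discussion above notes that a threshold can be computed from image statistics instead of being fixed. One well-known way to do this, chosen here as an example rather than as the article's own method, is Otsu's method: it tries every candidate threshold and keeps the one that maximizes the between-class variance of the resulting background and foreground. The sketch assumes an 8-bit grayscale image stored as a list of rows; the function names are hypothetical.

```python
# Minimal sketch of Otsu's method for choosing a global threshold.
# Assumptions: 8-bit grayscale values (0-255) stored as a list of rows.

Image = list[list[int]]


def otsu_threshold(gray: Image, levels: int = 256) -> int:
    """Return t maximizing between-class variance; pixels <= t form the background class."""
    hist = [0] * levels
    for row in gray:
        for value in row:
            hist[value] += 1
    total = sum(hist)
    weighted_total = sum(level * count for level, count in enumerate(hist))

    w_back = 0    # number of pixels with value <= t
    sum_back = 0  # sum of those pixel values
    best_t, best_score = 0, -1.0
    for t in range(levels):
        w_back += hist[t]
        sum_back += t * hist[t]
        w_fore = total - w_back
        if w_back == 0 or w_fore == 0:
            continue  # one class is empty, so this split separates nothing
        mean_back = sum_back / w_back
        mean_fore = (weighted_total - sum_back) / w_fore
        # Proportional to the between-class variance (the 1/total**2 factor is dropped).
        score = w_back * w_fore * (mean_back - mean_fore) ** 2
        if score > best_score:
            best_t, best_score = t, score
    return best_t


def binarize(gray: Image, t: int) -> Image:
    """Pixels above t become white (255); all others become black (0)."""
    return [[255 if value > t else 0 for value in row] for row in gray]


if __name__ == "__main__":
    gray = [[10, 10, 10, 200], [10, 200, 200, 200]]
    t = otsu_threshold(gray)
    print(t)                  # 10
    print(binarize(gray, t))  # [[0, 0, 0, 255], [0, 255, 255, 255]]
```

For a cleanly bimodal image every threshold between the two modes scores the same, and this version keeps the first one it finds.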

Image Processing Algorithms based on usage

There are many ways to process an image, but they often follow a similar pattern. First, the red, green, and blue intensities of each pixel are extracted. A new pixel is created from these intensities and inserted into a new, empty image at the same location as the original. For example, a grayscale pixel can be created by averaging the red, green, and blue intensities of the original pixel. Afterward, the grayscale pixels can be converted to black or white using a threshold.

Edge Detection

The first thing to note about Canny edge detectors is that they are not substitutes for the human eye. The Canny operator is used to detect edges in many image processing pipelines. In the configuration discussed here, the detector uses a threshold value of 80. Its original version performs double thresholding and edge tracking by hysteresis. During double thresholding, edge pixels are classified as strong or weak: strong edges have a gradient value higher than the high threshold, while weak edges fall between the two thresholds. The next phase searches for all connected components and keeps a weak edge only if its component contains at least one strong edge pixel.

Other work has improved the Canny edge detector's architecture and computational efficiency. The distributed Canny edge detector algorithm proposes a block-adaptive threshold selection procedure that exploits local image characteristics. The resulting implementation runs faster than the CPU implementation, and the algorithm is more robust to changes in block size, which allows it to support images of any size. An implementation of the distributed Canny edge detector has also been developed for FPGA-based systems.

Object localization

The performance of image processing algorithms for object localization depends on the accuracy of recognition. On the same dataset used for the HOG and SIFT methods, region-based algorithms improve detection accuracy more than twofold. Region-based algorithms use a reference marker to improve matching and edge detection.
These algorithms use accurate coordinates in the image sequence to fine-tune the localization process, and a geometry-based recognition method eliminates false targets, improves precision, and provides robustness. An established ground test platform has improved object localization: it can detect an object with one-tenth-pixel precision, and this embedded platform can process an image sequence at 70 frames per second. This work was conducted to make vision-based systems more applicable in dynamic environments. However, subpixel edge detection is quite time-consuming and should only be used for fine operations.

Among popular object detection methods, the Histogram of Oriented Gradients (HOG) was one of the first to be developed. HOG is recommended as the first method to try in general environments, but it is time-consuming and ineffective in tight spaces. It nonetheless achieves decent accuracy for pedestrian detection, the task it was originally designed for, and it is also suitable for object detection in video games.

YOLO is a popular object detection algorithm and model. It was first introduced in 2016, followed by versions v2 and v3. After v3 in 2018, no new versions were released until 2020, when three came in quick succession: YOLOv4, YOLOv5, and PP-YOLO. These versions can identify objects without using pre-processed images, and their speed makes them popular.

Segmentation

There are various image processing algorithms for segmentation. These algorithms use the features of the input image to divide it into regions, where a region is a group of pixels with similar properties. Usually, these algorithms start the segmentation process from a seed point, which may be a small area of the image or a larger region. Starting from the seed, the algorithm adds or removes surrounding pixels until the region meets other regions.
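The seed-and-grow procedure described above can be sketched as a breadth-first search. This is a minimal illustration under stated assumptions: the function names are hypothetical, growth uses 8-connected neighbours, a pixel joins the region if its intensity is within a tolerance of the seed's intensity (one of several possible similarity rules), and only the adding half of the procedure is shown. The same routine also reproduces the hysteresis step of the Canny detector from the Edge Detection section: strong edge pixels act as seeds, and weak pixels are kept only if they connect to one.

```python
# Minimal sketch: seeded region growing with a pluggable acceptance test.
# Assumptions: images are lists of rows, growth uses 8-connectivity, and the
# function names are hypothetical. Only pixel addition is shown.

from collections import deque
from typing import Callable

Mask = list[list[bool]]


def region_grow(height: int, width: int, seeds: list[tuple[int, int]],
                accept: Callable[[int, int], bool]) -> Mask:
    """Breadth-first growth from seeds into 8-connected neighbours that pass `accept`."""
    region = [[False] * width for _ in range(height)]
    queue = deque()
    for y, x in seeds:
        if not region[y][x]:
            region[y][x] = True
            queue.append((y, x))
    while queue:
        y, x = queue.popleft()
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                ny, nx = y + dy, x + dx
                if (0 <= ny < height and 0 <= nx < width
                        and not region[ny][nx] and accept(ny, nx)):
                    region[ny][nx] = True
                    queue.append((ny, nx))
    return region


def grow_by_intensity(img: list[list[int]], seed: tuple[int, int], tol: int) -> Mask:
    """Region growing: admit pixels whose intensity is within tol of the seed's intensity."""
    ref = img[seed[0]][seed[1]]
    return region_grow(len(img), len(img[0]), [seed],
                       lambda y, x: abs(img[y][x] - ref) <= tol)


def hysteresis(mag: list[list[int]], low: int, high: int) -> Mask:
    """Canny-style edge tracking: strong pixels (> high) seed growth through weak ones (> low)."""
    strong = [(y, x) for y, row in enumerate(mag) for x, v in enumerate(row) if v > high]
    return region_grow(len(mag), len(mag[0]), strong, lambda y, x: mag[y][x] > low)


if __name__ == "__main__":
    img = [[10, 12, 80], [11, 13, 82], [90, 85, 84]]
    print(grow_by_intensity(img, (0, 0), tol=5))
    # [[True, True, False], [True, True, False], [False, False, False]]
    mag = [[0, 50, 0, 0, 0], [0, 50, 0, 0, 60], [0, 95, 0, 0, 0]]
    print(hysteresis(mag, low=40, high=80))
    # only column 1 is marked as edge in every row
```

In the hysteresis example, the weak pixel at row 1, column 4 is discarded because no chain of weak pixels links it to the strong pixel at row 2, column 1.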
Discontinuities in local features are used to detect edges, which define the boundaries of objects. Such methods work well when the image has few edges and good contrast but are ineffective when objects are too small. Homogeneous clustering is another method, which divides pixels into clusters. It is best suited for small image datasets but may not work well if the clusters are irregular. Some methods use the histogram to segment objects. In other techniques, pixels are grouped according to common characteristics, such as color intensity or shape. These methods are not limited to color and may use gradient magnitude to classify objects. Some of these algorithms also use local minima as segmentation boundaries. Moreover, they rely on image preprocessing techniques, and many of them use parallel edge detection. There are three main image segmentation algorithms: spatial domain differential operator, affine transform, and inverse convolution.

A popular approach to image segmentation is edge-based. It focuses on the edges of the objects in an image, making it easier to find features of these objects. Since edges carry a large amount of information, this technique reduces the amount of data to analyze, making the image easier to analyze. This method also identifies edges with greater accuracy. The results of both of these methods are highly comparable, although the latter is the more complex approach.

Context navigation

Current navigation systems use multi-sensor data to improve localization accuracy. Context navigation aims to enhance the accuracy of location estimates by anticipating the degradation of sensor signals. Although context detection is seen as the future of navigation, it is not yet widely adopted in the automotive industry. While most vision-based context indicators deal with place recognition and image segmentation, only a few are dedicated to context-aware navigation.
For example, a vehicle in motion can provide information about its surroundings, such as signal quality. However, this information is not widely used in general navigation, and only a few works have focused on context-aware multi-sensor fusion. Beyond addressing these challenges, future research should identify and analyze the best algorithm for a particular situation. Detecting environmental contexts requires multi-sensor solutions: GNSS-based solutions can only detect the context of one area, and the underlying data is not reliable enough to extract every context of interest. Other data types, such as vision-based context indicators, are needed for
