Optimization Problems and Techniques

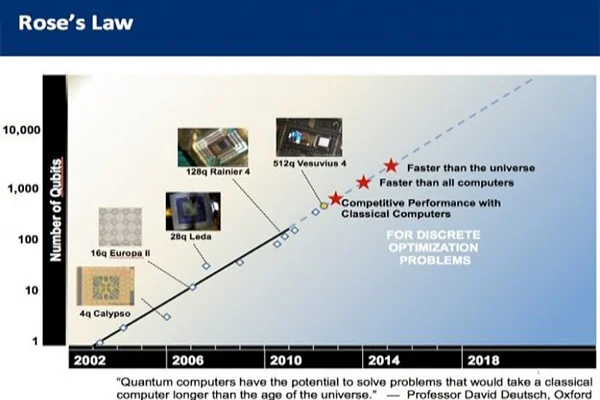

Table of content Optimization Problems Linear and Quadratic programming Types of Optimization Techniques When discussing the mathematics and computer science stream, optimization problems refer to finding the most appropriate solution out of all feasible solutions. The optimization problem can be defined as a computational situation where the objective is to find the best of all possible solutions. Using optimization to solve design problems provides unique insights into situations. The model can compare the current design to the best possible and includes information about limitations and implied costs of arbitrary rules and policy decisions. A well-designed optimization model can also aid in what-if analysis, revealing where improvements can be made or where trade-offs may need to be made. The application of optimization to engineering problems spans multiple disciplines. Optimization is divided into different categories. The first is a statistical technique, while the second is a probabilistic method. A mathematical algorithm is used to evaluate a set of data models and choose the best solution. The problem domain is specified by constraints, such as the range of possible values for a function. A function evaluation must be performed to find the optimum solution. Optimal solutions will have a minimal error, so the minimum error is zero. Optimization Problems There are different types of optimization problems. A few simple ones do not require formal optimization, such as problems with apparent answers or with no decision variables. But in most cases, a mathematical solution is necessary, and the goal is to achieve optimal results. Most problems require some form of optimization. The objective is to reduce a problem’s cost and minimize the risk. It can also be multi-objective and involve several decisions. There are three main elements to solve an optimization problem: an objective, variables, and constraints. Each variable can have different values, and the aim is to find the optimal value for each one. The purpose is the desired result or goal of the problem. Let us walk through the various optimization problem depending upon varying elements. Continuous Optimization versus Discrete Optimization Models with discrete variables are discrete optimization problems, while models with continuous variables are continuous optimization problems. Constant optimization problems are easier to solve than discrete optimization problems. A discrete optimization problem aims to look for an object such as an integer, permutation, or graph from a countable set. However, with improvements in algorithms coupled with advancements in computing technology, there has been an increase in the size and complexity of discrete optimization problems that can be solved efficiently. It is to note that Continuous optimization algorithms are essential in discrete optimization because many discrete optimization algorithms generate a series of continuous sub-problems. Unconstrained Optimization versus Constrained Optimization An essential distinction between optimization problems is when problems have constraints on the variables and problems in which there are constraints on the variables. Unconstrained optimization problems arise primarily in many practical applications and the reformulation of constrained optimization problems. Constrained optimization problems appear in applications with explicit constraints on the variables. Constrained optimization problems are further divided according to the nature of the limitations, such as linear, nonlinear, convex, and functional smoothness, such as differentiable or non-differentiable. None, One, or Many Objectives Although most optimization problems have a single objective function, there have been peculiar cases when optimization problems have either — no objective function or multiple objective functions. Multi-objective optimization problems arise in engineering, economics, and logistics streams. Often, problems with multiple objectives are reformulated as single-objective problems. Deterministic Optimization versus Stochastic Optimization Deterministic optimization is where the data for the given problem is known accurately. But sometimes, the data cannot be known precisely for various reasons. A simple measurement error can be a reason for that. Another reason is that some data describe information about the future, hence cannot be known with certainty. In optimization under uncertainty, it is called stochastic optimization when the uncertainty is incorporated into the model. Optimization problems are classified into two types: Linear Programming: In linear programming (LP) problems, the objective and all of the constraints are linear functions of the decision variables. As all linear functions are convex, solving linear programming problems is innately easier than non- linear problems. Quadratic Programming: In the quadratic programming (QP) problem, the objective is a quadratic function of the decision variables, and the constraints are all linear functions of the variables. A widely used Quadratic Programming problem is the Markowitz mean-variance portfolio optimization problem. The objective is the portfolio variance, and the linear constraints dictate a lower bound for portfolio return. Linear and Quadratic programming We all abide by optimization since it is a way of life. We all want to make the most of our available time and make it productive. Optimization finds its use from time usage to solving supply chain problems. Previously we have learned that optimization refers to finding the best possible solutions out of all feasible solutions. Optimization can be further divided into Linear programming and Quadratic programming. Let us take a walkthrough. Linear Programming Linear programming is a simple technique to find the best outcome or optimum points from complex relationships depicted through linear relationships. The actual relationships could be much more complicated, but they can be simplified into linear relationships.Linear programming is a widely used in optimization for several reasons, which can be: Quadratic Programming Quadratic programming is the method of solving a particular optimization problem, where it optimizes (minimizes or maximizes) a quadratic objective function subject to one or more linear constraints. Sometimes, quadratic programming can be referred to as nonlinear programming. The objective function in QP may carry bilinear or up to second-order polynomial terms. The constraints are usually linear and can be both equalities and inequalities. Quadratic Programming is widely used in optimization. Reasons being: Types of Optimization Techniques There are many types of mathematical and computational optimization techniques. An essential step in the optimization technique is to categorize the optimization model since the algorithms used for solving optimization problems are customized as per the nature of the problem. Integer programming, for example, is a form of mathematical programming. This technique can be traced back to Archimedes, who first described the problem of determining the composition of a herd of cattle. Advances in computational codes and theoretical research

Read MoreWhat is Digital Image Processing

Table of content Types of Image Processing Digital Image Processing and how it operates Uses of Digital Image Processing Previously we have learned what visual inspection is and how it helps in inspection checks and quality assurance of manufactured products. The task of vision-based inspection implements a specific technological aspect with the name of Digital Image Processing. Before getting into what it is, we need to understand the essential term Image Processing. Image processing is a technique to carry out a particular set of actions on an image to obtain an enhanced image or extract some valuable information. It is a sort of signal processing where the input is an image, and the output may be an improved image or characteristics/features associated with the same. The inputs to this process are either a photograph or video screenshot and these images are received as two-dimensional signals. Image processing involves three steps:Image acquisition: Acquisition can be made via image capturing tools like an optical scanner or with digital photos. Image enhancement: Once the image is acquired, it must be processed. Image enhancement includes cropping, enhancing, restoring, and removing glare or other elements. For example, image enhancement reduces signal distortion and clarifies fuzzy or poor-quality images. Image extraction: Extraction involves extracting individual image components, thus, producing a result where the output can be an altered image. The process is necessary when an image has a specific shape and requires a description or representation. The image is partitioned into separate areas and labeled with relevant information. It can also create a report based on the image analysis. Basic principles of image processing begin with the observation that electromagnetic waves are oriented in a horizontal plane. A single light pixel can be converted into a single image by combining those pixels. These pixels represent different regions of the image. This information helps the computer detect objects and determine the appropriate resolution. Some of the applications of image processing include video processing. Because videos are composed of a sequence of separate images, motion detection is a vital video processing component. Image processing is essential in many fields, from photography to satellite photographs. This technology improves subjective image quality and aims to make subsequent image recognition and analysis easier. Depending on the application, image processing can change image resolutions and aspect ratios and remove artifacts from a picture. Over the years, image processing has become one of the most rapidly growing technologies within engineering and even the computer science sector. Types of Image Processing Image processing includes the two types of methods:Analogue Image Processing: Generally, analogue image processing is used for hard copies like photographs and printouts. Image analysts use various facets of interpretation while using these visual techniques. Digital image processing: Digital image processing methods help in manipulating and analyzing digital images. In addition to improving and encoding images, digital image processing allows users to extract useful information and save them in various formats. This article primarily discusses digital image processing techniques and various phases. Digital Image Processing and how it operates Digital image processing requires computers to convert images into digital form using the digital conversion method and then process it. It is about subjecting various numerical depictions of images to a series of operations to obtain the desired result. This may include image compression, digital enhancement, or automated classification of targets. Digital images are comprised of pixels, which have discrete numeric representations of intensity. They are fed into the image processing system using spatial coordinates. They must be stored in a format compatible with digital computers to use digital images. The primary advantages of Digital Image Processing methods lie in their versatility, repeatability, and the preservation of original data. Unlike traditional analog cameras, digital cameras do not have pixels in the same color. The computer can recognize the differences between the colors by looking at their hue, saturation, and brightness. It then processes that data using a process called grayscaling. In a nutshell, grayscaling turn RGB pixels into one value. As a result, the amount of data in a pixel decreases, and the image becomes more compressed and easier to view. Cost targets often limit the technology that is used to process digital images. Thus, engineers must develop excellent and efficient algorithms while minimizing the number of resources consumed. While all digital image processing applications begin with illumination, it is crucial to understand that if the lighting is poor, the software will not be able to recover the lost information. That’s why it is best to use a professional for these applications. A good assembly language programmer should be able to handle high-performance digital image processing applications. Images are captured in a two-dimensional space, so a digital image processing system will be able to analyze that data. The system will then analyze it using different algorithms to generate output images. There are four basic steps in digital image processing. The first step is image acquisition, and the second step is enhancing and restoring the image. The final step is to transform the image into a color image. Once this process is complete, the image will be converted into a digital file. Thresholding is a widely-used image segmentation process. This method is often used to segment an image into a foreground and an object. To do this, a threshold value is computed above or below the pixels of the object. The threshold value is usually fixed, but in many cases, it can be best computed from the image statistics and neighbourhood operations. Thresholding produces a binary image that represents black and white only, with no shades of Gray in between. Digital image processing involves different methods, which are as follows:Image Editing: It means changing/altering digital images using graphic software tools.Image Restoration: It means processing a corrupt image and taking out a clean original image to regain the lost information.Independent Component Analysis: It separates various signals computationally into additive subcomponents.Anisotropic Diffusion: This method reduces image noise without having to remove essential portions of the image.Linear Filtering: Another digital image processing method is about processing time-varying input signals and generating output signals.Neural Networks: Neural networks

Read MoreTypes of Geometric Modeling

Table of Content Solid Modeling Surface Modeling Wireframe Modeling The previous edition gave a brief introduction to Geometric Modeling and its features. Geometric modeling is the mathematical representation of an object’s geometry. It incorporates the use of curves to create models. It can be viewed either in 2D or 3D perspective. The general design is applied to different geometric structures, including sets and graphs. This article introduces geometric models which represent geometric objects and their properties mathematically. They can be viewed as collections of squares of different colors. On the other hand, geometric shapes can be considered mathematical equations. Geometric models can represent data in context, such as a digital image. Regardless of the modeling approach, all students should understand how CAD software works. A basic understanding of geometric modeling is essential for any aspiring designer. In contrast, button- push robots often neglect this aspect of CAD training. However, preparing students with the fundamental knowledge of geometric modeling is also critical for their competitive edge. This edition details the primary types of geometric modeling. Geometric modeling can be classified into the following: Solid Modeling Also known as volume modeling, this is the most widely used method, providing a complete description of solid modeling. Solid modeling defines an object by its nodes, edges, and surfaces; therefore, it gives a perfect and explicit mathematical representation of a precisely enclosed and filled volume. Solid modeling requires topology rules to guarantee that all covers are stitched together correctly. This geometry modeling procedure is based upon the “Half-Space” concept. A solid model begins with a solid, which is then stitched together using topology rules. Solid modeling has many benefits, including improved visualization and functional automation. CAD software can quickly calculate the actual geometry of complex shapes. A cube, for example, has six faces, a radius of 8.4 mm, and many radii. It has many angles and a shallow pyramid on each face. By applying solid modeling concepts, CAD programs can quickly calculate these attributes for a given cube. Similarly, a cube with rounded edges has many radii and faces. There are two prevalent ways of representing solid models:Constructive solid geometry: Constructive solid geometry combines primary solid objects (prism, cylinder, cone, sphere, etc.). These shapes are either added or deleted to form the final solid shape.Boundary representation: In boundary representation, an object’s definition is determined by its spatial boundaries. It describes the points, edges, and surfaces of a volume and issues the command to rotate and sweep bind facets into a third-dimensional solid. The union of these surfaces enables the formation of a surface that explicitly encloses a volume. Solid Modeling is the most widely used geometric modeling in three dimensions, and it serves the following purpose: Different solid modeling techniques are as follows: Surface Modeling Surface modeling represents the solid appearing object. Although it is a more complicated representation method than wireframe modeling, it is not as refined as solid modeling. Although surface and solid models look identical, the former cannot be sliced open the way solid models can be. This model makes use of B-splines and Bezier for controlling curves. When polygons or NURBS represent surfaces, the computer can convert the commands into mathematical models. These models are saved in files and can be opened for editing and analysis at any time. The process of importing models from other programs is often complex and problematic, resulting in ambiguous results. In contrast, surface modeling allows for precise changes to difficult surfaces. A typical surface modeling process involves the following steps: Surface modeling is used to: Geometric surface modeling has proven to be extremely useful in computer graphics. Multiresolution modeling involves the generation of various surfaces at different levels of detail and accuracy. The resulting surfaces are then applied to various problems, including general surface estimation from structured and unstructured data. One such application is a subdivision surface, which begins from a simple primitive and gradually adds tools and details. Wireframe Modeling A wireframe model is composed of points, lines, curves, and surfaces connected by point coordinates. Because the model is not solid, it is difficult to visualize, but it helps generate simple geometric shapes. The resulting model contains information on every object’s point, edge, face, and vertices. This model is beneficial for creating an orthographic isometric or perspective view. The lines within a wireframe connect to create polygons, such as triangles and rectangles, representing three-dimensional shapes when bound together. The outcome may range from a cube to a complex three-dimensional scene with people and objects. The number of polygons within a model indicates how detailed the wireframe 3D model is. Wireframe modeling helps in matching a 3D drawing model to its reference. Planar objects can be moved to a 3D location once they have been created. It allows the creator to match the vertex points to align with the desired reference and see the reference through the model. Although Wireframe modeling is a quick and easy way to demonstrate concepts, creating a fully detailed, precisely constructed model for an idea can be highly time-consuming. If it does not match what was visualized for the project, all that time and effort is wasted. In wireframe modeling, one can skip the detailed work and present a very skeletal framework that is simple to create and apprehensible to others. Using a wireframe model as a reference geometry will help you create a solid or surface model that follows a definite shape. In this way, you can easily visualize your model and select objects. The wireframe model is decomposed into a series of simple wireframes and the desired face topology.

Read More6 factors to consider while selecting any Algorithm Library

Processing geometric inputs play a crucial role in the product development cycle. Ever since the introduction of complex algorithm libraries, the NPD landscape has changed drastically, and for good. Typically, a well suitable library streamlines the work process by executing complicated tasks using a wide array of functions. An algorithm library basically works on the principle where it is fed with specific instructions to execute in a way with functionalities customised with it. For example, in manufacturing industry; there is a term known as point cloud library and it holds its expertise in converting millions of point cloud data into mesh models. There are particular algorithms to perform numerous perplexing tasks. There are platforms that use specific and unique functionalities and programming to get the job done. Manufacturing requirements, end product objectives lay down the necessities for choosing a particular algorithm library. This article sheds a light on 6 key factors to consider while selecting any algorithm library. The intersection of AI and 3D printing has long been predicted. AI can analyze a 3D model and determine which parts will fail to form the part. 3D printers can also remove material from failed regions and use AI to create a different version. AI can even analyze a part’s geometry and identify a potential problem so an alternative way to create it can be found. The end result? A better-designed part with a high rate of success. Required functionality Once data has been fed and stored, methods for compressing this kind of data become highly interesting. The different algorithm libraries come up with their own set of functionalities. Ideally, functionalities are best when developed by in-house development team, to suit up in accordance with design objectives. It is a good practice to develop functionalities to address complex operations as well as simple tasks. It is also essential to develop functions which might be of need down the line. In the end, one’s objective defines what functionality laced algorithm library will be in use. Data Size and Performance A huge data can be challenging to handle and share between project partners. A large data is directly proportional to a large processing time. All the investments in hardware and quality connections will be of little use if one is using poor performing library. An algorithm library that allows for the process of multiple scans simultaneously has to be the primary preference. One should also have a good definition of the performance expectations from the library, depending on your application whether real time or batch mode. Processing speed Libraries that automate manual processes often emphasize on processing speed, delivering improvements to either the processing or modeling. This allows for faster innovation and often better, yet singular, products. As witnessed in the case of point cloud, the ability to generate scan trees after a dataset has been processed greatly improves efficiency. A system will smooth interface that permits fast execution, greatly reduces the effort and time taken to handle large datasets. Make versus Buy This situation drops in at the starting phases of processing. Let us take an example of point cloud libraries. Some of the big brands producing point cloud processing libraries are Autodesk, Bentley, Trimble, and Faro. However, most of these systems arrive as packages with 3D modelling, thereby driving up costs. If such is the case, it is advisable to form an in-house point cloud library that suits the necessities. Nowadays, many open source platforms give out PCL to get the job done which has proven to be quite beneficial. Commercial Terms The commercial aspect also plays a vital role in while choosing an algorithmic library. Whether to opt for single or recurring payment depends upon the volume and nature of the project. There are different models to choose from, if one decides to go with licensing a commercial library: A: Single payment: no per license fees, and an optional AMC B: Subscription Based: Annual subscription, without per license fees C: Hybrid: A certain down payment and per license revenue sharing Whatever option you select, make sure there is a clause in the legal agreement that caps the increase in the charges to a reasonable limit. Storage, Platforms and Support Storage has become less of an issue than what it was even a decade ago. Desktops and laptops with more than a terabyte of capacity are all over the market. Not every algorithm library requires heavy graphics. Investing in a quality graphics card is only important if your preferred library demands heavy graphic usage. That doesn’t mean investing in cheap hardware and storage systems available. A quality processor with lot of RAM is decent if the processing task is CPU and memory intensive. Another point to look into, is the type of platform or interface to be exact, the algorithm library supports. Varied requirements call for varied platforms such as Microsoft, Mac, and Linux. The usage, and licensing should be taken into account before selecting an interface. Last but not the least, it is to mention that the inputs from customers are highly significant and there has to be a robust support system to address any grievance from the customer side. Having a trained support staff or a customized automated support system must be given high priority.

Read MorePoints to consider while developing regression suite for CAD Projects

As the development of software makes its progress, there comes a stage where it needs to be evaluated before concluding it as the final output. This phase is usually known as testing. Testing detects and pinpoints the bugs and errors in the software, which eventually leads to rectification measures. There are instances where the rectifications bring in new errors, thus sending it back to another round of testing, hence creating a repeating loop. This repeated testing of an already tested application to detect errors resulting from changes has a term — Regression Testing. Regression testing is the selective retesting of an application to ensure that modifications carried out has not caused unintended effects in the previously working application. In simple words, to ensure all the old functionalities are still running correctly with new changes. This is a very common step in any software development process by testers. Regression testing is required in the following scenarios: Although, every software application requires regression testing, there are specific points that apply to different applications, based on their functioning and utility. Computer-Aided design or CAD software applications require specific points to keep in mind before undergoing regression testing. Regression testing can be broadly classified into two categories, UI Testing and Functionality Testing. UI testing stands for User Interface which is basically testing an applications graphical interface. Numerous testing tools are available for carrying out UI testing. However, functional testing presents situation for us. This content focuses on the points to take care while carrying out functional regression testing. Here are most effective points to consider for functional regression testing: Failure to address performance issues can hamper the functionality and success of your application, with unwelcome consequences for end users if your application doesn’t perform to expectations.

Read MoreWhat is Mesh and what are the types of Meshing

Table of content Types of Meshing Types of Meshing as per Grid Structure For those acquainted with mechanical design and reverse engineering, they can testify to the fact that the road to a new product design involves several steps. In reverse engineering, the summary of the entire process involves scanning, point cloud generation, meshing, computer-aided designing, prototyping and final production. This section covers a very crucial part of the process — Meshing or simply put, Mesh. To put a simple definition, a mesh is a network that constitutes of cells and points. Mesh generation is the practice of converting the given set of points into a consistent polygonal model that generates vertices, edges and faces that only meet at shared edges. It can have almost any shape in any size. Each cell of the mesh represents an individual solution, which when combined, results in a solution for the entire mesh. Mesh is formed of facets which are connected to each other topologically. The topology is created using following entities: These include: Types of Meshing Meshes are commonly classified into two divisions, Surface mesh and Solid mesh. Let us go through each section one by one. Surface MeshA surface mesh is a representation of each individual surface constituting a volume mesh. It consists of faces (triangles) and vertices. Depending on the pre-processing software package, feature curves may be included as well. Generally, a surface mesh should not have free edges and the edges should not be shared by two triangles. The surface should ideally contain the following qualities of triangle faces: The surface mesh generation process should be considered carefully. It has a direct influence on the quality of the resulting volume mesh and the effort it takes to get to this step. Solid Mesh Solid mesh, also known as volume mesh, is a polygonal representation of the interior volume of an object. There are three different types of meshing models that can be used to generate a volume mesh from a well prepared surface mesh. The three types of meshing models are as follows: Once the volume mesh has been built, it can be checked for errors and exported to other packages if desired. Types of Meshing as per Grid Structure A grid is a cuboid that covers entire mesh under consideration. Grid mainly helps in fast neighbor manipulation for a seed point. Meshes can be classified into two divisions from the grid perspective, namely Structured and Unstructured mesh. Let us have a look at each of these types. Structured Mesh Structured meshes are meshes which exhibits a well-known pattern in which the cells are arranged. As the cells are in a particular order, the topology of such mesh is regular. Such meshes enable easy identification of neighboring cells and points, because of their formation and structure. Structured meshes are applied over rectangular, elliptical, spherical coordinate systems, thus forming a regular grid. Structured meshes are often used in CFD. Unstructured Mesh Unstructured meshes, as the name suggests, are more general and can randomly form any geometry shape. Unlike structured meshes, the connectivity pattern is not fixed hence unstructured meshes do not follow a uniform pattern. However, unstructured meshes are more flexible. Unstructured meshes are generally used in complex mechanical engineering projects. Get access to our mesh tools library today Mesh Tools library offers a comprehensive set of operation for meshes for all your needs. Developed in C++, this library can be easily integrated in to your product. To learn more,

Read MorePoint Cloud Operations

No output is always perfect no matter how much the technology has evolved. Even though point cloud generation has eased up manufacturing process, it comes with its own anomaly. Generally, a point cloud data is accompanied by Noises and Outliers. Noises or Noisy data means the data information is contaminated by unwanted information; such unwanted information contributes to the impurity of the data while the underlying information still dominates. A noisy point cloud data can be filtered and the noise can be absolutely discarded to produce a much refined result. If we carefully examine the image below, it illustrates a point cloud data with noises. The surface area is usually filled with extra features which can be eliminated. After carrying out Noise Reduction process, the image below illustrates the outcome, which a lot smoother data without any unwanted elements. There are many algorithms and processes for noise reduction. Outlier, on the contrary, is a type of data which is not totally meaningless, but might turn out to be of interest. Outlier is a data value that differs considerably from the main set of data. It is mostly different from the existing group. Unlike noises, outliers are not removed outright but rather, it is put under analysis sometimes. The images below clearly portray what outliers are and how the point cloud data looks like once the outliers are removed. Point Cloud Decimation We have learned how a point cloud data obtained comes with noise and outliers and the methods to reduce them to make the data more executable for meshing. Point cloud data undergoes several operations to treat the anomalies existing within. Two of the commonly used operations are Point Cloud Decimation and Point Cloud Registration. A point cloud data consists of millions of small points, sometimes even more than what is necessary. Decimation is the process of discarding points from the data to improve performance and reduce usage of disk. Decimate point cloud command reduces the size of point clouds. The following example shows how a point cloud underwent decimation to reduce the excess points. Point Cloud Registration Scanning a commodity is not a one step process. A lot of time, scanning needs to be done separately from different angles to get views. Each of the acquired data view is called a dataset. Every dataset obtained from different views needs to be aligned together into a single point cloud data model, so that subsequent processing steps can be applied. The process of aligning various 3D point cloud data views into a complete point cloud model is known as registration. The purpose is to find the relative positions and orientations of the separately acquired views, such that the intersecting regions between them overlap perfectly. Take a look at the example given below. The car door data sets have been merged to get a complete model.

Read MorePoint Clouds | Point cloud formats and issues

Table of content Different 3D point cloud file formats Challenges with point cloud data Whether working on a renovation project or making information data about an as-built situation, it is understandable that the amount of time and energy spent analyzing the object/project can be pretty debilitating. Technical literature over the years has come up with several methods to make a precise approach. But inarguably, the most prominent method is the application of Point Clouds. 3D scanners gather point measurements from real-world objects or photos for a point cloud that can be translated to a 3D mesh or CAD model. But what is a Point Cloud? A standard definition of point clouds would be – A point cloud collection of data points defined by a given coordinates system. In a 3D coordinates system, for example, a point cloud may determine the shape of some real or created physical system. Point clouds are used to create 3D meshes and other models used in 3D modeling for various fields, including medical imaging, architecture, 3D printing, manufacturing, 3D gaming, and various virtual reality (VR) applications. When taken together, a point is identified by three coordinates that correlate to a precise point in space relative to the end of origin. Different 3D point cloud file formats Scanning a space or an object and bringing it into designated software lets us manipulate the scans further, and stitch them together, which can be exported to be converted into a 3D model. Now there are numerous file formats for 3D modeling. Different scanners yield raw data in different formats. One needs other processing software for such files, and each & every software has its exporting capabilities. Most software systems are designed to receive a large number of file formats and have flexible export options. This section will walk you through some known and commonly used file formats. Securing the data in these common formats enables using different software for processing without approaching a third-party converter. Standard point cloud file formatsOBJ: It is a simple data format that only represents 3D geometry, color, and texture. And this format has been adopted by a wide range of 3D graphics applications. It is commonly ASCII (American Standard Code for Information Interchange). ASCII is a rooted language based on a binary that conveys information using text. Standard ASCII represents each character as a 7-bit binary number. In reverse engineering, characters are the focus of data. E57: E57 is a compact and widely used open, vendor-neutral file format for point clouds, and it can also be used to store images and data produced by laser scanners and other 3D imaging systems. Its compact, binary-based format combines the speed and accessibility of ASCII with the precision and accuracy of binary. E57 files can also represent normals, colors, and scalar field intensity. However, E57 is not universally compatible across all software platforms. PLY: The full form of PLY is the polygon file format. PLY was built to store 3D data. It uses lists of nominally flat polygons to represent objects. The aim is to store a more significant number of physical elements. It makes the file format capable of representing transparency, color, texture, coordinates, and data confidence values. It is found in ASCII and binary versions. PTS, PTX & XYZ: These three formats are familiar and compatible with most BIM software. It conveys data in lines of text. They can be easily converted and manipulated. PCG, RCS & RCP: These three formats were developed by Autodesk to meet their software suite’s demands. RCS and RCP are relatively newer. Binary point cloud file formats are better than ASCII or repurposed file types. It is because the latter is more universal and has better long-term storage capabilities. However, this type of format can be used to create a backup of the original data. If you need to convert binary point cloud files to ASCII, back up your binary files before reformatting them. This way, you can restore your data if something goes wrong. Challenges with point cloud data In reverse engineering, you may encounter several Point Cloud issues. The laser scanning procedure has catapulted product design technology to new heights. 3D data capturing system has come a long way, and we can see where it’s headed. As more and more professionals and end users are using new devices, the scanner market is rising at a quick pace. But along with a positive market change, handling and controlling the data available becomes a vital issue. These problems can result in poor quality point cloud data. Read on to learn more about five key challenges professionals working with point cloud face are: Data Quality: You must identify the quality issues in reverse engineering point cloud data. Reconstruction algorithms differ in their behavior based on the properties of point clouds. Many studies have classified the properties of point clouds by their effect on algorithms. The quality of point clouds affects the precision of the reconstructions. Point clouds produced by body scanners typically contain many duplicated and overlapping patches. These features cause a large amount of noise and redundancy in the final data. Reconstruction of free-form surfaces requires the use of clean-up meshes. This data must be transformed into a model that is consistent and accurate. Fortunately, this task is possible with the help of cloud-to-cloud alignment tools. Data Format: New devices out there in the market yield back data in a new form. Often, one needs to bring together data in different formats from different devices against a compatible software tool. It presents a not-so-easy situation. Identifying the wrong point cloud file format in reverse engineering is of great importance. Often when a company attempts to perform reverse engineering, it will be presented with a point cloud file in the wrong format. It can cause problems because the data is not in the correct format. EMPA has made it their business to work with point clouds as soon as possible. However, this doesn’t mean that you should give up

Read MoreMesh Generation Algorithms

Table of content FAQs Algorithm methods for Quadrilateral or Hexahedral Mesh Algorithm methods for Triangular and Tetrahedral Mesh Mesh is the various aspects upon which a mesh can be classified. Mesh generation requires expertise in the areas of meshing algorithms, geometric design, computational geometry, computational physics, numerical analysis, scientific visualization, and software engineering to create a mesh tool. FAQs Over the years, mesh generation technology has evolved shoulder to shoulder with increasing hardware capability. Even with fully automatic mesh generators, there are many cases where the solution time is less than the meshing time. Meshing can be used for a wide array of applications; however, the principal application of interest is the finite element method. Surface domains are divided into triangular or quadrilateral elements, while volume domain is divided mainly into tetrahedral or hexahedral elements. A meshing algorithm can ideally define the shape and distribution of the elements. A vital step of the finite element method for numerical computation is mesh generation algorithms. A given domain is to be partitioned into simpler ‘elements.’ There should be a few elements, but some domain portions may need small elements to make the computation more accurate. All elements should be ‘well-shaped.’ Let us walk through different meshing algorithms based on two common domains: quadrilateral/hexahedral mesh and triangle/tetrahedral mesh. Algorithm methods for Quadrilateral or Hexahedral Mesh Grid-Based MethodThe grid-based method involves the following steps: Medial Axis MethodThe medial axis method involves an initial decomposition of the volumes. The technique involves a few steps as given below: Plastering methodPlastering is the process in which elements are placed, starting with the boundaries and advancing towards the center of the volume. The steps of this method are as follows: Whisker Weaving MethodWhisker weaving is based on the spatial twist continuum (STC) concept. The STC is the dual of the hexahedral mesh, represented by an arrangement of intersecting surfaces that bisects hexahedral elements in each direction. The whisker weaving algorithm can be explained in the following steps: Paving MethodThe paving method has the following steps to generate a quadrilateral mesh: Mapping Mesh MethodThe Mapped method for quad mesh generation involves the following steps: Algorithm methods for Triangular and Tetrahedral MeshQuadtree Mesh MethodThe quadtree mesh method recursively subdivided a square containing the geometric model until the desired resolution is reached. The steps for two-dimensional quadtree decomposition of a model are as follows: Delaunay Triangulation MethodA Delaunay triangulation for a set P of discrete points in the plane is a triangulation DT such that no points in P are inside the circum-circle of any triangles in DT. The steps of construction Delaunay triangulation are as follows: Delaunay Triangulation maximizes the minimum angle of all the triangle angles and tends to avoid skinny triangles. Advancing Front MethodAnother famous family of triangular and tetrahedral mesh generation algorithms is the advancing front or moving front method. The mesh generation process is explained in the following steps: Spatial Decomposition MethodThe steps for the spatial decomposition method are as follows: Sphere Packing MethodThe sphere packing method follows the given steps: Get access to our mesh tools library today Mesh Tools library offers a comprehensive set of operation for meshes for all your needs. Developed in C++, this library can be easily integrated in to your product. To learn more,

Read MoreMesh Quality

The quality of a mesh plays a significant role in the accuracy and stability of the numerical computation. Regardless of the type of mesh used in your domain, checking the quality of your mesh is a must. The ‘good meshes’ are the ones that produce results with fairly acceptable level of accuracy, considering that all other inputs to the model are accurate. While evaluating whether the quality of the mesh is sufficient for the problem under modeling, it is important to consider attributes such as mesh element distribution, cell shape, smoothness, and flow-field dependency. Element Distribution It is known that meshes are made of elements (vertices, edges and faces). The extent, to which the noticeable features such as shear layers, separated regions, shock waves, boundary layers, and mixing zones are resolved, relies on the density and distribution of mesh elements. In certain cases, critical regions with poor resolution can dramatically affect results. For example, the prediction of separation due to an adverse pressure gradient depends heavily on the resolution of the boundary layer upstream of the point of separation. Cell Quality The quality of a cell has a crucial impact on the accuracy of the entire mesh. The quality of cell is analyzed by the virtue of three aspects: Orthogonal quality, Aspect ratio and Skewness. Orthogonal Quality: An important indicator of mesh quality is an entity referred to as the orthogonal quality. The worst cells will have an orthogonal quality close to 0 and the best cells will have an orthogonal quality closer to 1. Aspect Ratio: Aspect ratio is an important indicator of mesh quality. It is a measure of stretching of the cell. It is computed as the ratio of the maximum value to the minimum value of any of the following distances: the normal distances between the cell centroid and face centroids and the distances between the cell centroid and nodes. Skewness: Skewness can be defined as the difference between the shape of the cell and the shape of an equilateral cell of equivalent volume. Highly skewed cells can decrease accuracy and destabilize the solution. Smoothness Smoothness redirects to truncation error which is the difference between the partial derivatives in the equations and their discrete approximations. Rapid changes in cell volume between adjacent cells results in larger truncation errors. Smoothness can be improved by refining the mesh based on the change in cell volume or the gradient of cell volume. Flow-Field Dependency The entire effects of resolution, smoothness, and cell shape on the accuracy and stability of the solution process is dependent upon the flow field being simulated. For example, skewed cells can be acceptable in benign flow regions, but they can be very damaging in regions with strong flow gradients. Correct Mesh Size Mesh size stands out as one of the most common problems to an equation. The bigger elements yield bad results. On the other hand, smaller elements make computing so long that it takes a long amount of time to get any result. One might never really know where exactly is the mesh size is on the scale. It is important to consider chosen analysis for different mesh sizes. As smaller mesh means a significant amount of computing time, it is important to strike a balance between computing time and accuracy. Too coarse mesh leads to erroneous results. In places where big deformations/stresses/instabilities take place, reducing element sizes allow for greatly increased accuracy without great expense in computing time.

Read More