Optimization Problems and Techniques

- November 8 2019

- admin

In mathematics and computer science, an optimization problem is a computational problem in which the objective is to find the best solution among all feasible solutions.

Applying optimization to design problems provides insight that is hard to obtain otherwise. An optimization model can compare the current design with the best possible one, and it exposes the limitations and implied costs of arbitrary rules and policy decisions. A well-designed optimization model can also aid in what-if analysis, revealing where improvements can be made or where trade-offs may be needed. The application of optimization to engineering problems spans multiple disciplines.

Optimization methods fall into different categories. Some are deterministic, while others are statistical or probabilistic. In each case, an algorithm evaluates a set of candidate solutions and chooses the best one. The problem domain is specified by constraints, such as the range of values a variable may take. Finding the optimum requires evaluating the objective function at candidate points. When the objective is an error measure, the optimal solution is the one with the smallest error; zero is the lowest possible value, although it is not always attainable.

Optimization Problems

There are different types of optimization problems. A few simple ones do not require formal optimization, such as problems with obvious answers or with no decision variables. In most cases, however, a mathematical formulation is necessary to reach an optimal result. The objective is often to reduce cost or to minimize risk. A problem can also be multi-objective and involve several decisions.

There are three main elements in an optimization problem: an objective, variables, and constraints. Each variable can take different values, and the aim is to find the optimal value for each one. The objective is the desired result or goal of the problem, and the constraints restrict the values the variables may take.
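To make the three elements concrete, here is a minimal Python sketch of a made-up toy problem (it is not from the source): pick integer side lengths for a rectangle so that the area is as large as possible while the perimeter stays within a limit. The names `best_rectangle` and `max_perimeter` are illustrative only.

```python
from itertools import product

def best_rectangle(max_perimeter):
    """Brute-force search over integer side lengths (the decision variables)."""
    best = None
    for width, height in product(range(1, max_perimeter), repeat=2):
        # Constraint: the perimeter must not exceed the limit.
        if 2 * (width + height) > max_perimeter:
            continue
        area = width * height  # Objective: the quantity to maximize.
        if best is None or area > best[0]:
            best = (area, width, height)
    return best

area, width, height = best_rectangle(20)
print(area, width, height)  # 25 5 5
```

Exhaustive search is only practical for tiny problems like this one, but it shows where the objective, the variables, and the constraint each appear.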

Let us walk through the types of optimization problems that arise as these elements vary.

Continuous Optimization versus Discrete Optimization

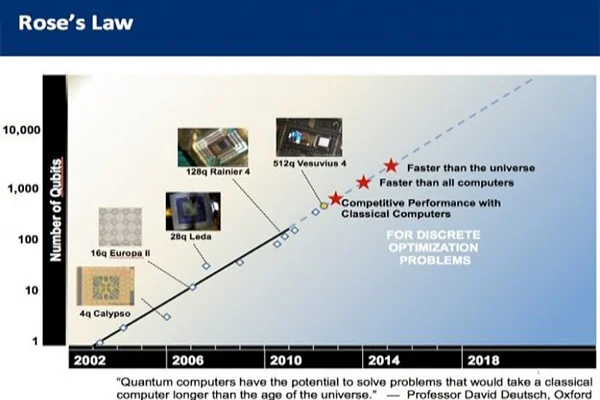

Models with discrete variables are discrete optimization problems, while models with continuous variables are continuous optimization problems. Continuous optimization problems are generally easier to solve than discrete ones. A discrete optimization problem looks for an object such as an integer, permutation, or graph from a countable set. However, improvements in algorithms, coupled with advances in computing technology, have increased the size and complexity of the discrete optimization problems that can be solved efficiently. Note that continuous optimization algorithms are also essential in discrete optimization, because many discrete optimization algorithms generate a series of continuous sub-problems.
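One way to see the link between the two is a knapsack problem. The sketch below uses hypothetical items and a hypothetical capacity: the discrete version (take an item or leave it) is solved by enumeration, while the continuous relaxation (fractions of items allowed) is solved greedily. The relaxed value is an upper bound on the discrete one, which is the kind of continuous sub-problem that discrete algorithms rely on.

```python
from itertools import combinations

# Hypothetical items as (value, weight) pairs and a weight capacity.
ITEMS = [(60, 10), (100, 20), (120, 30)]
CAPACITY = 50

def knapsack_discrete(items, capacity):
    """0/1 knapsack by exhaustive enumeration: each item is taken or not."""
    best = 0
    for r in range(len(items) + 1):
        for subset in combinations(items, r):
            if sum(w for _, w in subset) <= capacity:
                best = max(best, sum(v for v, _ in subset))
    return best

def knapsack_continuous(items, capacity):
    """Continuous relaxation: fractions of items are allowed, so a greedy
    pass in order of value per unit weight is optimal."""
    total, remaining = 0.0, capacity
    for value, weight in sorted(items, key=lambda it: it[0] / it[1], reverse=True):
        take = min(weight, remaining)
        total += value * take / weight
        remaining -= take
    return total

print(knapsack_discrete(ITEMS, CAPACITY), knapsack_continuous(ITEMS, CAPACITY))  # 220 240.0
```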

Unconstrained Optimization versus Constrained Optimization

An essential distinction is between problems that have no constraints on the variables and problems that do. Unconstrained optimization problems arise directly in many practical applications and also in the reformulation of constrained optimization problems. Constrained optimization problems appear in applications with explicit constraints on the variables. They are further divided according to the nature of the constraints (linear, nonlinear, or convex) and the smoothness of the functions (differentiable or non-differentiable).
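The reformulation of a constrained problem as an unconstrained one can be sketched with a quadratic penalty. The example below is an illustration under assumed data, not a method from the source: it minimizes (x - 3)^2 subject to x <= 1 by adding a penalty term weighted by `mu` and minimizing the result with a simple ternary search.

```python
def ternary_search_min(f, lo, hi, iterations=200):
    """Minimize a unimodal function on [lo, hi] without derivatives."""
    for _ in range(iterations):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if f(m1) < f(m2):
            hi = m2
        else:
            lo = m1
    return (lo + hi) / 2

def objective(x):
    return (x - 3) ** 2

def penalized(x, mu):
    # Quadratic penalty for violating the constraint x <= 1.
    violation = max(0.0, x - 1)
    return objective(x) + mu * violation ** 2

for mu in (1, 10, 1000):
    x_star = ternary_search_min(lambda x: penalized(x, mu), -10, 10)
    print(mu, round(x_star, 3))
```

For this toy problem the penalized minimizer is (3 + mu) / (1 + mu), so it approaches the constrained optimum x = 1 as `mu` grows.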

None, One, or Many Objectives

Although most optimization problems have a single objective function, some have no objective function or multiple objective functions. Problems with no objective, known as feasibility problems, ask only for a point that satisfies the constraints. Multi-objective optimization problems arise in fields such as engineering, economics, and logistics. Often, problems with multiple objectives are reformulated as single-objective problems.
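A common way to reformulate several objectives as one is a weighted sum. The following sketch assumes three hypothetical candidate designs scored on cost and risk (both to be minimized); the weights are arbitrary illustrative choices.

```python
# Hypothetical candidate designs, each scored on two objectives to minimize.
CANDIDATES = {
    "A": {"cost": 10.0, "risk": 0.9},
    "B": {"cost": 14.0, "risk": 0.5},
    "C": {"cost": 20.0, "risk": 0.2},
}

def weighted_sum_choice(candidates, cost_weight, risk_weight):
    """Collapse two objectives into one score and return the best candidate."""
    def score(name):
        c = candidates[name]
        return cost_weight * c["cost"] + risk_weight * c["risk"]
    return min(candidates, key=score)

for risk_weight in (1, 15, 50):
    print(risk_weight, weighted_sum_choice(CANDIDATES, 1, risk_weight))
```

As the weight on risk increases, the choice moves from the cheapest design (A) through B to the safest one (C), which shows that the result depends on how the objectives are traded off.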

Deterministic Optimization versus Stochastic Optimization

In deterministic optimization, the data for the given problem is known accurately. Sometimes, however, the data cannot be known precisely. One reason is simple measurement error. Another is that some data describe the future and therefore cannot be known with certainty. When this uncertainty is incorporated into the model, the problem is one of optimization under uncertainty, also called stochastic optimization.
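The difference can be illustrated with a small ordering problem. The sketch below assumes a hypothetical price, cost, and three equally likely demand scenarios. The deterministic plan optimizes against the average demand only, while the stochastic plan maximizes profit summed over all scenarios.

```python
# Hypothetical data: unit price, unit cost, and equally likely demand scenarios.
PRICE, COST = 10, 7
DEMAND_SCENARIOS = [80, 100, 120]

def profit(order, demand):
    """Profit when `order` units are bought and at most `demand` are sold."""
    return PRICE * min(order, demand) - COST * order

def total_profit(order, scenarios):
    # Proportional to expected profit because the scenarios are equally likely.
    return sum(profit(order, d) for d in scenarios)

def best_order(scenarios, candidates=range(0, 151)):
    return max(candidates, key=lambda q: total_profit(q, scenarios))

mean_demand = sum(DEMAND_SCENARIOS) // len(DEMAND_SCENARIOS)
deterministic = best_order([mean_demand])   # plans for the average only
stochastic = best_order(DEMAND_SCENARIOS)   # plans for every scenario
print(deterministic, stochastic)  # 100 80
```

With these numbers, ordering for the average demand (100 units) earns less across the scenarios than the more cautious stochastic plan (80 units), because unsold units are costly relative to the margin.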

Two widely used classes of optimization problems are:

Linear Programming:

In linear programming (LP) problems, the objective and all of the constraints are linear functions of the decision variables.

Because all linear functions are convex, linear programming problems are inherently easier to solve than nonlinear problems.
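A small linear program can be solved by hand or by a short script. The sketch below uses a hypothetical two-variable LP and relies on the fact that a bounded, feasible LP attains its optimum at a corner of the feasible region. It enumerates the corners directly, which is fine for illustration but is not how production solvers such as the simplex method work.

```python
from fractions import Fraction
from itertools import combinations

# Hypothetical LP: maximize 3x + 5y subject to a*x + b*y <= c for each row.
OBJECTIVE = (3, 5)
CONSTRAINTS = [
    (1, 0, 4),    # x <= 4
    (0, 2, 12),   # 2y <= 12
    (3, 2, 18),   # 3x + 2y <= 18
    (-1, 0, 0),   # x >= 0
    (0, -1, 0),   # y >= 0
]

def solve_lp_by_vertices(objective, constraints):
    """Evaluate the objective at every feasible corner of a bounded 2-D region."""
    best = None
    for (a1, b1, c1), (a2, b2, c2) in combinations(constraints, 2):
        det = a1 * b2 - a2 * b1
        if det == 0:
            continue  # parallel boundary lines never intersect
        # Cramer's rule for the intersection of the two boundary lines.
        x = Fraction(c1 * b2 - c2 * b1, det)
        y = Fraction(a1 * c2 - a2 * c1, det)
        if all(a * x + b * y <= c for a, b, c in constraints):
            value = objective[0] * x + objective[1] * y
            if best is None or value > best[0]:
                best = (value, x, y)
    return best

value, x, y = solve_lp_by_vertices(OBJECTIVE, CONSTRAINTS)
print(value, x, y)  # 36 2 6
```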

Quadratic Programming:

In the quadratic programming (QP) problem, the objective is a quadratic function of the decision variables, and the constraints are all linear functions of the variables.

A widely used Quadratic Programming problem is the Markowitz mean-variance portfolio optimization problem. The objective is the portfolio variance, and the linear constraints dictate a lower bound for portfolio return.
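For two assets, the mean-variance problem reduces to one variable and can be solved in closed form. The sketch below is a simplified illustration with assumed inputs: it adds a long-only restriction (weights between 0 and 1) and assumes the first asset has the higher expected return. The function names and numbers are hypothetical.

```python
def min_variance_weight(mu1, mu2, var1, var2, cov, target_return):
    """Weight w on asset 1 (asset 2 gets 1 - w) that minimizes portfolio
    variance subject to expected return >= target_return and 0 <= w <= 1.
    Assumes mu1 > mu2, so the return constraint is a lower bound on w."""
    # Unconstrained minimizer of w^2*var1 + (1-w)^2*var2 + 2*w*(1-w)*cov.
    w_free = (var2 - cov) / (var1 + var2 - 2 * cov)
    # Return constraint: w*mu1 + (1-w)*mu2 >= target_return.
    w_min = max(0.0, (target_return - mu2) / (mu1 - mu2))
    if w_min > 1.0:
        raise ValueError("target return is not attainable")
    # The variance is convex in w, so clipping to the feasible interval is optimal.
    return min(1.0, max(w_min, w_free))

def portfolio_variance(w, var1, var2, cov):
    return w * w * var1 + (1 - w) ** 2 * var2 + 2 * w * (1 - w) * cov

w = min_variance_weight(0.10, 0.04, 0.04, 0.01, 0.0, target_return=0.07)
print(round(w, 3), round(portfolio_variance(w, 0.04, 0.01, 0.0), 4))
```

With a 7% target the return constraint is binding and forces half of the portfolio into the riskier asset; with a lower target of 5% the unconstrained minimum-variance weight of 0.2 already satisfies it.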

Linear and Quadratic programming

Optimization is part of everyday life. We all want to make the most of our available time and use it productively. Optimization is applied to everything from time management to supply chain problems. As discussed above, optimization refers to finding the best possible solution out of all feasible solutions. Two of its most important branches are linear programming and quadratic programming. Let us take a closer look.

Linear Programming

Linear programming is a technique for finding the best outcome in a model whose relationships are expressed as linear functions. The actual relationships may be much more complicated, but they can often be approximated by linear ones.

Linear programming is widely used in optimization for several reasons:

- In operations research, many complex real-life problems can be expressed as linear programming problems.

- Many algorithms in specific optimization problems operate by solving Linear Programming problems as sub-problems.

- Many key concepts of optimization theory, such as duality, decomposition, convexity, and convexity generalizations, have been inspired by or derived from ideas of Linear programming.

- Linear programming was used heavily in the early development of microeconomics, and it is still used in planning, production, transportation, technology, and other fields.

Quadratic Programming

Quadratic programming is the method of solving a particular kind of optimization problem: optimizing (minimizing or maximizing) a quadratic objective function subject to one or more linear constraints. Quadratic programming is a special case of nonlinear programming. The objective function in QP may contain bilinear terms and polynomial terms up to second order. The constraints are linear and can be both equalities and inequalities. Quadratic programming is widely used in optimization. Applications include:

- Image and signal processing

- Optimization of financial portfolios

- Performing the least-squares method of regression

- Controlling scheduling in chemical plants

- Solving more complex non-linear programming problems

- Usage in operations research and statistical work
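Least-squares regression is perhaps the simplest item on this list to show in code, because it is a quadratic program with no constraints. The sketch below fits a line to assumed sample data by solving the normal equations, which are the conditions under which the gradient of the quadratic objective is zero. The function name `fit_line` is illustrative.

```python
def fit_line(xs, ys):
    """Least-squares line y = slope*x + intercept via the normal equations,
    i.e. the stationarity conditions of the quadratic sum-of-squares objective."""
    n = len(xs)
    sx, sy = sum(xs), sum(ys)
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    slope = (n * sxy - sx * sy) / (n * sxx - sx * sx)
    intercept = (sy - slope * sx) / n
    return slope, intercept

# Hypothetical data lying exactly on y = 2x + 1.
print(fit_line([0, 1, 2, 3], [1, 3, 5, 7]))  # (2.0, 1.0)
```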

Types of Optimization Techniques

There are many types of mathematical and computational optimization techniques. An essential step in applying any of them is to categorize the optimization model, since the algorithms used for solving optimization problems are tailored to the nature of the problem.

Integer programming, for example, is a form of mathematical programming in which some or all of the variables are restricted to integer values. Problems of this kind can be traced back to Archimedes, who described the problem of determining the composition of a herd of cattle. Advances in theoretical research and computational codes led to its formal development.
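As an illustration of why integer restrictions matter, the sketch below solves a small hypothetical integer program by exhaustive enumeration. The problem data and the search bound `upper` are assumptions chosen for the example; real integer programming solvers use far more efficient methods such as branch and bound.

```python
from itertools import product

def solve_integer_program(upper=10):
    """Maximize 5x + 4y over non-negative integers subject to
    6x + 4y <= 24 and x + 2y <= 6, by exhaustive enumeration."""
    feasible = (
        (x, y)
        for x, y in product(range(upper + 1), repeat=2)
        if 6 * x + 4 * y <= 24 and x + 2 * y <= 6
    )
    return max(feasible, key=lambda p: 5 * p[0] + 4 * p[1])

print(solve_integer_program())  # (4, 0)
```

If the variables were allowed to be fractional, the optimum would be x = 3, y = 1.5 with value 21. Rounding that point down to (3, 1) gives 19, which is worse than the true integer optimum of 20 at (4, 0), so solving the continuous problem and rounding is not a reliable shortcut.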

Genetic algorithms (GAs) are another computational optimization technique. These algorithms borrow principles from biological evolution to optimize complex systems. The main principle behind GAs is to maintain a population of candidate solutions and improve it over successive generations through selection, crossover, and mutation. Unlike linear programming, which minimizes a linear objective function subject to linear inequality constraints, GAs need only a way to score candidates, so they can also handle nonlinear functions of real numbers. Problems of that kind are often harder than the linear case.

Different forms of genetic algorithms are widely used for finding good solutions to a problem, and they have been in use for decades. Together with constrained optimization methods such as linear programming (LP), they are among the most commonly used methods. Genetic algorithms have also changed the way optimization problems are approached. For example, they can help maximize the yield of a given product or service.
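A minimal genetic algorithm fits in a few lines. The sketch below is one simple variant among many, applied to a standard toy problem (maximizing the number of 1s in a bit string). The population size, mutation rate, tournament selection, one-point crossover, and elitism are all illustrative choices rather than a recommended configuration.

```python
import random

def genetic_algorithm(length=20, pop_size=30, generations=60,
                      mutation_rate=0.02, seed=0):
    """Maximize the number of 1s in a bit string (the OneMax toy problem)."""
    rng = random.Random(seed)
    fitness = sum  # the fitness of a bit list is its count of 1s
    population = [[rng.randint(0, 1) for _ in range(length)]
                  for _ in range(pop_size)]
    history = []  # best fitness seen at the start of each generation
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        next_gen = [population[0][:]]  # elitism: keep the best unchanged
        while len(next_gen) < pop_size:
            # Tournament selection: the fitter of two random individuals.
            p1 = max(rng.sample(population, 2), key=fitness)
            p2 = max(rng.sample(population, 2), key=fitness)
            cut = rng.randint(1, length - 1)  # one-point crossover
            child = p1[:cut] + p2[cut:]
            # Mutation: flip each bit with a small probability.
            child = [bit ^ 1 if rng.random() < mutation_rate else bit
                     for bit in child]
            next_gen.append(child)
        population = next_gen
    best = max(population, key=fitness)
    return best, history

best, history = genetic_algorithm()
print(sum(best), history[0], history[-1])
```

Because the best individual is always copied into the next generation, the best fitness never decreases from one generation to the next. A genetic algorithm gives no guarantee of reaching the true optimum, which is why the example prints the progress instead of asserting a final value.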

The term optimization is often used synonymously with mathematical programming. The field combines the study of the mathematical structure of optimization problems, the invention of methods for solving them, and the implementation of those methods on computers. As faster computers have been developed, the size and complexity of the optimization problems that can be solved have increased, and the development of optimization techniques has followed a similar pattern. This is particularly true of genetic algorithms, which have several applications in biological and chemical research.

Sources

Yadav, Dr. Rakhee; Kamble, Mr. Sandeep; Rao, Dr. DS; Saradha, Dr. R.; Anusarkar, Ms. Gauri. “Data Mining and Business Intelligence.” mu.ac.in. https://mu.ac.in/wp-content/uploads/2022/05/MCA-Data-Mining-and-Business-Intelligence-2.pdf (accessed June 4, 2022).