Optimization Problems – Linear Programming and Quadratic Programming

- June 8 2022

- admin

Students typically first meet optimization problems in calculus, where they are given a function that must be maximized or minimized. They use calculus tools to find its critical points and then apply a test, such as the second-derivative test, to decide whether each one is a maximum, a minimum, or neither. For example, they may need to choose the dimensions of a cylindrical can that holds V cm³ of liquid (say, 355 cm³). Because the cost of the can depends on the amount of metal used, the goal is to find the radius and height that minimize its surface area. Students usually start by sketching the situation, express the quantity to optimize as a function of a single variable, and then use these tools to find the best solution.
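
A minimal Python sketch of the can problem follows. It assumes a closed can (top, bottom, and side all made of metal) and an illustrative volume of 355 cm³; the function names are hypothetical. Using the volume constraint h = V/(πr²) to eliminate the height leaves the single-variable area function A(r) = 2πr² + 2V/r, whose only critical point satisfies r³ = V/(2π).

```python
import math


def can_surface_area(r: float, volume: float) -> float:
    """Metal area (top + bottom + side) of a closed cylinder with radius r and fixed volume."""
    h = volume / (math.pi * r**2)  # height forced by the volume constraint
    return 2 * math.pi * r**2 + 2 * math.pi * r * h


def optimal_can(volume: float) -> tuple[float, float]:
    """Return (radius, height) at the critical point of A(r) = 2*pi*r^2 + 2V/r."""
    # A'(r) = 4*pi*r - 2V/r^2 = 0  gives  r^3 = V / (2*pi)
    r = (volume / (2 * math.pi)) ** (1 / 3)
    h = volume / (math.pi * r**2)
    return r, h


def area_second_derivative(r: float, volume: float) -> float:
    """A''(r) = 4*pi + 4V/r^3; a positive value confirms a local minimum."""
    return 4 * math.pi + 4 * volume / r**3


if __name__ == "__main__":
    V = 355.0  # cm^3, illustrative volume (assumption)
    r, h = optimal_can(V)
    print(f"r = {r:.2f} cm, h = {h:.2f} cm, A = {can_surface_area(r, V):.1f} cm^2")
    print("minimum" if area_second_derivative(r, V) > 0 else "not a minimum")
```

Since V = 2πr³ at the critical point, h = V/(πr²) = 2r: under this simple model the cheapest closed can is exactly as tall as it is wide.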

Optimization problems come in different types. A few simple ones do not require formal optimization, such as problems with obvious answers or with no decision variables. In most cases, however, a mathematical formulation and solution are needed to reach the best result. The objective is often to reduce cost or risk, and a problem can also be multi-objective and involve several decisions.

Linear Programming

In linear programming (LP) problems, the objective and all of the constraints are linear functions of the decision variables.
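
To make the definition concrete, here is a small Python sketch with a hypothetical two-variable LP: maximize 3x + 5y subject to x ≤ 4, 2y ≤ 12, 3x + 2y ≤ 18, x ≥ 0, and y ≥ 0. A linear objective over a bounded polygon attains its maximum at a corner, so the sketch checks every intersection of two constraint boundaries. This is brute-force vertex enumeration, not the simplex method or what a production solver does, and it assumes the feasible region is nonempty and bounded.

```python
from itertools import combinations

# A constraint (a1, a2, b) means a1*x + a2*y <= b.
Constraint = tuple[float, float, float]


def solve_lp_2d(c: tuple[float, float], constraints: list[Constraint], tol: float = 1e-9):
    """Maximize c[0]*x + c[1]*y by enumerating vertices of a bounded, nonempty polygon."""
    best_point, best_value = None, float("-inf")
    for (a1, a2, b), (d1, d2, e) in combinations(constraints, 2):
        det = a1 * d2 - a2 * d1
        if abs(det) < tol:  # parallel boundaries never meet in a single point
            continue
        # Cramer's rule for the boundary lines a1*x + a2*y = b and d1*x + d2*y = e
        x = (b * d2 - a2 * e) / det
        y = (a1 * e - b * d1) / det
        # Keep only intersections that satisfy every constraint (true vertices).
        if all(p * x + q * y <= r + tol for p, q, r in constraints):
            value = c[0] * x + c[1] * y
            if value > best_value:
                best_point, best_value = (x, y), value
    return best_point, best_value


if __name__ == "__main__":
    constraints = [(1, 0, 4), (0, 2, 12), (3, 2, 18), (-1, 0, 0), (0, -1, 0)]
    point, value = solve_lp_2d((3, 5), constraints)
    print(point, value)
```

For this toy data the best vertex is (2, 6) with objective value 36. The number of vertex candidates grows combinatorially with problem size, which is why real solvers use the simplex or interior-point methods instead.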

Because all linear functions are convex, linear programming problems are inherently easier to solve than nonlinear ones. The constraints of a linear program are typically inequalities, but equality constraints can also appear, and a single problem may mix both types. In this notation, Z is the objective function, x is the vector of decision variables, the constraints are written with the functions gj(x), hj(x), and lj(x), and m1 gives the number of constraints. Solving the linear program yields the values of x that optimize Z while satisfying every constraint.
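
Written out in full, such a program can be stated as below. The text names only Z, x, g_j, h_j, l_j, and m1, so assigning g_j, h_j, and l_j to ≤, ≥, and = constraints and introducing the counts m2 and m3 is an assumed convention. In a linear program every one of these functions is affine, for example g_j(x) = a_jᵀx − b_j.

```latex
\begin{aligned}
\text{maximize or minimize}\quad & Z = \mathbf{c}^{\top}\mathbf{x} \\
\text{subject to}\quad & g_j(\mathbf{x}) \le 0, && j = 1,\dots,m_1, \\
& h_j(\mathbf{x}) \ge 0, && j = 1,\dots,m_2, \\
& l_j(\mathbf{x}) = 0, && j = 1,\dots,m_3.
\end{aligned}
```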

Even straightforward linear programs can have hundreds or thousands of variables, and a Pentium-based PC or a Unix workstation can solve problems of that size. Integer programs are much harder: even small ones with only a few hundred variables can be difficult to solve. Linear programming's problem-solving capabilities are enormous, and its range of applications keeps growing.

Another approach to solving linear programs is the barrier method, which visits points in the interior of the feasible region instead of moving along its vertices as the simplex method does. Barrier methods belong to the broader family of interior-point methods, which have been around for some time: they derive from nonlinear programming techniques developed in the 1960s, were popularized by Fiacco and McCormick, and were first applied to linear programming in 1984.
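
The barrier idea can be sketched in Python for a tiny hypothetical LP: maximize 3x + 5y subject to x ≤ 4, 2y ≤ 12, 3x + 2y ≤ 18, x ≥ 0, and y ≥ 0, whose optimal vertex is (2, 6). The sketch replaces the constraints with a logarithmic barrier, minimizes −t·cᵀx − Σ log(b_i − a_iᵀx) with damped Newton steps, and then increases t so that the iterates follow the central path toward the optimum from the inside. It assumes a strictly feasible starting point and a bounded region, hand-codes the 2×2 linear algebra, and the function name and parameters are hypothetical; it is a teaching sketch, not a production interior-point solver.

```python
import math


def barrier_lp_2d(c, constraints, start, t=1.0, mu=10.0, eps=1e-6):
    """Log-barrier sketch: maximize c[0]*x + c[1]*y s.t. a1*x + a2*y <= b per (a1, a2, b).

    `start` must be strictly feasible (every slack b - a1*x - a2*y > 0).
    """
    x, y = start
    m = len(constraints)
    while True:
        # Centering: minimize phi(x) = -t*c.x - sum(log(slack)) by damped Newton.
        for _ in range(200):
            g1, g2 = -t * c[0], -t * c[1]  # gradient of -t*c.x
            h11 = h12 = h22 = 0.0
            for a1, a2, b in constraints:
                s = b - a1 * x - a2 * y  # slack, kept strictly positive
                g1 += a1 / s
                g2 += a2 / s
                h11 += a1 * a1 / s**2
                h12 += a1 * a2 / s**2
                h22 += a2 * a2 / s**2
            det = h11 * h22 - h12 * h12
            dx = -(h22 * g1 - h12 * g2) / det  # Newton direction: solve H d = -g
            dy = -(h11 * g2 - h12 * g1) / det
            lam = math.sqrt(max(-(g1 * dx + g2 * dy), 0.0))  # Newton decrement
            if lam < 1e-6:
                break
            # A step of 1/(1+lam) changes every slack by less than its current value,
            # so the iterate stays strictly inside the feasible region.
            step = 1.0 / (1.0 + lam)
            x, y = x + step * dx, y + step * dy
        if m / t < eps:  # m/t bounds the gap between a central point and the optimum
            return x, y
        t *= mu


if __name__ == "__main__":
    constraints = [(1, 0, 4), (0, 2, 12), (3, 2, 18), (-1, 0, 0), (0, -1, 0)]
    print(barrier_lp_2d((3, 5), constraints, start=(1.0, 1.0)))
```

Unlike a vertex-based method, every iterate here is strictly feasible, and the answer approaches the optimal corner only in the limit as t grows.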

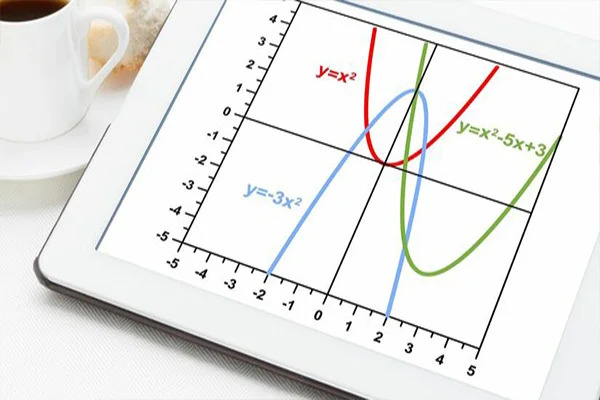

Quadratic Programming

In the quadratic programming (QP) problem, the objective is a quadratic function of the decision variables, and the constraints are all linear functions of the variables.
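
In matrix form, a QP is commonly written as below, where Q is a symmetric matrix. When Q is positive semidefinite the objective is convex and the problem is much easier to solve. The names Q, c, A, b, E, and d are conventional choices, not notation from this article.

```latex
\begin{aligned}
\min_{\mathbf{x}}\quad & \tfrac{1}{2}\,\mathbf{x}^{\top} Q\,\mathbf{x} + \mathbf{c}^{\top}\mathbf{x} \\
\text{subject to}\quad & A\mathbf{x} \le \mathbf{b}, \\
& E\mathbf{x} = \mathbf{d}.
\end{aligned}
```

Setting Q = 0 removes the quadratic term and recovers a linear program.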

A widely used quadratic programming problem is the Markowitz mean-variance portfolio optimization problem. The objective is to minimize portfolio variance, and the linear constraints impose a lower bound on the expected portfolio return, typically alongside a budget constraint requiring the weights to sum to one.
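
A small Python sketch of the Markowitz problem follows, under stated assumptions: three assets with hypothetical expected returns and covariances, short selling allowed, and the return bound treated as binding, so both constraints (expected return equals the target, weights sum to one) are equalities. Under those assumptions the optimal weights w solve the linear KKT system [2Σ Aᵀ; A 0][w; ν] = [0; b], where A stacks the expected-return vector and a row of ones; the code solves it with plain Gaussian elimination. All function names are hypothetical, and adding a no-short-selling constraint w ≥ 0 would require a genuine QP solver instead.

```python
def solve_linear(matrix, rhs):
    """Solve matrix @ z = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= factor * aug[col][k]
    z = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        z[i] = (aug[i][n] - sum(aug[i][k] * z[k] for k in range(i + 1, n))) / aug[i][i]
    return z


def min_variance_weights(mu, sigma, target):
    """Minimize w' Sigma w subject to mu' w = target and sum(w) = 1 (shorting allowed)."""
    n = len(mu)
    # KKT matrix [[2*Sigma, A^T], [A, 0]] with A = [mu; ones]
    kkt = [[2 * sigma[i][j] for j in range(n)] + [mu[i], 1.0] for i in range(n)]
    kkt.append(list(mu) + [0.0, 0.0])
    kkt.append([1.0] * n + [0.0, 0.0])
    rhs = [0.0] * n + [target, 1.0]
    return solve_linear(kkt, rhs)[:n]  # drop the two Lagrange multipliers


def portfolio_variance(w, sigma):
    """Quadratic form w' Sigma w."""
    n = len(w)
    return sum(w[i] * sigma[i][j] * w[j] for i in range(n) for j in range(n))


if __name__ == "__main__":
    mu = [0.08, 0.12, 0.15]  # hypothetical expected returns
    sigma = [[0.04, 0.006, 0.01], [0.006, 0.09, 0.02], [0.01, 0.02, 0.16]]  # hypothetical
    w = min_variance_weights(mu, sigma, target=0.11)
    print(w, portfolio_variance(w, sigma))
```

Because the objective is quadratic and the constraints are linear, optimality reduces to one linear system here; inequality constraints are what make general QP solvers necessary.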

Quadratic programming is a generalization of linear least squares: minimizing the squared residual ‖Ax − b‖² is a QP with no constraints. There are many ways to solve QP problems, and in this article we discuss two popular methods.

The first is the modified simplex method, which adapts simplex-style pivoting from linear programming to the optimality conditions of a convex QP. The second, sequential quadratic programming (SQP), uses QP as a building block for more complex nonlinear programs (NLPs): it solves a sequence of QP subproblems, each approximating the NLP around the current iterate, and combines their solutions into steps toward the optimum. SQP has been applied in areas such as finance, statistics, and chemical production. A related family, parallel quadratic programming, aims to solve QP problems with updates that can run in parallel.
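
As a rough illustration of the SQP idea, the Python sketch below applies local SQP (no line search or merit function) to a hypothetical equality-constrained problem: minimize x + y subject to x² + y² = 2, whose solution is (−1, −1). At each iterate it forms the QP subproblem min ½dᵀ∇²L d + ∇fᵀd subject to the linearized constraint ∇cᵀd + c = 0, where L = f + λc, and solves that subproblem exactly through its 3×3 KKT system. Practical SQP codes add globalization (line searches or trust regions), quasi-Newton Hessian updates, and inequality handling; none of that is included, and the function names are hypothetical.

```python
def solve3(M, rhs):
    """Solve a 3x3 linear system with Cramer's rule."""
    def det(a):
        return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
                - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
                + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))
    D = det(M)
    out = []
    for k in range(3):
        # Replace column k with the right-hand side.
        Mk = [row[:k] + [rhs[i]] + row[k + 1:] for i, row in enumerate(M)]
        out.append(det(Mk) / D)
    return out


def sqp(x, y, lam, iters=20, tol=1e-12):
    """Local SQP for: minimize f = x + y subject to c = x^2 + y^2 - 2 = 0."""
    for _ in range(iters):
        c = x * x + y * y - 2  # constraint value
        cx, cy = 2 * x, 2 * y  # constraint gradient
        h = 2 * lam  # Hessian of L = f + lam*c is h * I because f is linear
        # QP subproblem KKT system: [[H, grad c], [grad c^T, 0]] [d; lam_new] = [-grad f; -c]
        M = [[h, 0.0, cx], [0.0, h, cy], [cx, cy, 0.0]]
        dx, dy, lam = solve3(M, [-1.0, -1.0, -c])
        x, y = x + dx, y + dy
        if abs(dx) + abs(dy) < tol:
            break
    return x, y, lam


if __name__ == "__main__":
    print(sqp(-1.2, -0.8, 1.0))
```

The start must be reasonably close to the solution and the initial multiplier nonzero (with λ = 0 this KKT matrix is singular); far from the solution, local SQP can fail, which is what globalization strategies address.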

Sequential quadratic programming (SQP) became popular in the late 1970s and has since evolved into numerous specific algorithms. These methods rest on solid theoretical foundations and are used to solve many practical problems, and large-scale versions of SQP have been tested with promising results. Readers interested in numerical algorithms and optimization can find SQP covered in depth in textbooks on the subject.

To optimize a system, you must first develop a mathematical model of it, and the model's optimal solution is only useful if it is also a good solution to the real system's problem. How faithfully the model represents the system therefore determines how effective any optimization technique can be. Many examples of successful optimization projects are available online, including on YouTube, and reading books on quantitative optimization can help you decide which method best suits your particular situation.