Image Processing Algorithms

- July 26 2022

- admin

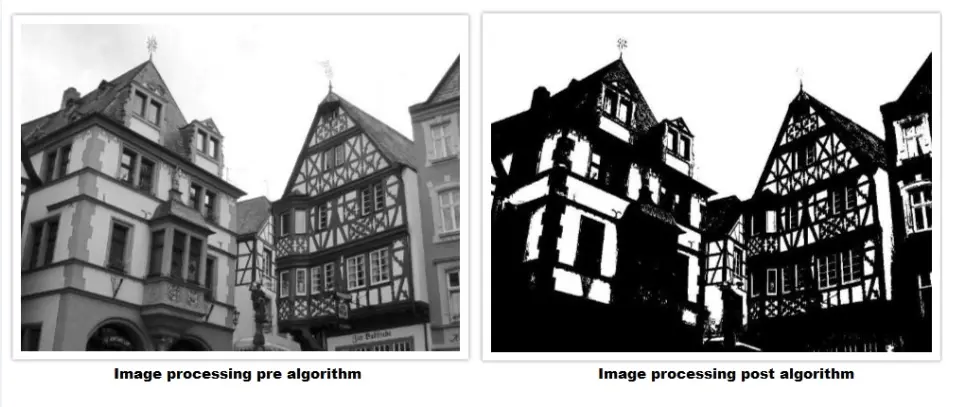

Image processing is the practice of enhancing images and extracting useful information from them. Images are treated as two-dimensional signals, and the input to the process is typically a photograph or a frame from a video. The output may be an improved image or a set of characteristics or features extracted from it.

There are many ways to process an image, but many simple pixel-level operations follow a similar pattern. First, the red, green, and blue intensities of each pixel are extracted. A new pixel is computed from these intensities and written into a new, empty image at the same location as the original. For example, a grayscale pixel can be created by averaging the pixel's three color intensities. The grayscale value can then be converted to black or white by comparing it with a threshold.
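The per-pixel pattern above can be sketched in plain Python. This is a minimal illustration under a few assumptions: an image is a list of rows of `(r, g, b)` tuples with 8-bit intensities (0 to 255), and the function names `to_grayscale` and `binarize` and the default threshold of 128 are hypothetical choices, not taken from any particular library.

```python
def to_grayscale(image):
    """Build a new image whose pixels are the (rounded-down) average of R, G and B."""
    gray = []
    for row in image:
        # Each new pixel is placed at the same (row, column) position as the original.
        gray.append([(r + g + b) // 3 for (r, g, b) in row])
    return gray


def binarize(gray, threshold=128):
    """Map each grayscale pixel to white (255) or black (0) using a fixed threshold."""
    return [[255 if value >= threshold else 0 for value in row] for row in gray]
```

For example, `binarize(to_grayscale([[(30, 60, 90)]]))` gives `[[0]]`, since the average intensity 60 falls below the threshold.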

Algorithms play a central role in digital image processing. Developers use and implement many image processing algorithms for tasks such as detection in digital images, image analysis, image reconstruction, image restoration, image enhancement, image data compression, and spectral image estimation. Sometimes an algorithm is taken straight from the textbook; in other cases it is a customized combination of several algorithms.

When processing a fully captured image, image processing algorithms are generally classified into three levels:

- Low-level methods, such as noise removal and color enhancement

- Mid-level techniques, such as binarization and compression

- High-level techniques, such as detection, segmentation, and recognition algorithms, which extract semantic information from the captured data

Types of Image Processing Algorithms

There are many types of image processing algorithms. Broadly, the techniques used to process images fall into two groups: image generation and image analysis. A basic step in either case is converting an image from its original form into a digital image with a uniform layout. Some conventional image processing algorithms are listed below:

Contrast enhancement algorithm

- Histogram equalization algorithm: uses the image's intensity histogram to improve contrast

- Adaptive histogram equalization algorithm: histogram equalization that adapts to local changes in contrast

Connected-component labeling algorithm

This algorithm finds and labels disjoint connected regions of pixels in an image.
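Histogram equalization, listed above under contrast enhancement, can be sketched as follows. This minimal version assumes an 8-bit grayscale image stored as a list of rows of integers in the range 0 to 255; the name `equalize_histogram` is hypothetical. It builds the intensity histogram, accumulates it into a cumulative distribution function (CDF), and maps each intensity through the normalized CDF so that the output spans the full intensity range.

```python
def equalize_histogram(gray, levels=256):
    """Global histogram equalization for a grayscale image (list of rows of ints)."""
    pixels = [v for row in gray for v in row]
    total = len(pixels)

    # 1. Histogram: count how many pixels have each intensity.
    hist = [0] * levels
    for v in pixels:
        hist[v] += 1

    # 2. Cumulative distribution function: running sum of the histogram.
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)

    # Smallest non-zero CDF value (the count at the darkest intensity present).
    cdf_min = next(c for c in cdf if c > 0)
    if total == cdf_min:
        # Only one intensity is present, so there is no contrast to stretch.
        return [row[:] for row in gray]

    # 3. Lookup table: map each intensity through the normalized CDF.
    lut = [round((c - cdf_min) / (total - cdf_min) * (levels - 1)) for c in cdf]
    return [[lut[v] for v in row] for row in gray]
```

For example, `equalize_histogram([[10, 10, 10], [20, 20, 30]])` returns `[[0, 0, 0], [170, 170, 255]]`. Adaptive histogram equalization applies the same idea to separate regions of the image instead of using one histogram for the whole image.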

Dithering and half-toning algorithm

Dithering and half-toning algorithms include the following:

- Error diffusion algorithm

- Floyd–Steinberg dithering algorithm

- Ordered dithering algorithm

- Riemersma dithering algorithm
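Floyd–Steinberg dithering, one of the error diffusion methods listed above, can be sketched as below. The sketch assumes an 8-bit grayscale input stored as a list of rows and a fixed threshold of 128 (both illustrative assumptions; `floyd_steinberg` is a hypothetical name). Each pixel is rounded to black or white, and the rounding error is spread to the neighboring pixels that have not been processed yet, so the average brightness of a region is approximately preserved.

```python
def floyd_steinberg(gray, threshold=128):
    """Dither a grayscale image (list of rows, values 0-255) to pure black/white."""
    height = len(gray)
    width = len(gray[0]) if height else 0
    # Work on a float copy so diffused error can accumulate fractional amounts.
    buf = [[float(v) for v in row] for row in gray]
    out = [[0] * width for _ in range(height)]

    for y in range(height):
        for x in range(width):
            old = buf[y][x]
            new = 255 if old >= threshold else 0
            out[y][x] = new
            err = old - new
            # Push the quantization error onto unprocessed neighbors using the
            # Floyd–Steinberg weights 7/16 (right), 3/16 (below left),
            # 5/16 (below) and 1/16 (below right). Error past the border is dropped.
            if x + 1 < width:
                buf[y][x + 1] += err * 7 / 16
            if y + 1 < height:
                if x > 0:
                    buf[y + 1][x - 1] += err * 3 / 16
                buf[y + 1][x] += err * 5 / 16
                if x + 1 < width:
                    buf[y + 1][x + 1] += err * 1 / 16
    return out
```

On a flat mid-dark input, the output is a scattered pattern of black and white pixels whose overall average stays close to the input level.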

Elser difference-map algorithm

The difference-map algorithm is a search algorithm for general constraint satisfaction problems. It was originally used for X-ray diffraction microscopy.
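The difference-map update is usually written as x ← x + β[P_A(f_B(x)) − P_B(f_A(x))], with f_A(x) = P_A(x) − (P_A(x) − x)/β and f_B(x) = P_B(x) + (P_B(x) − x)/β, where P_A and P_B project onto two constraint sets A and B. The toy sketch below is illustrative only: the two sets (the x-axis and the diagonal y = x in the plane, whose only common point is the origin), the starting point, the iteration count, and β = 1 are assumptions chosen so the result is easy to check, and the function names are hypothetical. Real phase-retrieval problems use far more complex sets, and convergence is not guaranteed in general.

```python
def difference_map(x, proj_a, proj_b, beta=1.0, iterations=200):
    """Iterate the difference map and return an estimate of a point in A and B.

    x: starting point as a tuple of floats.
    proj_a, proj_b: projections onto the constraint sets A and B.
    """
    def add(u, v):
        return tuple(a + b for a, b in zip(u, v))

    def sub(u, v):
        return tuple(a - b for a, b in zip(u, v))

    def scale(u, s):
        return tuple(a * s for a in u)

    for _ in range(iterations):
        pa, pb = proj_a(x), proj_b(x)
        f_a = sub(pa, scale(sub(pa, x), 1 / beta))   # f_A(x)
        f_b = add(pb, scale(sub(pb, x), 1 / beta))   # f_B(x)
        # x <- x + beta * (P_A(f_B(x)) - P_B(f_A(x)))
        x = add(x, scale(sub(proj_a(f_b), proj_b(f_a)), beta))
    # At a fixed point, P_A(f_B(x)) satisfies both constraints.
    pb = proj_b(x)
    return proj_a(add(pb, scale(sub(pb, x), 1 / beta)))


def project_onto_x_axis(p):      # hypothetical constraint A: y = 0
    return (p[0], 0.0)


def project_onto_diagonal(p):    # hypothetical constraint B: y = x
    m = (p[0] + p[1]) / 2
    return (m, m)
```

Calling `difference_map((3.0, 1.0), project_onto_x_axis, project_onto_diagonal)` converges to a point very close to (0, 0), the only point satisfying both constraints.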

Feature detection algorithm

Feature detection algorithms include:

- Marr–Hildreth algorithm: an early edge detection algorithm

- Canny edge detector algorithm: a multi-stage algorithm for detecting a wide range of edges in images

- Generalized Hough transform algorithm

- Hough transform algorithm

- SIFT (Scale-invariant feature transform) algorithm: an algorithm to detect and describe local features in images

- SURF (Speeded Up Robust Features) algorithm: a robust local feature detector

Richardson–Lucy deconvolution algorithm

This is an iterative image deblurring algorithm for the case where the point spread function is known.
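The Hough transform, listed above among the feature detection algorithms, can be sketched as a voting procedure. The sketch assumes a binary edge image (a list of rows of 0/1 values, for example the output of an edge detector), describes a line by its normal form ρ = x·cos θ + y·sin θ with x as the column and y as the row, rounds ρ to whole pixels, and uses 180 one-degree steps for θ. The name `hough_lines` and these resolutions are illustrative assumptions.

```python
import math
from collections import Counter


def hough_lines(edges, theta_steps=180):
    """Vote for straight lines in a binary edge image (list of rows of 0/1).

    Returns ((theta_degrees, rho), votes) for the most-voted line,
    or None if the image has no edge pixels.
    """
    thetas = [math.pi * k / theta_steps for k in range(theta_steps)]
    accumulator = Counter()
    for y, row in enumerate(edges):
        for x, value in enumerate(row):
            if not value:
                continue
            # Each edge pixel votes for every (theta, rho) line passing through it.
            for k, theta in enumerate(thetas):
                rho = round(x * math.cos(theta) + y * math.sin(theta))
                accumulator[(k, rho)] += 1
    if not accumulator:
        return None
    (k, rho), votes = accumulator.most_common(1)[0]
    return (k * 180 / theta_steps, rho), votes
```

For a 10 by 100 image whose row 5 is entirely set, the strongest line is found at θ = 90 degrees and ρ = 5 with 100 votes. The generalized Hough transform extends the same voting idea to arbitrary shapes.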

Blind deconvolution algorithm

Like the Richardson–Lucy deconvolution algorithm, blind deconvolution is used to deblur images, but it applies when the point spread function is unknown.
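For the known-PSF case, the Richardson–Lucy update multiplies the current estimate by a correction factor: the observed data divided by the re-blurred estimate, then convolved with the flipped point spread function (PSF). The sketch below is a one-dimensional simplification for illustration (images are 2-D); it assumes zero padding at the borders, a flat starting estimate, and a fixed iteration count, and the names `convolve_same` and `richardson_lucy` are hypothetical. Blind deconvolution methods also estimate the PSF itself, which this sketch does not attempt.

```python
def convolve_same(signal, kernel):
    """1-D convolution with zero padding; output has the same length as signal."""
    n, m = len(signal), len(kernel)
    half = m // 2
    out = []
    for i in range(n):
        total = 0.0
        for j in range(m):
            k = i + half - j          # signal index paired with kernel tap j
            if 0 <= k < n:
                total += signal[k] * kernel[j]
        out.append(total)
    return out


def richardson_lucy(observed, psf, iterations=100, eps=1e-12):
    """Deblur a 1-D non-negative signal with a known PSF."""
    n = len(observed)
    estimate = [sum(observed) / n] * n     # flat, positive starting guess
    psf_flipped = psf[::-1]
    for _ in range(iterations):
        blurred = convolve_same(estimate, psf)
        # Ratio of the data to the current re-blurred estimate (eps avoids /0).
        ratio = [o / max(b, eps) for o, b in zip(observed, blurred)]
        correction = convolve_same(ratio, psf_flipped)
        estimate = [e * c for e, c in zip(estimate, correction)]
    return estimate
```

Blurring a single spike `[0, 0, 10, 0, 0]` with the PSF `[0.2, 0.6, 0.2]` gives `[0, 2, 6, 2, 0]`; running `richardson_lucy` on that result concentrates the signal back toward the center sample.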

Seam carving algorithm

The seam carving algorithm is a content-aware image resizing algorithm.
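A minimal sketch of removing one vertical seam from a grayscale image is shown below. It assumes a simple energy function (the sum of absolute horizontal and vertical intensity differences), finds the lowest-energy top-to-bottom path with dynamic programming, where each step moves at most one column left or right, and deletes that path. The function names are hypothetical; practical implementations may use other energy functions and repeat the step to remove many seams.

```python
def energy_map(gray):
    """Simple gradient energy: |horizontal difference| + |vertical difference|."""
    h, w = len(gray), len(gray[0])
    energy = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            left, right = gray[y][max(x - 1, 0)], gray[y][min(x + 1, w - 1)]
            up, down = gray[max(y - 1, 0)][x], gray[min(y + 1, h - 1)][x]
            energy[y][x] = abs(right - left) + abs(down - up)
    return energy


def find_vertical_seam(energy):
    """Cheapest top-to-bottom path; returns the column to remove in each row."""
    h, w = len(energy), len(energy[0])
    cost = [energy[0][:]]
    for y in range(1, h):
        # Each cell adds the cheapest of the (up to) three cells above it.
        cost.append([energy[y][x] + min(cost[y - 1][max(x - 1, 0):min(x + 2, w)])
                     for x in range(w)])
    # Backtrack from the cheapest bottom cell (leftmost on ties).
    seam = [min(range(w), key=lambda x: cost[h - 1][x])]
    for y in range(h - 2, -1, -1):
        x = seam[-1]
        candidates = range(max(x - 1, 0), min(x + 2, w))
        seam.append(min(candidates, key=lambda c: cost[y][c]))
    seam.reverse()
    return seam


def remove_vertical_seam(gray):
    """Return a copy of the image that is one column narrower."""
    seam = find_vertical_seam(energy_map(gray))
    return [row[:x] + row[x + 1:] for row, x in zip(gray, seam)]
```

Because the seam follows low-energy (flat) areas, the sharp edge between dark and bright regions is kept while the image is narrowed.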

Segmentation algorithm

Segmentation algorithms partition a digital image into two or more regions. They include:

- GrowCut algorithm

- Random walker algorithm

- Region growing algorithm

- Watershed transformation algorithm
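Region growing, one of the segmentation methods listed above, can be sketched as a breadth-first expansion from a seed pixel. This version assumes a grayscale image stored as a list of rows, 4-connectivity, and a simple acceptance rule (intensity within `tolerance` of the seed pixel). The name `region_grow`, the rule, and the parameters are illustrative assumptions, since region growing implementations differ in how they decide which neighbors to add.

```python
from collections import deque


def region_grow(gray, seed, tolerance):
    """Return the set of (row, col) pixels 4-connected to `seed` whose
    intensity differs from the seed pixel's intensity by at most `tolerance`."""
    h, w = len(gray), len(gray[0])
    sy, sx = seed
    seed_value = gray[sy][sx]
    region = {seed}
    queue = deque([seed])
    while queue:
        y, x = queue.popleft()
        # Check the four direct neighbors (up, down, left, right).
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
            if (0 <= ny < h and 0 <= nx < w and (ny, nx) not in region
                    and abs(gray[ny][nx] - seed_value) <= tolerance):
                region.add((ny, nx))
                queue.append((ny, nx))
    return region
```

Running this once per unlabeled seed splits an image into regions of similar intensity.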

Apart from the algorithms mentioned above, companies also create customized algorithms to address their own needs, either from scratch or by combining existing algorithms. As computer technology has evolved, image processing algorithms have given researchers and developers many opportunities to investigate, classify, characterize, and analyze large collections of images.

- admin: https://www.pre-scient.com/author/webwideit/
