Optimization Problems – Linear Programming and Quadratic Programming

Students usually meet optimization problems as a function that must be maximized or minimized. They use calculus to find the function's critical points and then determine whether each one is a maximum or a minimum. For example, they may need to select the dimensions of a cylindrical can that holds a fixed volume of V cm³ of liquid, such as 355 cm³. They must also consider the cost of the metal and determine the dimensions that minimize the amount of metal used in the can. Students usually start by sketching the situation, then use the sketch to set up the function and find the best solution.

There are different types of optimization problems. A few simple ones do not require formal optimization, such as problems with obvious answers or with no decision variables. In most cases, however, a mathematical solution is necessary to reach an optimal result. The objective is often to reduce cost or to minimize risk. A problem can also be multi-objective and involve several decisions.

Linear Programming

In linear programming (LP) problems, the objective and all of the constraints are linear functions of the decision variables. Because all linear functions are convex, linear programming problems are inherently easier to solve than nonlinear problems. A linear program has a set of constraints, typically inequalities; in some cases the constraints are a mixture of equalities and inequalities. The problem is written in terms of Z, the objective function, and x, the vector of decision variables. The constraints are expressed through functions g_j(x), h_j(x), and l_j(x), the number of constraints is denoted m_1, and solving the linear program yields the optimal values of x. Practical linear programs routinely have hundreds or thousands of variables, whereas integer programs with only a few hundred variables can already be hard to solve.
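To make the idea of a linear objective with linear inequality constraints concrete, here is a minimal sketch for a two-variable LP. It relies on the fact that a bounded, feasible LP attains its optimum at a vertex of the feasible region, so it simply enumerates the intersections of constraint boundaries. The function name `solve_lp_2d` and the example numbers are illustrative assumptions, not taken from the article, and this brute-force approach is only sensible for tiny problems.

```python
from itertools import combinations


def solve_lp_2d(c, constraints, tol=1e-9):
    """Maximize c[0]*x + c[1]*y subject to a*x + b*y <= r for each (a, b, r).

    Assumes the feasible region is non-empty and bounded, so the optimum
    lies at a vertex (an intersection of two constraint boundaries).
    Returns (objective, x, y), or None if no feasible vertex is found.
    """
    best = None
    for (a1, b1, r1), (a2, b2, r2) in combinations(constraints, 2):
        det = a1 * b2 - a2 * b1
        if abs(det) < tol:
            continue  # parallel boundaries never intersect in a single point
        # Cramer's rule for the 2x2 system of the two boundary lines
        x = (r1 * b2 - r2 * b1) / det
        y = (a1 * r2 - a2 * r1) / det
        # Keep the candidate only if it satisfies every constraint
        if all(a * x + b * y <= r + tol for a, b, r in constraints):
            z = c[0] * x + c[1] * y
            if best is None or z > best[0]:
                best = (z, x, y)
    return best


# Illustrative problem: maximize Z = 3x + 5y
# subject to x <= 4, 2y <= 12, 3x + 2y <= 18, x >= 0, y >= 0
example_constraints = [
    (1, 0, 4),
    (0, 2, 12),
    (3, 2, 18),
    (-1, 0, 0),  # x >= 0 written as -x <= 0
    (0, -1, 0),  # y >= 0 written as -y <= 0
]
print(solve_lp_2d((3, 5), example_constraints))  # (36.0, 2.0, 6.0)
```

Production solvers use the simplex method or interior-point methods instead of enumerating vertices, because the number of vertices grows very quickly with the number of variables and constraints.
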
Linear programming does not require specialized hardware: an ordinary desktop PC handles it well, and a Unix workstation will do the same task. The same machines can be used for integer programming, although those problems typically take longer to solve. The problem-solving capabilities of linear programs are enormous, and the number of applications continues to grow.

Another approach to solving linear programs is the barrier method, which visits points in the interior of the feasible region rather than moving along its boundary as the simplex method does. Both interior-point and barrier methods have been around for some time. The interior-point method is derived from nonlinear programming techniques that were developed in the 1960s and popularized by Fiacco and McCormick; they were first applied to linear programming in 1984.

Quadratic Programming

In the quadratic programming (QP) problem, the objective is a quadratic function of the decision variables, and the constraints are all linear functions of the variables. A widely used quadratic programming problem is the Markowitz mean-variance portfolio optimization problem. The objective is the portfolio variance, and the linear constraints dictate a lower bound for portfolio return.

Quadratic programming generalizes linear least squares: a least-squares problem is a QP whose objective is a sum of squared linear terms. The term refers to optimizing a quadratic function, not to solving a quadratic equation. There are many ways to solve quadratic programs, and two popular methods are worth mentioning. The first is the modified simplex method, which adapts the simplex method of linear programming to a quadratic objective. The second, sequential quadratic programming, is used to solve more complex nonlinear programs (NLPs): it solves a sequence of individual QP subproblems and combines their solutions to approach the solution of the more complicated problem. Sequential quadratic programming is used in finance, statistics, and chemical production, and it can also be applied to problems with more than one objective function.
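The mean-variance portfolio problem mentioned above can be sketched for the smallest possible case, two assets. With the weights summing to 1, the portfolio variance is a convex quadratic in a single weight, and the return lower bound is a linear constraint that restricts that weight to an interval, so the constrained minimum is the unconstrained minimizer clipped to the interval. The function name, the no-short-selling bound (weights between 0 and 1), and the numbers are assumptions made for illustration only.

```python
def min_variance_two_assets(mu, var, cov, target_return):
    """Two-asset mean-variance QP (illustrative sketch).

    Minimize  w1^2*var[0] + 2*w1*w2*cov + w2^2*var[1]
    subject to w1 + w2 = 1, 0 <= w1 <= 1 (assumed: no short selling),
    and mu[0]*w1 + mu[1]*w2 >= target_return.
    Returns (w1, w2, portfolio_variance).
    """
    mu1, mu2 = mu
    v1, v2 = var
    curvature = v1 + v2 - 2 * cov
    if curvature <= 0:
        raise ValueError("objective is not strictly convex in w1")

    # Unconstrained minimizer of the variance after substituting w2 = 1 - w1
    w_free = (v2 - cov) / curvature

    # Feasible interval for w1 from the bounds and the return constraint:
    # w1*(mu1 - mu2) >= target_return - mu2
    lo, hi = 0.0, 1.0
    diff = mu1 - mu2
    if diff > 0:
        lo = max(lo, (target_return - mu2) / diff)
    elif diff < 0:
        hi = min(hi, (target_return - mu2) / diff)
    elif mu2 < target_return:
        raise ValueError("target return is not attainable")
    if lo > hi:
        raise ValueError("target return is not attainable")

    # For a convex quadratic in one variable, clipping gives the optimum
    w1 = min(max(w_free, lo), hi)
    w2 = 1.0 - w1
    variance = w1 * w1 * v1 + 2 * w1 * w2 * cov + w2 * w2 * v2
    return w1, w2, variance


# Hypothetical data: expected returns 10% and 4%, variances 0.04 and 0.01,
# zero covariance, and a required portfolio return of at least 6%.
print(min_variance_two_assets((0.10, 0.04), (0.04, 0.01), 0.0, 0.06))
```

In this example the return constraint is active: the unconstrained minimum-variance portfolio would put 20% in the first asset, but the 6% return floor pushes that weight up to one third. With more than two assets the problem no longer reduces to one variable and a general QP solver is needed.
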
A variation of the sequential quadratic programming algorithm is known as parallel quadratic programming, which involves solving multiple-objective quadratic-linear problems simultaneously. Sequential quadratic programming (SQP) became popular in the late 1970s and has evolved into numerous specific algorithms. The methods rest on solid theoretical foundations and are used to solve numerous practical problems. Many large-scale versions of SQP have been tested and have shown promising results.

To optimize a system, a mathematical model must first be developed, and the solution obtained from the model should be an actual solution to the system's problem. How faithfully the model represents the system determines how effective the optimization techniques are. Many examples of successful optimization projects can be found on YouTube, and books on numerical algorithms and quantitative optimization cover the techniques in more depth and can help you decide which method is best for your particular situation.

Optimization Problems and Techniques

Table of contents: Optimization Problems; Linear and Quadratic Programming; Types of Optimization Techniques

In mathematics and computer science, an optimization problem is the problem of finding the best solution out of all feasible solutions. Using optimization to solve design problems provides unique insight. The model can compare the current design to the best possible one, and it includes information about limitations and about the implied costs of arbitrary rules and policy decisions. A well-designed optimization model can also aid what-if analysis, revealing where improvements can be made or where trade-offs may be needed. The application of optimization to engineering problems spans multiple disciplines.

Optimization is divided into different categories. The first is a statistical technique, while the second is a probabilistic method. A mathematical algorithm is used to evaluate a set of candidate models and choose the best solution. The problem domain is specified by constraints, such as the range of possible values for a function, and function evaluations must be performed to find the optimum. An optimal solution has minimal error; in the ideal case that error is zero.

Optimization Problems

There are different types of optimization problems. A few simple ones do not require formal optimization, such as problems with obvious answers or with no decision variables. In most cases, however, a mathematical solution is necessary to reach an optimal result. The objective is often to reduce cost or to minimize risk. A problem can also be multi-objective and involve several decisions. An optimization problem has three main elements: an objective, variables, and constraints.
Each variable can take different values, and the aim is to find the optimal value for each one. The objective is the desired result or goal of the problem. The following paragraphs walk through the kinds of optimization problem that arise as these elements vary.

Continuous Optimization versus Discrete Optimization

Models with discrete variables are discrete optimization problems, while models with continuous variables are continuous optimization problems. Continuous optimization problems are generally easier to solve than discrete ones. A discrete optimization problem looks for an object such as an integer, permutation, or graph from a countable set. However, improvements in algorithms, coupled with advances in computing technology, have increased the size and complexity of the discrete optimization problems that can be solved efficiently. Continuous optimization algorithms are also essential in discrete optimization, because many discrete optimization algorithms generate a series of continuous sub-problems.

Unconstrained Optimization versus Constrained Optimization

An essential distinction is between problems that have no constraints on the variables and problems that do. Unconstrained optimization problems arise directly in many practical applications and also in the reformulation of constrained optimization problems. Constrained optimization problems appear in applications with explicit constraints on the variables. They are further divided according to the nature of the constraints (linear, nonlinear, or convex) and the smoothness of the functions (differentiable or non-differentiable).

None, One, or Many Objectives

Although most optimization problems have a single objective function, some have no objective function at all and others have several.
Multi-objective optimization problems arise in engineering, economics, and logistics. Often, problems with multiple objectives are reformulated as single-objective problems.

Deterministic Optimization versus Stochastic Optimization

In deterministic optimization, the data for the given problem is known accurately. Sometimes, however, the data cannot be known precisely. A simple measurement error can be one reason. Another is that some data describe the future and therefore cannot be known with certainty. When this uncertainty is incorporated into the model, the problem is called stochastic optimization.

Two important classes of optimization problems are the following.

Linear Programming: In linear programming (LP) problems, the objective and all of the constraints are linear functions of the decision variables. Because all linear functions are convex, linear programming problems are inherently easier to solve than nonlinear problems.

Quadratic Programming: In the quadratic programming (QP) problem, the objective is a quadratic function of the decision variables, and the constraints are all linear functions of the variables. A widely used quadratic programming problem is the Markowitz mean-variance portfolio optimization problem. The objective is the portfolio variance, and the linear constraints dictate a lower bound for portfolio return.

Linear and Quadratic programming

Optimization is a part of everyday life. We all want to make the most of our available time and use it productively, and optimization is applied to everything from time management to supply chain problems. As noted earlier, optimization means finding the best possible solution out of all feasible solutions. Two of its main branches are linear programming and quadratic programming, which are described in turn.
Linear Programming

Linear programming is a simple technique for finding the best outcome, or optimum point, in a complex situation that is described through linear relationships. The actual relationships could be much more complicated, but they can often be simplified into linear ones. Linear programming is widely used in optimization for several reasons.

Quadratic Programming

Quadratic programming is the method of solving a particular kind of optimization problem: minimizing or maximizing a quadratic objective function subject to one or more linear constraints. Quadratic programming is a special case of nonlinear programming. The objective function in a QP may contain bilinear terms or polynomial terms of up to second order. The constraints are usually linear and can be both equalities and inequalities. Quadratic programming is also widely used in optimization.

Types of Optimization Techniques

There are many types of mathematical and computational optimization techniques. An essential step is to categorize the optimization model, since the algorithms used for solving optimization problems are tailored to the nature of the problem. Integer programming, for example, is a form of mathematical programming. Problems of this kind can be traced back to Archimedes, who described the problem of determining the composition of a herd of cattle. Advances in computational codes and theoretical research

Mesh Quality

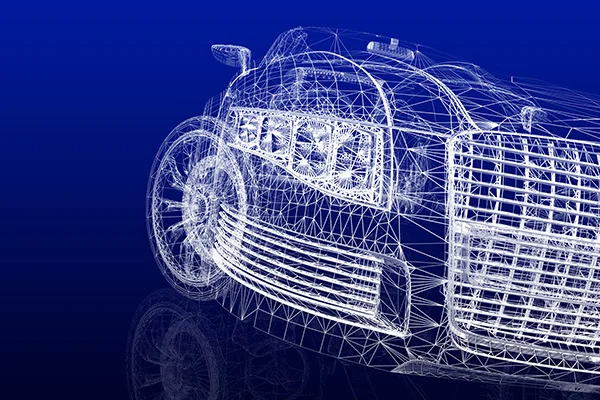

The quality of a mesh plays a significant role in the accuracy and stability of the numerical computation. Regardless of the type of mesh used in your domain, checking its quality is a must. Good meshes are the ones that produce results with an acceptable level of accuracy, assuming that all other inputs to the model are accurate. When evaluating whether the quality of the mesh is sufficient for the problem being modeled, it is important to consider attributes such as mesh element distribution, cell shape, smoothness, and flow-field dependency.

Element Distribution

Meshes are made of elements (vertices, edges, and faces). The extent to which salient features such as shear layers, separated regions, shock waves, boundary layers, and mixing zones are resolved depends on the density and distribution of mesh elements. In certain cases, poorly resolved critical regions can dramatically affect results. For example, the prediction of separation due to an adverse pressure gradient depends heavily on the resolution of the boundary layer upstream of the point of separation.

Cell Quality

The quality of individual cells has a crucial impact on the accuracy of the entire mesh. Cell quality is assessed through three measures: orthogonal quality, aspect ratio, and skewness.

Orthogonal Quality: An important indicator of mesh quality is the orthogonal quality. The worst cells have an orthogonal quality close to 0 and the best cells have an orthogonal quality close to 1.

Aspect Ratio: Aspect ratio is another important indicator of mesh quality. It is a measure of how stretched the cell is. It is computed as the ratio of the maximum value to the minimum value of any of the following distances: the normal distances between the cell centroid and the face centroids, and the distances between the cell centroid and the nodes.
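The aspect-ratio definition above can be sketched for a single two-dimensional cell, where the "faces" are the edges of a polygon. This is an illustrative reading of the definition, not any particular solver's implementation; the function names are hypothetical, and the cell is assumed to be a non-degenerate convex polygon with its vertices listed in order.

```python
import math


def polygon_centroid(points):
    """Area centroid of a simple polygon given as an ordered list of (x, y)."""
    twice_area = 0.0
    cx = cy = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:] + points[:1]):
        cross = x0 * y1 - x1 * y0
        twice_area += cross
        cx += (x0 + x1) * cross
        cy += (y0 + y1) * cross
    return cx / (3.0 * twice_area), cy / (3.0 * twice_area)


def aspect_ratio(points):
    """Max/min over centroid-to-face normal distances and centroid-to-node distances."""
    cx, cy = polygon_centroid(points)
    distances = []
    for (x0, y0), (x1, y1) in zip(points, points[1:] + points[:1]):
        # Face (edge) centroid and unit normal of the edge
        mx, my = (x0 + x1) / 2.0, (y0 + y1) / 2.0
        ex, ey = x1 - x0, y1 - y0
        length = math.hypot(ex, ey)
        nx, ny = ey / length, -ex / length
        # Normal distance from the cell centroid to the face centroid
        distances.append(abs((mx - cx) * nx + (my - cy) * ny))
        # Distance from the cell centroid to the node
        distances.append(math.hypot(x0 - cx, y0 - cy))
    return max(distances) / min(distances)


square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
stretched = [(0.0, 0.0), (4.0, 0.0), (4.0, 1.0), (0.0, 1.0)]
print(aspect_ratio(square), aspect_ratio(stretched))
```

Under this definition a perfect square scores about 1.41 rather than 1, because the corner distance is always larger than the face distance; what matters is how much the value grows as the cell is stretched.
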
Skewness: Skewness can be defined as the difference between the shape of the cell and the shape of an equilateral cell of equivalent volume. Highly skewed cells can decrease accuracy and destabilize the solution.

Smoothness

Smoothness relates to truncation error, which is the difference between the partial derivatives in the equations and their discrete approximations. Rapid changes in cell volume between adjacent cells result in larger truncation errors. Smoothness can be improved by refining the mesh based on the change in cell volume or the gradient of cell volume.

Flow-Field Dependency

The effect of resolution, smoothness, and cell shape on the accuracy and stability of the solution process depends on the flow field being simulated. For example, skewed cells can be acceptable in benign flow regions, but they can be very damaging in regions with strong flow gradients.

Correct Mesh Size

Choosing the mesh size is one of the most common difficulties in setting up an analysis. Elements that are too large yield inaccurate results. On the other hand, very small elements make the computation so expensive that it takes a long time to get any result. It is rarely obvious in advance where a given mesh size sits between these extremes, so it is worth running the chosen analysis with several mesh sizes and comparing the results. Because a finer mesh means significantly more computing time, it is important to strike a balance between computing time and accuracy. In places where large deformations, stresses, or instabilities occur, locally reducing the element size greatly increases accuracy without much extra computing time.
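The smoothness criterion described above, rapid changes in cell volume between adjacent cells, can be checked with a very small script. The sketch below assumes a one-dimensional row of cells given by their volumes; the function names and the threshold of 1.5 are hypothetical choices for illustration, not recommended values.

```python
def volume_ratios(volumes):
    """Ratio (>= 1) of the larger to the smaller volume for each adjacent pair."""
    return [max(a, b) / min(a, b) for a, b in zip(volumes, volumes[1:])]


def flag_abrupt_changes(volumes, max_ratio=1.5):
    """Indices i where the volume jump between cell i and cell i+1 exceeds max_ratio.

    max_ratio is an assumed, illustrative threshold.
    """
    return [i for i, ratio in enumerate(volume_ratios(volumes)) if ratio > max_ratio]


# Hypothetical cell volumes with one abrupt jump between cells 2 and 3
cell_volumes = [1.0, 1.1, 1.2, 3.0, 3.1]
print(flag_abrupt_changes(cell_volumes))  # [2]
```

The flagged pairs are natural candidates for refinement based on the change in cell volume.
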
