Evaluating ROI: Measuring the Impact of Additive Manufacturing Implementation

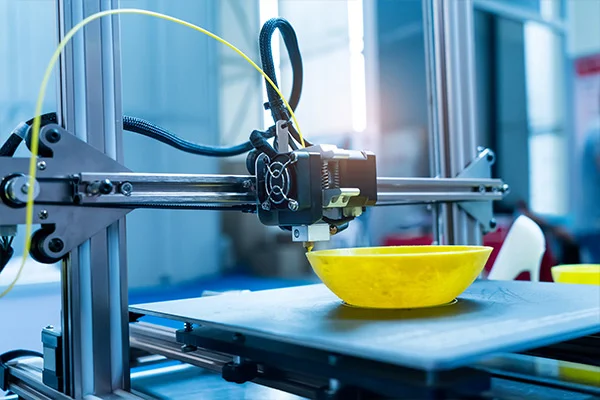

Table of contents: Introduction; Understanding Additive Manufacturing; Challenges and Considerations in Additive Manufacturing Implementation; Future Trends and the Evolving Landscape of Additive Manufacturing ROI; Conclusion

Introduction

Additive manufacturing, often known as 3D printing, has transformed the industrial sector by making it possible to produce complex parts with remarkable speed and efficiency. As the technology matures and becomes more widely adopted, businesses are increasingly concerned with assessing the return on investment (ROI) of additive manufacturing solutions. This article looks at several aspects of additive manufacturing implementation and at how organizations can evaluate their ROI effectively.

Understanding Additive Manufacturing

Additive manufacturing is a process that builds three-dimensional objects by adding successive layers of material based on a digital model. This technology offers numerous advantages over traditional manufacturing methods, including reduced lead times, lower costs, design flexibility, and the ability to produce complex geometries. These benefits have led a wide range of industries, including aerospace, automotive, healthcare, and consumer products, to incorporate additive manufacturing into their operations.

Measuring ROI in Additive Manufacturing

Calculating the return on investment requires assessing the costs, benefits, and risks of implementing additive manufacturing. When assessing its effects on your company, keep the following points in mind.

Cost Analysis

Begin by analyzing the costs associated with implementing additive manufacturing. These include the cost of acquiring 3D printers, software, materials, and training. Additionally, consider the costs of maintaining and operating the equipment, as well as any required facility modifications.
You can estimate the financial impact by comparing these costs with the potential savings from additive manufacturing.

Operational Efficiency

Additive manufacturing can considerably increase operational efficiency by shortening lead times and simplifying supply chains. Analyze where it could save time and money in your production processes. For example, consolidating several parts into a single 3D-printed component removes assembly steps and reduces material waste.

Design Optimization

Another key advantage of additive manufacturing is design flexibility. Traditional manufacturing methods often limit complex geometries, whereas additive manufacturing allows intricate designs with minimal constraints. Assess how this design freedom can enhance product performance, reduce material usage, and enable innovation, and consider the potential cost savings and revenue resulting from optimized designs.

Customization and Personalization

Additive manufacturing enables customization and personalization at scale. Evaluate the market demand for customized products and assess how additive manufacturing can help meet it. Customized products often command higher prices, increasing revenue potential.

Supply Chain Optimization

Because it supports decentralized, on-demand production, additive manufacturing has the potential to upend conventional supply chains. Consider the effects of localized manufacturing, lower transportation costs, and inventory management benefits, as well as shorter lead times and the ability to react swiftly to demand fluctuations.

Risk Management

Like any investment, additive manufacturing comes with inherent risks. Evaluate the potential risks of implementing the technology, such as operational challenges, quality control, intellectual property concerns, and regulatory compliance.
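The cost analysis and benefit categories above can be combined into a first-pass ROI and payback estimate. The sketch below is a minimal, undiscounted model that rests on several assumptions. The class name `AMBusinessCase` and its fields are hypothetical. The flat `risk_haircut` (the fraction of yearly benefit assumed lost to the risks just listed) is a simplification, not a standard method. The figures in the usage lines are placeholders, not industry data.

```python
from dataclasses import dataclass


@dataclass
class AMBusinessCase:
    """Hypothetical, undiscounted business case for an AM rollout."""
    upfront_cost: float           # printers, software, training, facility changes
    annual_operating_cost: float  # materials, maintenance, operators
    annual_benefit: float         # savings + extra revenue credited to AM
    risk_haircut: float = 0.0     # assumed share of benefit lost to risks (0..1)

    def effective_annual_benefit(self) -> float:
        # Scale the expected benefit down by the assumed risk share.
        return self.annual_benefit * (1.0 - self.risk_haircut)

    def net_annual_gain(self) -> float:
        return self.effective_annual_benefit() - self.annual_operating_cost

    def roi(self, years: int) -> float:
        """Simple ROI = (total benefit - total cost) / total cost over `years`."""
        total_cost = self.upfront_cost + self.annual_operating_cost * years
        total_benefit = self.effective_annual_benefit() * years
        return (total_benefit - total_cost) / total_cost

    def payback_years(self) -> float:
        """Years until the cumulative net gain covers the upfront cost."""
        gain = self.net_annual_gain()
        return float("inf") if gain <= 0 else self.upfront_cost / gain


if __name__ == "__main__":
    case = AMBusinessCase(upfront_cost=250_000, annual_operating_cost=40_000,
                          annual_benefit=140_000, risk_haircut=0.2)
    print(f"5-year ROI: {case.roi(5):.1%}")
    print(f"Payback: {case.payback_years():.1f} years")
```

With these placeholder inputs, the sketch reports a five-year ROI of about 24% and a payback of about 3.5 years. Discounting future cash flows (NPV) would be a natural refinement. It is left out here so the arithmetic stays visible.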
Mitigate these risks by developing robust strategies and accounting for them in your ROI calculations.

Quantitative and Qualitative Factors

Take both quantitative and qualitative factors into account when assessing the ROI of additive manufacturing. Quantitative metrics include cost reductions, revenue growth, increased productivity, and reduced waste. Qualitative metrics include improved customer satisfaction, a stronger competitive position, and the capacity to develop novel products.

Market Expansion and New Business Opportunities

Additive manufacturing opens up new business opportunities and possibilities for market expansion. Evaluate how implementing the technology could allow you to enter new markets, serve niche customer segments, or offer unique products and services. Consider the potential revenue growth from tapping into previously unexplored markets or from business models built on additive manufacturing capabilities.

Challenges and Considerations in Additive Manufacturing Implementation

Implementing additive manufacturing also brings difficulties. Although the technology has many advantages, businesses should be aware of obstacles that could reduce the overall return on investment. Consider the following major challenges.

Initial Investment

The initial investment required for additive manufacturing can be substantial. Organizations need to assess whether they have the budget to acquire the equipment, software, and training required for a successful implementation. Conduct a thorough cost analysis and consider alternative financing options if needed.

Material Selection and Quality Control

Additive manufacturing relies on a variety of materials, each with its own properties. Organizations must carefully evaluate material options to ensure they suit the intended applications.
Maintaining consistent quality control throughout the additive manufacturing process is crucial to avoid defects or deviations in the final products.

Intellectual Property and Data Security

Additive manufacturing relies on digital models and designs, which can be vulnerable to intellectual property theft or unauthorized replication. Organizations must implement robust security measures to protect their intellectual property and ensure data integrity throughout the additive manufacturing workflow.

Future Trends and the Evolving Landscape of Additive Manufacturing ROI

As additive manufacturing continues to evolve, several future trends will shape the ROI landscape. Understanding these trends can help organizations assess the long-term impact of additive manufacturing implementation. Here are some noteworthy trends:

Conclusion

As additive manufacturing continues to reshape the manufacturing landscape, evaluating the ROI of its implementation is crucial for organizations considering the technology. This involves weighing the challenges and considerations, examining real-world case studies, and understanding future trends in the additive manufacturing landscape. By carefully assessing the costs, benefits, and risks of implementation, organizations can make informed decisions that maximize ROI and leverage the full potential of this transformative technology. With ongoing advancements and increasing adoption, additive manufacturing will continue to reshape industries, drive innovation, and deliver substantial returns for forward-thinking organizations.

Interested in transforming your manufacturing processes? Discover the impact of additive manufacturing implementation on your business. Evaluate ROI, explore case studies, and stay ahead of the evolving landscape. Embrace the

Optimising Additive Manufacturing: Unleashing the Power of Slicing Algorithms

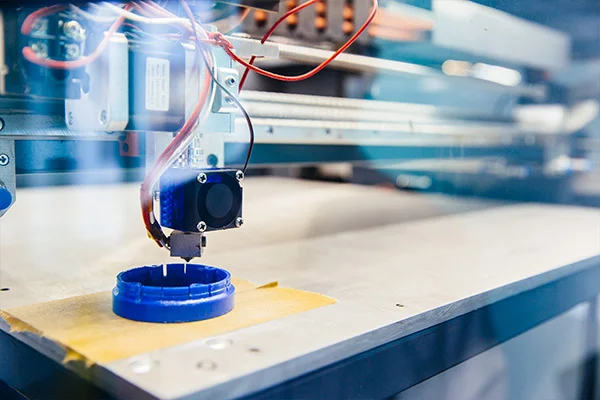

Table of contents: Introduction; Slicing algorithms: An understanding; Types of Slicing Algorithms; Optimisation Techniques; Conclusion

Introduction

Additive manufacturing, often known as 3D printing, has transformed the industrial sector by making it possible to produce intricate geometries more effectively and affordably. The slicing algorithm is an essential part of the additive manufacturing process: it decomposes a 3D model into a sequence of 2D layers so that the printer can deposit material layer by layer. This article explores the significance and varieties of slicing algorithms and their effects on the quality, speed, and accuracy of the manufacturing process. We will also review the optimisation methods used to improve slicing algorithm performance for better additive manufacturing results.

Slicing algorithms: An understanding

At its core, additive manufacturing involves the layer-by-layer construction of a physical object based on a digital model. The slicing algorithm serves as the bridge between the digital model and the physical part. It slices the 3D model into a series of 2D layers and then translates them into machine instructions for the 3D printer. Each layer is a horizontal cross-section of the object that the printer will fabricate.

Slicing algorithms consider several crucial factors, including layer thickness, infill density, support structures, and the printing path. Layer thickness controls the print’s vertical resolution, while infill density specifies how much material fills the interior of the part. Support structures stabilise overhanging elements during printing, and the printing path defines the order in which the printer deposits material.

Types of Slicing Algorithms

In additive manufacturing, a variety of slicing algorithms are used.
Each method has advantages and disadvantages, and the choice depends on the specific requirements of the part being printed. Several popular slicing algorithms are listed below.

Uniform Slicing

Uniform slicing divides the model into layers of a single, constant thickness. It is simple and predictable, but the same thickness is applied to fine details and plain regions alike.

Adaptive Slicing

This algorithm dynamically adjusts the layer thickness based on the object’s geometry. Thicker layers are used where the geometry allows it without visible error, and thinner layers where there are fine or sharply changing features. Adaptive slicing reduces the staircase effect and improves surface quality.

Tree-like Slicing

Tree-like slicing methods optimise the printing path. Rather than strictly following a layer-by-layer sequence, these algorithms construct a hierarchical structure that lets the printer optimise its movements and reduce travel time. As a result, printing takes less time and is more efficient overall.

Non-Manifold Slicing

Non-manifold slicing algorithms can handle complex geometries with overlapping surfaces and non-manifold edges, which is crucial for successful 3D printing. These algorithms detect and rectify inconsistencies in the 3D model. This lets them translate intricate digital models into accurate physical parts.

Optimisation Techniques

Researchers and engineers have developed various techniques to get the most out of slicing algorithms. These methods aim to improve the precision, speed, and quality of the manufacturing process. Here are a few noteworthy optimisation techniques.

Adaptive Layer Thickness

As noted above, adaptive slicing varies layer thickness according to the object’s geometry.
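One widely used way to make “based on the object’s geometry” concrete is to limit the cusp height, which is the staircase step left on a sloped surface. This is not necessarily the criterion any particular slicer uses. For a layer of thickness t on a surface whose unit normal has vertical component n_z, the cusp height is roughly t·|n_z|. The largest allowed thickness is therefore cusp_max / |n_z|, clamped to the printer’s range. Near-vertical walls get thick layers, and shallow slopes get thin ones.

The sketch below compares this rule with uniform slicing on a sphere. The sphere was chosen only because its |n_z| at height z has a closed form. The function names and parameter values are illustrative assumptions. A real slicer would check the steepest facet across the whole layer rather than sampling only its bottom edge.

```python
from typing import Callable


def uniform_layers(height: float, t: float) -> list[float]:
    """Uniform slicing: every layer has thickness t (the last one may be thinner)."""
    layers, z = [], 0.0
    while z < height - 1e-12:
        dz = min(t, height - z)
        layers.append(dz)
        z += dz
    return layers


def adaptive_layers(height: float, abs_nz: Callable[[float], float],
                    t_min: float, t_max: float, cusp_max: float) -> list[float]:
    """Adaptive slicing with a cusp-height limit, cusp ~= t * |n_z|.

    abs_nz(z) returns |n_z| of the surface at height z (an assumed input,
    sampled here only at the bottom of each layer).
    """
    layers, z = [], 0.0
    while z < height - 1e-12:
        nz = abs_nz(z)
        t = t_max if nz == 0 else cusp_max / nz
        t = min(max(t, t_min), t_max)  # clamp to the printer's range
        t = min(t, height - z)         # do not overshoot the top of the part
        layers.append(t)
        z += t
    return layers


if __name__ == "__main__":
    R = 10.0  # sphere of radius 10 mm resting on the build plate

    def sphere_nz(z: float) -> float:
        # For a sphere centred at height R, |n_z| = |z - R| / R.
        return abs(z - R) / R

    uni = uniform_layers(2 * R, 0.05)
    ada = adaptive_layers(2 * R, sphere_nz, t_min=0.05, t_max=0.3, cusp_max=0.05)
    print(len(uni), "uniform layers vs", len(ada), "adaptive layers")
```

Both versions reach the same part height. The adaptive one spends its thin layers only near the top and bottom caps, where the surface is closest to horizontal.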
Adapting the layer thickness to the geometry gives higher resolution in regions that need finer detail and faster printing where features are less intricate.

Infill Optimisation

Infill is the internal structure of the printed part. Optimising the infill pattern and density can considerably affect the part’s strength, weight, and material use. Advanced slicing algorithms allow custom infill patterns such as honeycomb, grid, or gyroid, each offering a different trade-off between strength and material usage.

Support Structure Generation

Support structures are frequently needed during printing to prevent overhanging elements or complex shapes from collapsing or deforming. Optimised slicing algorithms can generate supports only where they are required, minimising material waste and post-processing work.

Printing Path Optimisation

The order in which the printer deposits material affects printing speed and overall print quality. Optimisation approaches aim to reduce retractions and travel distances and to streamline the printing route, eliminating unnecessary stops and starts. Path optimisation can shorten printing time, increasing productivity and efficiency.

Intelligent Cooling Strategies

Cooling is essential in additive manufacturing, especially for materials prone to warping or distortion. By adjusting the printing speed, fan speed, and layer dwell time, slicing algorithms can apply adaptive cooling strategies that control cooling between layers. This improves dimensional accuracy while reducing thermal stress.

Multi-Material Printing

Several additive manufacturing techniques can produce multi-material prints. Optimised slicing algorithms give precise control over material deposition and transition points, ensuring smooth integration of the materials and minimising flaws or weak interfaces.
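The printing-path optimisation described above is often framed as a travel-minimisation problem, closely related to the travelling salesman problem. A minimal sketch is the greedy nearest-neighbour heuristic: always move to the closest unprinted island next. It assumes each island (a separate region to print on a layer) can be represented by a single entry point. Production slicers use richer models, such as segment start and end points and retraction and seam rules, so treat the names and coordinates below as hypothetical.

```python
import math

Point = tuple[float, float]


def travel_length(order: list[Point], start: Point = (0.0, 0.0)) -> float:
    """Total non-printing travel when visiting points in the given order."""
    total, here = 0.0, start
    for p in order:
        total += math.dist(here, p)
        here = p
    return total


def nearest_neighbour_order(points: list[Point], start: Point = (0.0, 0.0)) -> list[Point]:
    """Greedy heuristic: repeatedly jump to the closest unvisited island."""
    remaining = list(points)  # copy so the caller's list is not modified
    order, here = [], start
    while remaining:
        nxt = min(remaining, key=lambda p: math.dist(here, p))
        remaining.remove(nxt)
        order.append(nxt)
        here = nxt
    return order


if __name__ == "__main__":
    islands = [(80.0, 10.0), (5.0, 5.0), (78.0, 60.0), (10.0, 55.0), (45.0, 30.0)]
    greedy = nearest_neighbour_order(islands)
    print(f"as listed: {travel_length(islands):.1f} mm, "
          f"greedy: {travel_length(greedy):.1f} mm")
```

The greedy order is cheap to compute but is not guaranteed to be optimal. Slicers may refine such an order further, for example with 2-opt style improvements.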
Post-Processing Considerations

Slicing algorithms can also optimise the design of support structures so that they are easier to remove or so that less post-processing is needed. This saves time and effort in the final stages of additive manufacturing.

Conclusion

Slicing algorithms are at the core of 3D printing, turning digital models into physical products. The choice and optimisation of slicing algorithms substantially affect the quality, speed, and accuracy of additive manufacturing. Engineers and researchers can maximise the potential of additive manufacturing and open new doors in design and manufacturing by using several techniques: adaptive layer thickness, infill optimisation, support structure generation, printing path optimisation, intelligent cooling strategies, multi-material printing, and post-processing considerations. As additive manufacturing progresses, more sophisticated slicing algorithms and optimisation methods will bring further improvements in print quality, efficiency, and material utilisation. Manufacturers who stay at the cutting edge of these developments can fully utilise the capabilities of additive manufacturing and promote innovation across various industries.

Ready to optimise your additive manufacturing process? Discover the power of Prescient’s advanced slicing algorithms and optimisation techniques. Contact us today to unlock the full potential of 3D printing and revolutionise your manufacturing capabilities.

The Future of CAD: Trends and Innovations in Product Development

Table of contents: Introduction; What exactly is Computer-Aided Design?; Trends and Innovations in Product Development; Conclusion

Introduction

Cutting-edge trends and breakthrough technologies are driving the future of CAD, which is poised to revolutionize product development. As artificial intelligence and machine learning become more prevalent, CAD systems are becoming more sophisticated and intuitive, allowing designers to produce complex designs more easily. Virtual and augmented reality are changing how products are visualized and verified, enabling immersive and interactive design experiences. One remarkable breakthrough in this developing landscape is the vision-based inspection system. It uses computer vision to automate quality control procedures and help ensure precise, error-free manufacturing. The future of CAD is one of boundless possibilities, ushering in a new era of product development. In this article, we examine what computer-aided design (CAD) is, the trends and breakthroughs shaping its future, and how they affect the creation of new products.

What exactly is Computer-Aided Design?

Computer-Aided Design (CAD) is a game-changing technology that allows designers and engineers to realise their creative concepts. It is a digital design technique that replaces manual drafting with precise, accurate, and efficient 2D and 3D models. CAD software offers a strong set of tools that let designers easily view, analyse, and modify their designs. With CAD, complex geometries can be changed easily, prototypes can be simulated and tested, and designs can be optimized for manufacturing. CAD transforms the design workflow, increasing productivity, collaboration, and overall product quality.

Trends and Innovations in Product Development

In today’s rapidly changing environment, technological breakthroughs are transforming product creation.
From cloud-based collaboration to AI-driven design, groundbreaking developments are transforming how the industry produces and optimizes products. Let’s explore the latest trends and innovations revolutionizing the industry.

Cloud-Based CAD

The move to cloud-based solutions is one of the most important developments in CAD. Cloud-based CAD platforms offer several benefits over traditional desktop software. Notably, they enable real-time collaboration, so several team members can work on the same project simultaneously.

Generative Design

Generative design is a cutting-edge methodology that uses artificial intelligence (AI) algorithms to produce a variety of design options from a predetermined set of criteria. Given design goals and constraints, the software can explore many alternatives and produce optimised solutions. This lets designers investigate unusual concepts and find creative solutions that might not otherwise have been considered. Generative design can speed up new product development while cutting material waste and improving performance.

Integration with Virtual Reality (VR) and Augmented Reality (AR)

Virtual reality (VR) and augmented reality (AR) technologies have become very popular in recent years. By letting designers and engineers engage with virtual models more naturally and realistically, these immersive technologies add new dimensions to CAD. VR lets users visualise and experience a design in a simulated environment, providing insights into ergonomics, spatial relationships, and overall aesthetics. AR, by contrast, superimposes virtual models on the real world, enabling real-time design changes and showing how a product fits into its surroundings.
Integrating CAD with VR and AR could revolutionize the design review process, improve collaboration, and enhance decision-making.

Parametric and Feature-Based Modeling

Parametric modelling has been a mainstay of CAD software for many years. It lets designers define relationships between design elements, so that adjustments and updates can be made efficiently. Feature-based modelling extends parametric modelling by building the model from features that capture design intent. Changing or suppressing these features allows rapid design iterations. Further developments in parametric and feature-based modelling will make it simpler for designers to build complicated designs and modify them in response to shifting requirements.

Additive Manufacturing (3D Printing)

Additive manufacturing, also called 3D printing, has expanded rapidly in recent years. CAD plays a significant part in this process by supplying the digital models that are turned into physical products. In the future, CAD will be integrated more closely with additive manufacturing technologies, allowing designers to optimise designs specifically for 3D printing. This includes lattice structures, lightweight constructions, and intricate geometries that were previously impossible or difficult to create using conventional techniques. The convergence of CAD and additive manufacturing will continue to transform numerous industries, including aerospace, healthcare, and automotive.

Artificial Intelligence and Machine Learning

Artificial intelligence (AI) and machine learning (ML) are driving radical change in many industries, including CAD. AI algorithms can analyse large-scale design data, recognise patterns, and generate recommendations. This can help designers make better-informed decisions and improve the overall design process.
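As a small illustration of pattern recognition and recommendation on design data, the sketch below recommends the past designs most similar to a new requirement. It uses k-nearest-neighbour search on min-max-scaled features. This is one simple technique among many, not how any particular CAD product works. The feature set (mass, length, hole count) and the design library are made-up examples.

```python
import math

# Hypothetical library of past designs: name -> (mass_kg, length_mm, hole_count)
LIBRARY = {
    "bracket_A": (0.12, 80.0, 4.0),
    "bracket_B": (0.15, 95.0, 4.0),
    "housing_C": (1.80, 220.0, 12.0),
    "shaft_D": (0.90, 300.0, 0.0),
}


def _scale(vec, lo, hi):
    """Min-max scale each feature using the library's ranges."""
    return [(v - l) / (h - l) if h > l else 0.0 for v, l, h in zip(vec, lo, hi)]


def most_similar(query, library=LIBRARY, k=2):
    """Return the names of the k library designs closest to `query`."""
    columns = list(zip(*library.values()))
    lo = [min(c) for c in columns]
    hi = [max(c) for c in columns]
    q = _scale(query, lo, hi)
    distances = {
        name: math.dist(q, _scale(feats, lo, hi)) for name, feats in library.items()
    }
    return sorted(distances, key=distances.get)[:k]


if __name__ == "__main__":
    print(most_similar((0.13, 85.0, 4.0)))
```

Scaling matters here. Without it, length in millimetres would swamp mass in kilograms and dominate the distance.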
Predictive models for design optimization, cost estimation, and performance analysis can also be built using machine learning algorithms trained on existing design data. By integrating AI and ML into CAD software, designers can take advantage of data-driven insights, automate tedious activities, and enhance creativity.

Internet of Things (IoT) Integration

The Internet of Things (IoT) is a network of connected devices that collect and share data. As IoT technology develops, CAD software will increasingly interface with IoT platforms, enabling designers to create connected, smart products. By embedding sensors and connectivity into their designs, engineers can collect real-time data on product performance, usage patterns, and maintenance requirements. This information can then be used to improve future designs, user experiences, and preventive maintenance.

Conclusion

CAD could drastically change how products are developed. As technology advances, CAD will become more powerful, accessible, and user-friendly. This will enable designers and engineers to produce cutting-edge, environmentally responsible products in an increasingly competitive market. For organizations that want to stay at the forefront of product development in the years to come, embracing these trends and building CAD skills will be essential.

Experience the future of CAD with Prescient: Unlock innovative

KBE Methodology for Product Design and Development

Table of contents: Introduction; The KBE Methodology: An Overview; The Benefits of KBE in Product Design and Development; Application of KBE in Product Design and Development; Challenges and Considerations; Overcoming Resistance to Automation; The Future of Manufacturing and Vision-Based Inspection; Conclusion

Introduction

In today’s fast-paced, highly competitive business environment, companies continuously seek ways to improve their product design and development processes. One methodology that has drawn much interest is Knowledge-Based Engineering (KBE). By combining engineering expertise, CAD, and AI techniques, KBE streamlines the product development lifecycle and boosts overall effectiveness. This article examines the KBE approach, its advantages, and how it can be used to develop new products.

The KBE Methodology: An Overview

Knowledge-Based Engineering (KBE) incorporates knowledge from diverse fields into computer-based systems. These systems automate design and engineering procedures, allowing engineers to develop new products quickly. KBE uses expert systems, rule-based reasoning, and artificial intelligence to capture and apply engineering knowledge across a product’s lifecycle.

The Benefits of KBE in Product Design and Development

Applying the Knowledge-Based Engineering (KBE) methodology to product development brings a range of benefits, helping organisations achieve their objectives more quickly and successfully. Let’s explore the advantages that are changing how products are designed, developed, and brought to market.

Improved Efficiency and Speed

KBE makes it possible to automate time-consuming, repetitive design procedures. By drawing on existing knowledge and rules, engineers can build designs, run simulations, and assess options quickly. This speeds up product development, shortening time to market and giving the company a competitive edge.
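The sketch below is a minimal example of a KBE-style design rule, showing what capturing existing knowledge and rules can look like in code. It sizes a solid circular shaft from the transmitted torque using the standard torsion relation τ = 16T/(πd³), then rounds the result up to a preferred size. The function name, the allowable-stress input, and the catalogue of standard diameters are hypothetical placeholders. A real KBE system would draw such rules and tables from company or industry standards.

```python
import math

# Hypothetical catalogue of preferred shaft diameters (mm)
STANDARD_DIAMETERS_MM = [10, 12, 16, 20, 25, 30, 35, 40, 50, 60]


def size_solid_shaft(torque_nmm: float, allowable_shear_mpa: float) -> int:
    """Pick the smallest standard diameter whose torsional shear stress is allowable.

    Rule: tau = 16 * T / (pi * d**3)  =>  d_min = (16 * T / (pi * tau_allow)) ** (1/3)
    Units: torque in N*mm, stress in MPa (N/mm^2), diameter in mm.
    """
    d_min = (16 * torque_nmm / (math.pi * allowable_shear_mpa)) ** (1 / 3)
    for d in STANDARD_DIAMETERS_MM:
        if d >= d_min:
            return d
    raise ValueError(f"required diameter {d_min:.1f} mm exceeds the catalogue")


if __name__ == "__main__":
    # 200 N*m transmitted, 40 MPa allowable shear (illustrative values)
    print(size_solid_shaft(200_000, 40))
```

Once a rule like this is encoded, every new torque requirement is sized the same way without manual recalculation. This is the kind of repetitive step the paragraph above describes automating.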
Enhanced Product Quality

KBE systems enforce design constraints and rules, reducing error rates and ensuring adherence to industry standards. By embedding engineering expertise in the design process, KBE lessens the likelihood of design mistakes, improving the overall quality and reliability of the finished product.

Increased Collaboration and Reusability

KBE encourages communication and cooperation between engineering teams. Once design knowledge has been captured and organised in a central repository, all stakeholders can access it easily. This promotes reuse and enables engineers to draw on prior expertise and proven ideas.

Cost Reduction

KBE’s automation reduces manual iterations, cutting labour costs and associated overhead. By minimising mistakes and optimising design decisions, KBE helps avoid expensive design revisions in later phases of product development. Reusing information and design elements lowers costs further.

Application of KBE in Product Design and Development

Using Knowledge-Based Engineering (KBE) in product design and development opens up many opportunities. By working with CAD, simulation, and analysis tools, KBE enables engineers to optimise designs, ensure compliance with standards, and streamline the overall development workflow. This section examines how KBE is changing the way products are developed, validated, and prepared for manufacturing.

Conceptual Design

KBE provides design templates, rule-based reasoning, and simulation tools to aid the conceptual design stage. Within established guidelines and constraints, engineers can quickly investigate potential design solutions, assess their performance, and make sound decisions.
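In the conceptual stage, rule-based reasoning can be as simple as checking each candidate concept against a list of named rules and reporting which ones it breaks. The sketch below assumes designs are plain dictionaries of parameters. The rules and thresholds are invented for illustration and do not come from any standard.

```python
from typing import Callable

Design = dict[str, float]
Rule = tuple[str, Callable[[Design], bool]]

# Hypothetical design rules: (description, predicate that must hold)
RULES: list[Rule] = [
    ("wall thickness >= 1.5 mm", lambda d: d["wall_mm"] >= 1.5),
    ("fillet radius >= half the wall thickness",
     lambda d: d["fillet_mm"] >= 0.5 * d["wall_mm"]),
    ("mass <= 2.0 kg", lambda d: d["mass_kg"] <= 2.0),
]


def violations(design: Design, rules: list[Rule] = RULES) -> list[str]:
    """Names of the rules this design breaks (an empty list means compliant)."""
    return [name for name, holds in rules if not holds(design)]


def screen(candidates: dict[str, Design],
           rules: list[Rule] = RULES) -> dict[str, list[str]]:
    """Check every candidate concept against every rule."""
    return {cid: violations(d, rules) for cid, d in candidates.items()}


if __name__ == "__main__":
    concepts = {
        "concept_1": {"wall_mm": 2.0, "fillet_mm": 1.0, "mass_kg": 1.4},
        "concept_2": {"wall_mm": 1.2, "fillet_mm": 0.4, "mass_kg": 0.9},
        "concept_3": {"wall_mm": 3.0, "fillet_mm": 2.0, "mass_kg": 2.6},
    }
    for cid, broken in screen(concepts).items():
        print(cid, "OK" if not broken else broken)
```

Keeping rules as data rather than hard-coding them into the checker lets engineers add or revise a guideline without touching the screening logic.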
Detailed Design

During detailed design, KBE streamlines the creation of 3D CAD models, automates geometric and parametric modelling, and maintains adherence to design standards. It lets engineers optimise designs by taking into account factors such as material selection, manufacturability, and assembly requirements.

Simulation and Analysis

Vision-based inspection systems can verify correct component alignment and positioning in complex manufacturing lines. By comparing captured images against predefined templates, they ensure exact assembly and reduce the likelihood of defective or out-of-place items. Detecting faults early allows manufacturers to avoid problems later on and enhance overall product quality.

Design Validation and Verification

Engineers can use KBE systems to validate designs against industry standards, regulatory requirements, and design specifications. By automating compliance checks, KBE ensures that products meet safety, quality, and performance standards before they move into production.

Challenges and Considerations

Although vision-based inspection systems offer several benefits for production, they can be difficult to implement. Several factors must be addressed to achieve successful integration and the best results. Let’s examine the difficulties and key factors to consider when implementing vision-based inspection in manufacturing.

Overcoming Resistance to Automation

The advantages of vision-based inspection systems are clear, but some manufacturers may be reluctant to adopt automation because of concerns about job losses and up-front costs. It is important to understand that automation does not necessarily mean replacing human workers. Instead, it lets them concentrate on higher-value tasks such as analysing inspection data, streamlining processes, and improving quality.
Furthermore, long-term cost savings and increased productivity can outweigh the initial investment in vision-based inspection equipment. When weighing the deployment of these technologies, manufacturers should consider the return on investment (ROI) and potential competitive advantages.

The Future of Manufacturing and Vision-Based Inspection

Automation is key to the future success of manufacturing, and vision-based inspection is leading this change. These systems will become more powerful, precise, and adaptable as technology develops. Integration with other emerging technologies, including robotics, the Internet of Things (IoT), and augmented reality, will further improve the effectiveness and capabilities of vision-based inspection in manufacturing. As the industry prioritises sustainability and waste reduction, vision-based inspection technologies will help maintain product quality while reducing environmental impact. By identifying problems early in production, manufacturers can reduce waste and help create a more sustainable manufacturing ecosystem.

Conclusion

Vision-based inspection systems are changing the manufacturing sector by offering precise, effective, and reasonably priced quality control. By embracing automation, manufacturers can achieve greater precision, higher efficiency, and lower costs. By using AI and machine vision technologies, businesses can streamline processes, enhance product quality, and gain a competitive edge in the global market.

Ready to revolutionise your manufacturing processes with vision-based inspection? Contact Prescient today to unlock the power of automation, accuracy, and efficiency in quality control.

Revolutionising Engineering Design: The Role of AI & Machine Learning in KBE

Table of contents: Introduction; Understanding Knowledge-Based Engineering (KBE); AI and Machine Learning in KBE; Benefits of AI in KBE; Challenges and Considerations; Conclusion

Introduction

Engineering design is one field where artificial intelligence (AI) has made significant strides. With advances in machine learning algorithms, AI is redefining how engineers approach design problems, leading to more practical and innovative solutions. In this article, we embark on a voyage into knowledge-based engineering (KBE), where AI and machine learning take centre stage. Get ready to see how engineering design is being revolutionised, as we examine how AI and machine learning algorithms are rewriting the rules, automating tedious jobs, and accelerating creativity. So, let us dive in.

Understanding Knowledge-Based Engineering (KBE)

Knowledge-based engineering (KBE) is a methodology that uses knowledge-driven systems to automate engineering design processes. It depends on capturing and applying expert knowledge to develop design alternatives, automate design processes, and support decision-making. KBE systems combine design guidelines, technical expertise, and computer algorithms to enhance the design process and boost productivity. So, let us explore the cutting-edge world where engineering and technology meet to create new possibilities for design.

AI and Machine Learning in KBE

KBE systems depend heavily on artificial intelligence, especially machine learning, which enables them to learn from data, spot patterns, and make informed decisions. Here are a few ways that AI and machine learning, through KBE, are revolutionising engineering design.

Machine learning algorithms can analyse large amounts of data to improve designs.
By finding patterns and relationships in data, AI can generate design options and select the best one based on predefined criteria. This saves time and resources by eliminating manual trial and error.

Generative design uses AI algorithms, given constraints and goals, to generate many design possibilities. Machine learning models can examine existing designs, learn from them, and produce new design concepts better suited to specific needs. This creates new opportunities and lets engineers investigate novel concepts that might not otherwise have been considered.

With AI-powered expert systems, KBE can capture and use expert knowledge to automate design processes. These systems can replicate expert human decision-making and offer advice based on pre-established rules and criteria. By drawing on the pooled expertise of specialists, AI helps engineers design more effectively and efficiently.

Machine learning algorithms can analyse large datasets to uncover useful information that might not be visible to human designers. By spotting hidden patterns and connections, AI can uncover novel design concepts or optimisation techniques that advance engineering design. This boosts originality and creativity during the design process.

Benefits of AI in KBE

Use the AI capabilities of Knowledge-Based Engineering (KBE) to accelerate your engineering design process. Bid adieu to manual labour and welcome greater productivity, accuracy, cost savings, and a burst of innovation. Let’s explore the advantages that AI offers KBE.

KBE provides design templates, rule-based reasoning, and simulation tools to aid the conceptual design stage. Within established guidelines and constraints, engineers can quickly investigate potential design solutions, assess their performance, and make sound decisions.
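The generate-and-select idea described earlier in this article can be sketched in a few lines: produce many design options under constraints, then pick the best one by a predefined criterion. Here, plain random sampling stands in for the AI algorithms the article refers to. The problem is a textbook one chosen for illustration: a rectangular cantilever beam of width b and height h carries an end load F over length L, and its peak bending stress is 6FL/(bh²). The goal is the smallest cross-sectional area b·h that keeps the stress under an allowable value. The bounds, loads, and function names are assumptions.

```python
import random


def bending_stress(b: float, h: float, force_n: float, length_mm: float) -> float:
    """Peak stress (MPa) at the root of a rectangular cantilever: 6FL / (b h^2)."""
    return 6 * force_n * length_mm / (b * h ** 2)


def generate_and_select(n, force_n, length_mm, sigma_allow_mpa,
                        b_range=(5.0, 50.0), h_range=(5.0, 100.0), seed=0):
    """Sample n candidate sections, drop infeasible ones, keep the lightest."""
    rng = random.Random(seed)  # seeded so results are repeatable
    best = None
    for _ in range(n):
        b = rng.uniform(*b_range)
        h = rng.uniform(*h_range)
        if bending_stress(b, h, force_n, length_mm) > sigma_allow_mpa:
            continue  # violates the stress constraint
        if best is None or b * h < best[0] * best[1]:
            best = (b, h)  # new lowest-area feasible design
    return best  # None if no sample was feasible


if __name__ == "__main__":
    b, h = generate_and_select(5000, force_n=1000, length_mm=500, sigma_allow_mpa=150)
    print(f"b = {b:.1f} mm, h = {h:.1f} mm, area = {b * h:.0f} mm^2")
```

Random search is the crudest possible generator. The point is the structure: generate candidates, check constraints, and rank by the objective. A smarter generator can be swapped in without changing the other two steps.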
AI helps reduce costs during the design and production phases by optimising designs and reducing the need for physical prototypes. AI-enabled simulation and virtual testing can detect possible problems early, saving time and money.

AI-powered generative design lets engineers explore a wide range of design options and push beyond what is conventionally thought possible. This encourages creativity and creates new engineering design opportunities.

Challenges and Considerations

Although using AI in knowledge-based engineering (KBE) has many advantages, there are also significant difficulties and factors to consider. These must be addressed to achieve successful integration and the best results. The following are some major issues to think about:

The decision-making process of AI models can be opaque, making it hard to understand the reasons behind their recommendations. This is a particular concern in safety-critical engineering applications.

As AI becomes more prevalent in engineering design, ethical considerations such as fairness, accountability, and transparency must be addressed. Designers should be aware of potential biases and unintended consequences of AI-powered systems.

AI should be seen as a tool that enhances human capabilities rather than replacing human expertise. Collaborative approaches that combine human creativity and judgement with AI-driven automation can yield the best results.

Conclusion

AI-based knowledge-based engineering (KBE) technologies are revolutionising engineering design. With AI, engineers can use expert knowledge efficiently, produce creative solutions, and validate and optimise designs. Incorporating AI in KBE brings greater efficiency, accuracy, cost savings, innovation, and continual learning.
However, it is crucial to deal with issues such as data quality, interpretability, ethical concerns, and effective human-machine collaboration. As AI develops, it will play an increasingly important role in how engineering design is done, allowing engineers to work more effectively, creatively, and successfully. Are you ready to revolutionise your engineering design process? Harness the power of AI and experience the benefits of knowledge-based engineering (KBE) with Prescient. Contact us today to explore how AI-powered solutions can optimise your designs, improve efficiency, and unlock new levels of innovation. Let's shape the future together!
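As a concrete illustration of machine learning models that "examine existing designs and draw lessons from them", here is a minimal Python sketch of a surrogate model. It is an assumption-laden toy, not Prescient's method or any particular KBE product: the `Design` fields, the historical numbers, and the stress limit are all hypothetical, and a one-variable least-squares line stands in for a real machine learning model. The fitted model predicts peak stress from rib thickness and screens out weak candidates before any detailed analysis is run.

```python
from dataclasses import dataclass


@dataclass
class Design:
    rib_thickness_mm: float  # design parameter (hypothetical)
    peak_stress_mpa: float   # measured or simulated result (hypothetical)


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def screen_candidates(history, candidates, stress_limit_mpa):
    """Learn from past designs, then keep candidates predicted to stay under the limit."""
    slope, intercept = fit_line(
        [d.rib_thickness_mm for d in history],
        [d.peak_stress_mpa for d in history],
    )
    return sorted(t for t in candidates if slope * t + intercept <= stress_limit_mpa)


# Hypothetical history: thicker ribs gave lower peak stress.
history = [Design(2.0, 180.0), Design(3.0, 150.0), Design(4.0, 120.0), Design(5.0, 90.0)]
print(screen_candidates(history, [2.5, 3.5, 4.5, 5.5], stress_limit_mpa=130.0))
# prints [4.5, 5.5]
```

With these made-up numbers the fitted line is stress = 240 - 30 x thickness, so only the 4.5 mm and 5.5 mm candidates are predicted to stay under 130 MPa. A production system would use many more design features, far more data, and a model validated against physical tests.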

KBE and Simulation: Enhancing Product Design

Table of Contents
Introduction
Enhancing Product Design with KBE and Simulation
The Power of Knowledge-Based Engineering
The Role of Simulation in Product Design
Synergistic Effect: KBE and Simulation
Case Study: KBE and Simulation in Automotive Design
Conclusion

Introduction

Any business's success depends greatly on the quality of its products. Enterprises increasingly rely on cutting-edge technologies such as knowledge-based engineering (KBE) and simulation to develop innovative and effective products. KBE integrates knowledge and rules into design processes, whereas simulation enables virtual testing and validation of product designs. This article examines how KBE and simulation are revolutionising product design by improving efficiency, lowering costs, and raising overall product quality.

Enhancing Product Design with KBE and Simulation

Companies work hard to develop creative and effective products to remain competitive in today's fast-changing business environment. KBE, simulation, and related technologies are changing how products are designed, transforming processes to increase productivity, lower costs, and produce goods of higher overall quality. Let's explore how these technologies are revolutionising product design.

The Power of Knowledge-Based Engineering

Knowledge-based engineering (KBE) is a design methodology that uses captured rules and technical information to automate and improve the product design process. By letting engineers embed their knowledge in software platforms, it enables faster and more effective design iterations. Here are a few KBE advantages:

Automation and Efficiency
KBE automates repetitive design procedures and facilitates the reuse of existing design knowledge. Because design time drops substantially, engineers can concentrate on difficult problems rather than tedious ones.
Design Rule Checking
By integrating design rules into KBE systems, engineers can ensure that designs adhere to industry standards, regulations, and best practices. This reduces human error and, with it, the risk of expensive design mistakes.

Design Optimisation
KBE systems can investigate several design options and automatically evaluate them against specified criteria. This helps engineers find the design option that best satisfies the required performance standards.

Knowledge Capture and Retention
KBE makes the implicit expertise of seasoned engineers accessible to the entire design team. This ensures knowledge continuity and lets less experienced designers benefit from their colleagues' experience.

The Role of Simulation in Product Design

Simulation is the technique of modelling a product or system to study how it would behave under various conditions. It helps engineers predict and understand how a product will perform in the real world without building physical prototypes. Here are some ways simulation improves product design:

Iterative Design
Using simulation, engineers can quickly evaluate and refine design concepts in a virtual environment. Before committing to physical prototypes, they can simulate various scenarios, make design improvements, and assess the effects of those changes. This iterative approach cuts costs and saves time.

Performance Evaluation
Simulation provides useful information about a product's performance, including its structural integrity, fluid dynamics, thermal behaviour, and electromagnetic properties. Engineers can spot flaws and make adjustments early in the design process, leading to more durable and dependable products.

Risk Mitigation
Simulation lets engineers pinpoint possible hazards and failure modes in a safe setting. It can reproduce harsh conditions, stress tests, and failure scenarios to ensure the product can withstand the challenges it will meet in the real world.
Cost Reduction
Simulation can dramatically lower the cost of physical prototyping and testing. Because fewer prototypes need to be built and fewer rounds of physical testing are required, it saves both time and money.

Synergistic Effect: KBE and Simulation

Combining KBE and simulation creates a powerful synergy that enhances the product design process. Here is how these technologies work together:

Automated Design Space Exploration
KBE systems can produce a variety of design possibilities that are then assessed through simulation. Once engineers define the parameters and constraints, the KBE system generates design candidates for simulation analysis. This makes it possible to explore the design space quickly and find the best solutions.

Rapid Design Iterations
Because KBE automates the generation of design variants and simulation evaluates each one virtually, engineers can run many design iterations quickly without waiting for physical prototypes.

Virtual Testing and Validation
Simulation lets engineers test and validate a product virtually before it is manufactured. By running the virtual model through various tests and simulations, such as structural analysis, fluid dynamics, or electromagnetic simulations, engineers can spot possible problems or opportunities for improvement. This reduces the need for physical prototypes and lowers the risk of expensive design mistakes.

Design Optimisation and Performance Evaluation
Together, KBE and simulation make design optimisation and performance assessment possible. KBE systems can automatically generate design alternatives based on predefined criteria, and simulation can then evaluate their performance. By analysing the simulation data, engineers can choose the most effective and dependable design. This collaborative process improves the overall quality of the product design and enables data-driven decision-making.
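To make the "KBE generates, simulation evaluates" loop concrete, here is a minimal Python sketch under heavy simplifying assumptions. The KBE step is a hypothetical generator that enumerates rectangular beam sections and applies one made-up design rule (height at most four times width); the "simulation" step is the textbook Euler-Bernoulli tip-deflection formula for an end-loaded cantilever, δ = FL³/(3EI) with I = bh³/12, standing in for a real FEA run. Units are N, mm, and MPa, and all numbers are illustrative.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class BeamDesign:
    width_mm: float
    height_mm: float


def generate_designs(widths, heights, max_aspect_ratio=4.0):
    """KBE-style step: enumerate variants and apply a (hypothetical) slenderness rule."""
    for w, h in product(widths, heights):
        if h / w <= max_aspect_ratio:
            yield BeamDesign(w, h)


def tip_deflection_mm(design, load_n, length_mm, e_mpa):
    """Stand-in 'simulation': cantilever tip deflection F*L^3 / (3*E*I)."""
    second_moment_mm4 = design.width_mm * design.height_mm ** 3 / 12.0
    return load_n * length_mm ** 3 / (3.0 * e_mpa * second_moment_mm4)


def best_design(designs, load_n, length_mm, e_mpa, max_deflection_mm):
    """Keep designs within the deflection limit; return the smallest cross-section."""
    feasible = [
        d for d in designs
        if tip_deflection_mm(d, load_n, length_mm, e_mpa) <= max_deflection_mm
    ]
    return min(feasible, key=lambda d: d.width_mm * d.height_mm, default=None)


# Illustrative case: 200 mm aluminium-like beam (E = 70,000 MPa), 500 N tip load, 1 mm limit.
candidates = generate_designs([5, 10, 15, 20], [10, 20, 30, 40])
print(best_design(candidates, load_n=500, length_mm=200, e_mpa=70_000, max_deflection_mm=1.0))
# prints BeamDesign(width_mm=10, height_mm=30)
```

The 10 x 30 mm section deflects about 0.85 mm. A slender 5 x 40 mm section would also meet the limit with less material; it is the hypothetical aspect-ratio rule, the kind of captured knowledge KBE contributes, that excludes it.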
Case Study: KBE and Simulation in Automotive Design

Let's look at how KBE and simulation are used in the automotive sector to demonstrate their value in product design. In automotive design, KBE systems can automate the creation of design alternatives for vehicle components such as engine parts, the chassis, or aerodynamic elements. These designs can then be simulated to assess structural integrity, crashworthiness, and aerodynamic performance. Engineers can identify the design approaches that meet safety standards, maximise fuel economy, and improve overall vehicle performance. Compared with conventional trial-and-error procedures, this integrated strategy saves time and resources.

Conclusion

Simulation and knowledge-based engineering (KBE) are revolutionising product design by increasing effectiveness, lowering costs, and raising overall product quality. KBE automates design processes and captures engineering knowledge, while also supporting design rule validation and optimisation. Simulation, in contrast, enables engineers to test and evaluate product designs virtually, spot flaws, and reduce risks. Combining KBE and simulation makes rapid design iterations, virtual testing, and design optimisation possible. This integrated strategy speeds up the design process, lowers costs, and produces cutting-edge, dependable products. The use of KBE and simulation in product design is anticipated

KBE and the Future of Engineering: Predictions and Trends for the Coming Years

Table of Contents
Introduction
The Future of Engineering and KBE
Future Trends and Predictions
Navigating the Challenges: Potential Threats in the Realm of KBE
Conclusion

Introduction

Imagine a world where engineering is at the top of its game, designs are brilliantly optimised, and the potential for creation seems limitless. This is the world of knowledge-based engineering (KBE), where technologies such as automation and artificial intelligence (AI) are integrated to transform the design process. KBE is like having a knowledgeable engineering assistant who can analyse options, crunch numbers, and develop highly optimised solutions. It is a game-changer that promotes innovation, saves time, and reduces ambiguity. This article examines the possibilities and anticipated changes the engineering industry will see in the coming years.

The Future of Engineering and KBE

With KBE, you can work smoothly with cross-disciplinary teams, explore novel design approaches, and take advantage of AI-driven optimisation. The goal goes beyond efficiency and cost reduction: it is about pushing boundaries and achieving engineering excellence. So fasten your seatbelts: KBE is taking engineering into uncharted territory. As we enter a new era of technological development, it is worth investigating the predictions and trends that will shape KBE and engineering as a whole.

Future Trends and Predictions

Knowledge-based engineering (KBE) is set to redefine design and innovation. Let's explore the trends and forecasts that will influence the KBE landscape in the coming years.

AI-Driven Design Optimisation
The broad adoption of AI-driven design optimisation is one of the key themes for the future of KBE.
As AI algorithms become more sophisticated and powerful, engineers can use them to optimise designs automatically against predefined goals and constraints. By analysing enormous volumes of data and evaluating large numbers of design iterations, AI can identify strong solutions quickly and effectively. The result is highly effective, cost-effective designs that save time and pave the way for greater productivity and creativity.

Generative Design for Unconventional Solutions
Another interesting development in KBE is generative design, which enables engineers to investigate design approaches that had not previously been considered. AI algorithms can be given design constraints and requirements and then produce many design options that meet them. Engineers can explore previously unimaginable possibilities and push the bounds of what is considered practical. In the future of KBE, generative design is expected to foster innovation significantly and empower engineers to produce truly ground-breaking designs.

Integration of IoT and Sensor Data
The Internet of Things (IoT) and sensor technologies will be crucial to the future of KBE. With the growth in connected devices and sensors, engineers will have access to real-time data from numerous sources. This information can be used to track how designs perform in actual use, spot possible problems, and make data-driven improvement decisions. Integrating IoT and sensor data into KBE systems will improve the accuracy and dependability of engineering designs, resulting in more effective and durable solutions.

Augmented Reality (AR) and Virtual Reality (VR) in Design Visualisation
The rapid advancement of AR and VR technologies is predicted to change how engineers visualise and interact with designs.
Engineers will soon be able to immerse themselves in virtual environments to inspect and evaluate designs, which will help them spot potential design problems or improvements more efficiently. Because engineers in different locations can collaborate virtually in a single design environment, AR and VR will also make it easier for interdisciplinary teams to collaborate and communicate. This trend will speed up decision-making, improve overall design quality, and streamline the design review process.

Continued Integration of Expert Systems
Expert systems powered by AI and machine learning will remain extremely important in KBE. These systems capture and apply expert knowledge to automate intricate design procedures, imitate human judgement, and make informed recommendations. Future KBE systems will include even more advanced expert systems capable of handling difficult design problems, effectively augmenting the knowledge of human engineers. This will reduce manual work and enable faster, more precise design iterations, increasing engineering productivity.

Emphasis on Sustainable and Eco-friendly Designs
As environmental concerns gain prominence, the future of KBE will place greater emphasis on sustainable and eco-friendly designs. AI algorithms will be used to optimise designs for resource utilisation, environmental impact, and energy efficiency. By taking sustainability into account early in the design process, engineers can create more environmentally responsible solutions and contribute to a greener future. By combining AI's capabilities with KBE, engineers can meet sustainable design objectives while maintaining the best possible performance and functionality.

Navigating the Challenges: Potential Threats in the Realm of KBE

Knowledge-based engineering (KBE) is a field where technology opens doors to countless possibilities. Still, there are hidden dangers that we need to deal with.
Like any powerful tool, automation and AI in KBE come with their own set of difficulties. We must navigate the perilous waters of data quality to provide accurate and representative data to our AI models. To achieve transparency and interpretability, we must open up the black box of AI decision-making. Looming ethical considerations demand fairness, accountability, and a strong awareness of unforeseen consequences. Despite these difficulties, we must not lose sight of what engineering is all about: the value that only human knowledge can provide. We must proceed with care, combining the strength of artificial intelligence with the wisdom of human judgement and encouraging human-machine collaboration. By tackling these challenges head-on, we can unleash KBE's full potential and guide it towards a future where engineering reaches new heights and creativity flourishes.

Conclusion

As we conclude this journey through the realm of knowledge-based engineering (KBE), one thing is clear: the future of engineering is brimming with potential. However, we must also acknowledge the challenges that come with this technological advancement. By embracing these challenges, we can unlock the true power of KBE and shape a future where innovation knows no bounds. Join us at Prescient, where we stand

Introduction to Knowledge-Based Engineering (KBE) – Understanding the Concept and Benefits

Table of Contents
Introduction
What Is Knowledge-Based Engineering?
The Knowledge-Based Engineering Process
Benefits of Knowledge-Based Engineering
Conclusion

Introduction

The world of technology is evolving rapidly, with new developments appearing constantly. From automation to preference-based customisation, many advances in engineering are helping companies deliver better and more efficient products to customers. One such development is the increasing use of the knowledge-based engineering methodology. In the simplest terms, knowledge-based engineering refers to the combination of artificial intelligence (AI), object-oriented programming, and computer-aided design (CAD) automation. In this article, we explain the concept of KBE and its benefits.

What Is Knowledge-Based Engineering?

Knowledge-based engineering (KBE) is an engineering methodology that uses a computer-based system to capture, analyse, and apply engineering knowledge to design and develop new products. It has transformed the engineering industry by offering a more efficient and effective way of creating products. Built on scientific methods and proven technology, it automates and customises product design to speed up the process and deliver better, more efficient results. Through design automation, it removes the need for repetitive design work, allowing engineers to focus on the more complex and creative aspects of design. Typically, a KBE system has two core components: a knowledge base and a reasoning engine. The knowledge base contains the rules, facts, and models that define the engineering problem domain, and the reasoning engine uses this information to draw conclusions and generate solutions. KBE has been applied in various industries, including aerospace, automotive, defence, and healthcare.
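The split between a knowledge base and a reasoning engine can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular KBE product works: the knowledge base is a list of hypothetical if-then rules about material choice and wall thickness (the 1,000 N threshold and the minimum wall values are made up), and the reasoning engine is a simple forward-chaining loop that keeps applying rules until no new facts appear.

```python
from dataclasses import dataclass
from typing import Callable, Dict

Facts = Dict[str, object]


@dataclass
class Rule:
    name: str
    condition: Callable[[Facts], bool]
    conclude: Callable[[Facts], Facts]


# Knowledge base: hypothetical design rules (thresholds are illustrative only).
KNOWLEDGE_BASE = [
    Rule("heavy load -> steel",
         lambda f: f.get("load_n", 0) > 1000,
         lambda f: {"material": "steel"}),
    Rule("light load -> aluminium",
         lambda f: "load_n" in f and f["load_n"] <= 1000,
         lambda f: {"material": "aluminium"}),
    Rule("steel minimum wall",
         lambda f: f.get("material") == "steel",
         lambda f: {"min_wall_mm": 3.0}),
    Rule("aluminium minimum wall",
         lambda f: f.get("material") == "aluminium",
         lambda f: {"min_wall_mm": 2.0}),
    Rule("enforce minimum wall",
         lambda f: "min_wall_mm" in f and "requested_wall_mm" in f,
         lambda f: {"wall_mm": max(f["requested_wall_mm"], f["min_wall_mm"])}),
]


def infer(rules, facts):
    """Reasoning engine: forward chaining until no rule adds a new fact."""
    facts = dict(facts)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.condition(facts):
                # Rules only add facts; existing facts are never overwritten.
                new_facts = {k: v for k, v in rule.conclude(facts).items() if k not in facts}
                if new_facts:
                    facts.update(new_facts)
                    changed = True
    return facts


print(infer(KNOWLEDGE_BASE, {"load_n": 1500, "requested_wall_mm": 2.5}))
# prints {'load_n': 1500, 'requested_wall_mm': 2.5, 'material': 'steel', 'min_wall_mm': 3.0, 'wall_mm': 3.0}
```

Because facts are only ever added, never overwritten, the loop stops once a full pass adds nothing new. Real KBE systems add conflict resolution, explanations of which rules fired, and links to CAD geometry.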
A KBE system also includes a user interface alongside its inference rules and knowledge base. The knowledge base stores data on product design, technical concepts, and production procedures.

The Knowledge-Based Engineering Process

Although KBE is often equated with CAD customisation or CAD automation, the KBE process is built entirely on acquiring and using knowledge. It involves the following steps:

1. Knowledge acquisition: Collect knowledge from subject-matter experts, books, journals, and other sources, then organise it so that a computer can process it.
2. Knowledge modelling: Use a knowledge representation language, such as rule-based systems or semantic networks, to model the acquired information and define the connections between the data and the rules that describe the engineering domain.
3. Knowledge integration: Incorporate the modelled knowledge into a KBE system with a knowledge base and reasoning engine, storing it there systematically.
4. Knowledge validation: Test the KBE system against well-known solutions to validate it and ensure it generates accurate and trustworthy results.
5. KBE system deployment: Install the validated KBE system, train engineers to use it, and integrate it into current engineering procedures to improve efficiency and quality.
6. KBE system maintenance: Maintain the KBE system on an ongoing basis to keep it effective. This involves monitoring its operation, updating the knowledge base and reasoning engine, and incorporating user feedback.

Understanding the benefits of knowledge-based engineering is equally important if you want to implement it for long-term gains.

Benefits of Knowledge-Based Engineering

A knowledge-based engineering system has multiple benefits, discussed below:

Increased efficiency
KBE automates time-consuming, repetitive procedures, cutting the time and effort needed to complete engineering tasks.
Improved quality
KBE offers an organised approach to engineering, which reduces errors and raises the quality of the finished product.

Reduced costs
By automating procedures and improving quality, KBE can help lower the cost of engineering activities.

Enhanced collaboration
KBE makes it easier for engineering teams to collaborate and communicate, allowing them to share best practices and information.

Faster product development
By automating design and analysis tasks and shortening the prototyping process, KBE helps speed up product development cycles.

Increased innovation
KBE can promote innovation by enabling engineers to explore more inventive solutions and giving them access to a wider variety of design and analysis tools.

Improved decision-making
KBE can support decision-making by giving engineers access to more precise and trustworthy data and analysis results.

Better documentation
By automatically capturing and organising engineering knowledge and data, KBE can improve documentation and lower the risk of data loss or mismanagement.

Improved customer satisfaction
KBE can increase customer satisfaction by speeding up product development, cutting costs, and raising product standards.

Conclusion

Knowledge-based engineering (KBE) is not merely a technology or tool; it is a mindset that emphasises the value of knowledge and experience in engineering. By combining human knowledge with artificial intelligence, KBE helps engineers be more productive, inventive, and successful while reducing the risk of mistakes and delays. We at PreScient are dedicated to helping businesses of all sizes and sectors realise the full potential of KBE, which we see as the future of engineering. Whether you're just getting started with KBE or hoping to advance your skills, our team of professionals can offer the advice, resources, and assistance you need to succeed. To find out more, call us right away.
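Step 4 of the process, knowledge validation, amounts to regression testing: run the KBE system on cases whose answers are already known and flag any disagreement. The Python sketch below illustrates that idea under simple assumptions. The rule under test (the second moment of area of a rectangular section, I = bh³/12) and the two reference cases are illustrative, and the faulty variant with width and height swapped is hypothetical, included only to show what a failed validation looks like.

```python
import math
from typing import Callable, List, NamedTuple, Tuple


class ReferenceCase(NamedTuple):
    inputs: dict
    expected: float  # answer from a known, previously verified solution


def validate(system: Callable[..., float], cases: List[ReferenceCase],
             rel_tol: float = 1e-6) -> List[Tuple[ReferenceCase, float]]:
    """Run the KBE rule on every reference case and collect any that disagree."""
    failures = []
    for case in cases:
        actual = system(**case.inputs)
        if not math.isclose(actual, case.expected, rel_tol=rel_tol):
            failures.append((case, actual))
    return failures


def rect_second_moment_mm4(width_mm: float, height_mm: float) -> float:
    """Rule under test: I = b * h^3 / 12 for a rectangular section."""
    return width_mm * height_mm ** 3 / 12.0


def buggy_second_moment_mm4(width_mm: float, height_mm: float) -> float:
    """Hypothetical faulty rule with width and height swapped."""
    return height_mm * width_mm ** 3 / 12.0


REFERENCE_CASES = [
    ReferenceCase({"width_mm": 10.0, "height_mm": 30.0}, 22_500.0),
    ReferenceCase({"width_mm": 12.0, "height_mm": 12.0}, 1_728.0),
]

print(len(validate(rect_second_moment_mm4, REFERENCE_CASES)))   # prints 0
print(len(validate(buggy_second_moment_mm4, REFERENCE_CASES)))  # prints 1
```

Note that the square-section case passes even for the faulty rule, which is why a validation set should include cases that exercise each input independently.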

How Inspection And Validation Can Improve The Reliability Of Additive Manufacturing Processes

Table of Contents
What Is Meant by Inspection and Validation?
4 Ways Inspection and Validation Improve Reliability
How Can You Implement Inspection and Validation in Your Additive Manufacturing Process?
Conclusion

Do you want to make your additive manufacturing processes more reliable? If so, you've come to the right place. This article explains how inspection and validation can make your 3D printing more reliable. Additive manufacturing, often called "3D printing", is a fast-growing field that is changing the way we make things. Because it can produce complicated shapes and patterns, 3D printing is a popular way to make prototypes and custom parts. Still, as with any manufacturing process, it is essential to make sure that the final product meets quality and reliability standards. This is where inspection and validation come in.

What Is Meant by Inspection and Validation?

Inspection is the examination of a printed part to confirm that it meets specified standards, such as dimensions, surface finish, and material quality. Validation, on the other hand, is the process of testing the part to confirm that it performs as expected under different conditions, such as mechanical stress or heat. Better inspection and testing methods can make additive manufacturing more reliable.

4 Ways Inspection and Validation Improve Reliability

1. Catching Errors Early
Inspection is a key part of the additive manufacturing process because it lets you find mistakes early, which can save time and money in the long run. For instance, if a part is being printed at the wrong size, the error can be caught and fixed early, so the whole part does not have to be remade or thrown away. Catching problems early saves money and improves the overall quality of your printed parts.

2. Ensuring Consistent Quality
Inspection and validation processes help ensure that the quality of your printed parts stays consistently high.
This is very important if you want to make consistent products that meet your customers' needs. By regularly testing and certifying your parts, you can find quality problems and fix them before they get worse. This keeps your customers satisfied and improves the overall quality of your goods.

3. Identifying Areas for Improvement
Inspecting and testing your additive manufacturing process can reveal ways to make it better. For example, if parts keep failing a certain validation test, you can find out why and make the necessary changes to the printing process. This can improve the performance and dependability of your printed parts, making your customers happier and your products better overall.

4. Increasing Confidence in the Final Product
Inspection and validation techniques let you place more trust in the end result. This is especially important in fields such as medicine and aviation, where dependability is critical. Testing and validation confirm that your products meet the right standards and specifications and work as expected in different situations. This can help you meet client needs, earn industry certifications, and build a stronger reputation as a reliable provider.

How Can You Implement Inspection and Validation in Your Additive Manufacturing Process?

1. Develop a Quality Control Plan
Your additive manufacturing process needs a quality control plan that includes inspection and validation steps. This plan should state what will be checked and how validation tests will be performed. It should also specify the acceptable tolerance for each specification being tested. A good quality control plan helps ensure that everyone who works on the part knows what it is supposed to do.

2. Invest in the Right Equipment
Inspection and validation procedures only work if you have the right tools.
This equipment includes measurement tools, test equipment, and software. Choose it based on how your production process works and how precise it needs to be. For example, you might need a coordinate measuring machine (CMM) if you must verify part dimensions precisely.

3. Train Your Team
Train your staff to use the equipment and to follow the same inspection and validation steps every time. The training should cover the contents of the quality control plan, the tools and equipment used, and the correct way to carry out inspections and validation tests. It should also cover what to do when a part does not meet the requirements, such as documenting the problem and fixing it.

Conclusion

Inspection and validation are two important steps that make additive manufacturing more reliable. By catching mistakes early, keeping quality consistent, looking for ways to improve, and gaining more confidence in the end result, you can make your 3D printing more reliable. Do you want to use additive manufacturing more effectively? Prescient Technologies can help! Our software development services could completely change how you use 3D printing and other additive manufacturing methods. So, make a quality control plan, get the right tools, and train your staff. Then you'll be on your way to better 3D-printed products.
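The quality control plan described above (what to check, the acceptable deviation for each specification, and what to record when a part fails) maps naturally onto a small data check. The Python sketch below is illustrative only: the feature names, nominal dimensions, and tolerances are hypothetical, symmetric plus/minus tolerances are assumed, and the measured values are assumed to come from a CMM or other gauge.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Spec:
    feature: str
    nominal_mm: float
    tolerance_mm: float  # symmetric +/- limit taken from the QC plan (hypothetical)


def inspect_part(specs: List[Spec], measurements: Dict[str, float]) -> List[str]:
    """Compare measurements with the QC plan and return non-conformance records."""
    records = []
    for spec in specs:
        measured = measurements.get(spec.feature)
        if measured is None:
            records.append(f"{spec.feature}: not measured")
            continue
        deviation = measured - spec.nominal_mm
        if abs(deviation) > spec.tolerance_mm:
            records.append(
                f"{spec.feature}: measured {measured:.3f} mm, deviation "
                f"{deviation:+.3f} mm exceeds +/-{spec.tolerance_mm:.3f} mm"
            )
    return records


QC_PLAN = [
    Spec("bore_diameter", 10.0, 0.10),
    Spec("overall_length", 50.0, 0.20),
    Spec("wall_thickness", 2.0, 0.15),
]

for record in inspect_part(QC_PLAN, {"bore_diameter": 10.05, "overall_length": 50.35}):
    print(record)
```

Here the bore is within tolerance, the overall length is out by +0.350 mm, and the wall thickness was never measured, so two records are produced. An empty list means the part passes; each record is the kind of entry the plan's non-conformance procedure would log before the problem is fixed.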

Designing For Additive Manufacturing: Best Practices For Successful Product Development

Table of Contents
Top 9 Best Practices for Successful Product Development
Conclusion

Top 9 Best Practices for Successful Product Development

1. Start with a thorough understanding of the technology
Additive manufacturing has changed how complex parts are made, making it possible to produce structures that were once impossible to make. But to create good designs for 3D printing, you need to know how the technology works. For example, you need to know the build volume, resolution, and precision of each 3D printing technology and choose the right materials for your design. You also need to think about how the parts will be oriented and supported during printing. By considering these factors, you can create designs that work well with the technology and take advantage of all its capabilities.

2. Optimise parts for the build process
The printing process comes with its own challenges, which you should account for in the design. For example, you have to think about how parts are arranged on the build plate so that they are properly supported during printing.

3. Reduce material usage
3D printing has many advantages over traditional manufacturing methods, including the ability to use less material. By designing parts that use less material, you can make products that are more environmentally friendly and generate less waste. One way to do this is to use lattice structures, which are strong yet use less material. Incorporating these structures into designs can help produce parts that are light but strong, functional, and long-lasting.

4. Consider post-processing requirements
When a 3D print is finished, it may need to be cleaned or finished. This is called "post-processing". Keep these needs in mind when designing, since they can make production take longer and cost more. By designing parts that are easy to clean and polish, you can save time and money during post-processing.
Also, components designed with as few support structures as possible may need less post-processing.

5. Optimise for material properties
When designing parts for 3D printing, you need to consider how the materials behave. During printing, for example, some materials are more prone to warping or distortion, while others are more prone to breaking or cracking. If the design suits the properties of the chosen material, the parts will be strong and functional.

6. Use design software that supports 3D printing
Design software is a key part of the design for additive manufacturing (DfAM) process. Software made specifically for 3D printing helps you create designs that get the most out of the technology. These tools can help you find problems, such as areas that might need extra support, and suggest how to fix them.

7. Incorporate tolerances
Tolerances are the permitted deviations from a given dimension, and designers must account for them when designing for additive manufacturing. Because the accuracy and precision of 3D printing processes vary, designing with tolerances in mind helps avoid problems such as parts that fit too tightly or too loosely. Tolerances in the design also help ensure that parts fit together correctly and work as intended.

8. Collaborate with experienced 3D printing partners
Working with people who already have 3D printing experience can help you improve your designs. These partners can tell you what you can and cannot do with 3D printing technology, and show you ways to make your printing more effective and efficient.

9. Test and iterate
Testing and iterating are important parts of making a good product, and they are even more important when designing for additive manufacturing. Because 3D printing technologies are constantly changing, it is important to keep up with the latest developments and keep testing and improving designs.
With 3D printing, prototypes can be modified and tested quickly, so design flaws can be found and fixed before the product goes into production.

Conclusion

Designing for additive manufacturing requires a change in how you think about design. To achieve efficient and effective production, you must account for 3D printing's unique strengths and weaknesses. By following the best practices in this article, you can make sure your parts are well suited to 3D printing. This will lead to faster production, less material waste, and more environmentally friendly products. If you want to elevate your additive manufacturing processes and stay ahead in a competitive market, Prescient Technologies can be your go-to partner. With our state-of-the-art software development services, we can help you achieve revolutionary results and improve how you use 3D printing and other additive manufacturing techniques. Get in touch with us today and take your product development to the next level.
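Practices 1, 2, and 6 all involve knowing which surfaces will need support during printing. Below is a minimal Python sketch of the kind of check DfAM software can perform, under stated assumptions: each face is represented only by its outward normal vector, +z is the build direction, and a downward-facing surface is treated as self-supporting if it is inclined at least 45° from horizontal. The 45° threshold is a commonly quoted rule of thumb, not a universal value; the right limit depends on the process, material, and machine. Real tools work on full meshes (for example STL triangles) and also exempt faces resting on the build plate, which this sketch does not model.

```python
import math
from typing import NamedTuple, Tuple


class Face(NamedTuple):
    name: str
    normal: Tuple[float, float, float]  # outward normal; +z is the build direction


def needs_support(face: Face, min_self_supporting_deg: float = 45.0) -> bool:
    """Flag downward-facing surfaces inclined less than the threshold from horizontal."""
    nx, ny, nz = face.normal
    nz /= math.sqrt(nx * nx + ny * ny + nz * nz)  # use the unit normal's z-component
    if nz >= 0.0:
        return False  # vertical or upward-facing surfaces print without support
    # For a downward-facing surface, its angle from horizontal equals the angle
    # between its normal and the -z axis.
    angle_from_horizontal = math.degrees(math.acos(min(1.0, -nz)))
    return angle_from_horizontal < min_self_supporting_deg


faces = [
    Face("flat ceiling", (0.0, 0.0, -1.0)),
    Face("shallow 30-degree overhang", (0.5, 0.0, -math.sqrt(3) / 2)),
    Face("steep 60-degree overhang", (math.sqrt(3) / 2, 0.0, -0.5)),
    Face("vertical wall", (1.0, 0.0, 0.0)),
    Face("top surface", (0.0, 0.0, 1.0)),
]
for face in faces:
    print(f"{face.name}: {'support needed' if needs_support(face) else 'self-supporting'}")
```

Overhang angles in the face names are measured from horizontal, so the flat ceiling and the shallow 30° overhang are flagged while the steep 60° overhang, the wall, and the top surface are not. Reorienting the part on the build plate changes every face's angle, which is why orientation and support planning go together.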
