EMOTION AI – A BOON FOR THE FUTURE!

September 3, 2021

By Pruthviraj Jadhav

Abstract

Artificial Intelligence is the talk of the tech town. The capabilities AI can exhibit are breaking all sorts of boundaries. Some AI projects can create realistic images, others can bring still images to life, and some can mimic voices. Surveillance-based AI can anticipate how events might unfold in a workplace and even analyze employees from recorded footage. (To learn more about smart surveillance, visit www.inetra.ai.)

This blog discusses a special generation of AI: systems that can identify human behavior.

We are talking about Social and Emotion AI, a recent addition to the computing literature. Emotion AI encompasses the AI domains concerned with the automatic analysis and synthesis of human behavior, focusing primarily on human-human and human-machine interactions.

A report on “opportunities and implications of AI” by the UK Government Office for Science states, “tasks that are difficult to automate will require social intelligence.”

The Oxford Martin Program on the Impacts of Future Technology states, “the next wave of computerization will work on overcoming the engineering bottlenecks pertaining to creative and social intelligence.”

What is Emotion AI?

Emotion AI is the detection and evaluation of human emotions with the help of artificial intelligence, using sources such as video (facial movements, physiological signals), audio (voice emotion AI), and text (natural language and sentiment).
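Because Emotion AI draws on several sources at once, a system has to combine them somehow. One common approach is late fusion: each modality produces its own emotion probabilities, which are then averaged with per-modality weights. The sketch below assumes that approach; the emotion labels, weights and scores are hypothetical, and real systems may instead fuse features earlier or learn the weights from data.

```python
from typing import Dict

# Hypothetical label set; real systems use their own emotion taxonomy.
EMOTIONS = ("happy", "sad", "angry", "neutral")

def fuse_modalities(scores: Dict[str, Dict[str, float]],
                    weights: Dict[str, float]) -> Dict[str, float]:
    """Late fusion: weighted average of per-modality emotion probabilities.

    `scores` maps a modality ("video", "audio", "text") to a probability
    distribution over EMOTIONS; `weights` holds a hypothetical reliability
    per modality. Modalities without a weight contribute nothing.
    """
    fused = {e: 0.0 for e in EMOTIONS}
    total_weight = 0.0
    for modality, dist in scores.items():
        w = weights.get(modality, 0.0)
        total_weight += w
        for e in EMOTIONS:
            fused[e] += w * dist.get(e, 0.0)
    if total_weight == 0:
        raise ValueError("no weighted modality available")
    # Normalize so the fused scores again sum to 1.
    return {e: v / total_weight for e, v in fused.items()}

example = {
    "video": {"happy": 0.6, "sad": 0.1, "angry": 0.1, "neutral": 0.2},
    "audio": {"happy": 0.3, "sad": 0.2, "angry": 0.3, "neutral": 0.2},
    "text":  {"happy": 0.7, "sad": 0.1, "angry": 0.0, "neutral": 0.2},
}
fused = fuse_modalities(example, {"video": 0.5, "audio": 0.3, "text": 0.2})
print(max(fused, key=fused.get))  # happy
```

Here the voice alone leans slightly towards anger, but the face and the words outweigh it, which is exactly the kind of cross-checking that combining sources allows.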

While humans can understand and read emotions more readily than machines, machines can quickly analyze large amounts of data, for example recognizing signs of stress or anger in a voice. Machines can also learn from fine details on human faces (micro-expressions) that occur too quickly for people to notice.

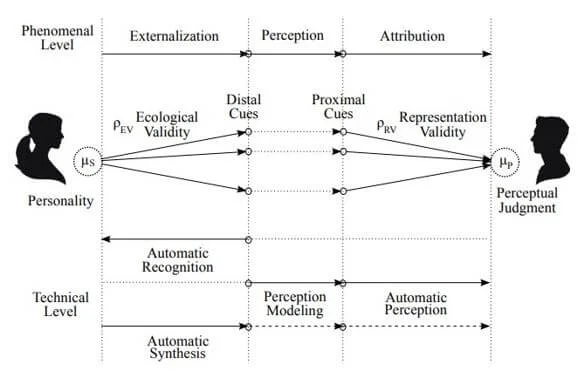

The Brunswik Lens Model

Let’s have a look at Fig. 1. The person on the left is characterized by an inner state µS that is externalized through observable distal cues. The person on the right perceives these as proximal cues, which stimulate the attribution of an inner state µP (the perceptual judgment) to the person on the left.

From a technological perspective, the following tasks are possible –

- The recognition of the inner state (mapping distal cues into the inner state µS).

- The perception (predicting the judgment µP that an observer will make from the proximal cues).

- The synthesis (generating artificial cues that lead an observer to attribute a given inner state).

The Brunswik Lens model is used to model human-human and human-machine interactions and their emotional aspects. It is a conceptual model with two states: the inner state and the outer state. The outer state is easily visible to the observer but not very conclusive. The inner state is not directly observable, but it leaves physical traits (behavior, language, and physiological changes) that are used to infer it, not always correctly.

For example, a happy person might shed tears of joy, yet an observer may take those tears as a sign of grief.
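The lens can be simulated to see why perception does not always land on the correct inner state. In the toy sketch below, an inner state µS is expressed through three distal cues, the observer sees noisier proximal cues and weights them into a judgment µP, and the correlation between µS and µP (called achievement in lens-model studies) measures how accurate the perception is. All cue strengths, observer weights and noise levels are hypothetical assumptions, not values from the article.

```python
import math
import random

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)

# Hypothetical: how strongly the inner state shows up in each distal cue.
EXPRESSION = [0.9, 0.5, 0.1]

def simulate_lens(utilization, n=2000, seed=0):
    """Toy Brunswik lens: µS -> distal cues -> proximal cues -> judgment µP.

    `utilization` is how much the observer relies on each proximal cue.
    Returns the achievement r(µS, µP).
    """
    rng = random.Random(seed)
    mu_s, mu_p = [], []
    for _ in range(n):
        s = rng.gauss(0, 1)                                       # inner state µS
        distal = [w * s + rng.gauss(0, 0.5) for w in EXPRESSION]  # externalized cues
        proximal = [d + rng.gauss(0, 0.3) for d in distal]        # cues as perceived
        p = sum(u * c for u, c in zip(utilization, proximal))     # judgment µP
        mu_s.append(s)
        mu_p.append(p)
    return pearson(mu_s, mu_p)

# An observer who leans on the weakest cue vs. one who reads the strongest cue.
misled = simulate_lens([0.2, 0.3, 0.8])
attuned = simulate_lens([0.8, 0.3, 0.0])
print(f"misled observer:  r = {misled:.2f}")
print(f"attuned observer: r = {attuned:.2f}")
```

The same inner state and the same cues yield very different accuracy depending on which cues the observer relies on, which is the tears-of-joy problem in numerical form.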

These physical traits can be converted into data suitable for computer processing and thus find their place in AI. The Brunswik Lens also covers another aspect of Emotion AI: the capability to synthesize observable traits that activate the same attribution processes in a human observer as the traits displayed by another human.

For example, when an artificial face displays a smile, humans tend to believe that the machine is happy, even though artificial entities cannot actually experience the emotion they display.

People can tell humans and machines apart at a conscious, higher level, but not at a deeper level where some processes occur outside their awareness. In other words, humans react to machines much as they react to other humans. Therefore, human-human interaction is a prime source of investigation for the development of human-computer interaction.

How does Emotion AI work?

Emotion AI isn’t limited to voice. It uses the following kinds of analysis –

- Sentiment analysis – It measures and detects the emotional content of text samples, from small fragments to large samples. It is a natural language processing method and can be used in marketing, product review analysis, recommendation, finance, etc.

- Video signals – This includes facial expression analysis.

- Gait and physiological signals – Video can also be used for gait analysis and for gleaning physiological signals such as heart rate and respiration, without any contact, using cameras under ideal conditions.
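A lexicon-based scorer is the simplest form of the sentiment analysis described above: look each word up in a sentiment dictionary, flip the sign after a simple negation, and sum. The tiny lexicon and negator list below are hypothetical; production systems use large curated lexicons or trained language models.

```python
import re

# Tiny hypothetical lexicon: word -> sentiment weight.
LEXICON = {"good": 1.0, "great": 2.0, "love": 2.0, "happy": 1.5,
           "bad": -1.0, "terrible": -2.0, "hate": -2.0, "angry": -1.5}
NEGATORS = {"not", "never", "no"}

def sentiment_score(text: str) -> float:
    """Sum lexicon weights, flipping the sign of a word preceded by a negator."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0.0
    for i, tok in enumerate(tokens):
        value = LEXICON.get(tok, 0.0)
        if value and i > 0 and tokens[i - 1] in NEGATORS:
            value = -value
        score += value
    return score

def label(text: str) -> str:
    """Map the numeric score to a coarse sentiment label."""
    s = sentiment_score(text)
    return "positive" if s > 0 else "negative" if s < 0 else "neutral"

print(label("I love this phone, the camera is great"))  # positive
print(label("The battery is not good"))                 # negative
```

Even this crude rule shows why negation matters for product reviews: without it, "not good" would be counted as praise.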

Social media giant Facebook introduced the Reactions feature to gain insights and data regarding users’ responses to various images.

Emotion AI needs user-generated data, such as videos or phone calls, to evaluate and compare reactions to certain stimuli. Machine learning can then turn such large quantities of data into patterns for recognizing human emotion and behavior. With the high computational capacity of machines, Emotion AI can capture users’ emotional reactions in greater detail.
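As a minimal illustration of turning labelled data into recognition patterns, the sketch below uses a nearest-centroid classifier, one of the simplest machine-learning methods: it averages the training examples of each emotion and assigns a new sample to the closest average. The two acoustic features (mean pitch and loudness), the labels and all numbers are hypothetical; a real system would use many more features, normalize their scales, and typically a stronger model.

```python
from math import dist  # Euclidean distance, Python 3.8+

# Hypothetical labelled data: (mean pitch in Hz, loudness in dB) per voice clip.
TRAINING = {
    "calm":     [(120, 55), (130, 58), (125, 54)],
    "stressed": [(210, 72), (220, 75), (205, 70)],
}

def centroids(data):
    """Average the feature vectors of each label (the learned 'pattern')."""
    return {label: tuple(sum(c) / len(c) for c in zip(*points))
            for label, points in data.items()}

def classify(sample, cents):
    """Assign the label whose centroid is nearest to the sample."""
    return min(cents, key=lambda label: dist(sample, cents[label]))

cents = centroids(TRAINING)
print(classify((200, 69), cents))  # stressed
```

More data sharpens the centroids, which is why Emotion AI depends on large volumes of user-generated recordings.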

Oliver API

Oliver API is an application programming interface: a set of programming frameworks for introducing Emotion AI into computer applications. It supports real-time and batch audio processing and offers a wide array of emotional and behavioral metrics. It can support large applications and comes with clear documentation. SDKs are available in several languages (JavaScript, Python, Java), along with examples that help programmers understand its operation quickly.

Oliver API can evaluate different modalities through which humans express emotions, such as voice tone, choice of words, engagement, and accent. This data can be processed to produce responses and reactions that mimic empathy. The aim of Emotion AI here is to give users a human-like interaction.
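The sketch below is not Oliver’s SDK; it is a generic illustration of the difference between batch and real-time audio processing. It computes a simple per-frame loudness measure (RMS energy, a stand-in for a real emotional metric) once over a whole recording, and again over audio arriving in arbitrarily sized chunks. The frame size and sample values are hypothetical.

```python
import math
from typing import Iterable, Iterator, List

FRAME = 4  # samples per analysis frame (tiny, for illustration)

def rms(frame: List[float]) -> float:
    """Root-mean-square energy of one frame."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))

def batch_rms(samples: List[float]) -> List[float]:
    """Batch mode: the whole recording is available up front."""
    return [rms(samples[i:i + FRAME])
            for i in range(0, len(samples) - FRAME + 1, FRAME)]

def stream_rms(chunks: Iterable[List[float]]) -> Iterator[float]:
    """Real-time mode: audio arrives in chunks of arbitrary size;
    emit a metric as soon as a full frame has been buffered."""
    buffer: List[float] = []
    for chunk in chunks:
        buffer.extend(chunk)
        while len(buffer) >= FRAME:
            yield rms(buffer[:FRAME])
            del buffer[:FRAME]

samples = [0.0, 1.0, 0.0, -1.0, 0.5, 0.5, -0.5, -0.5]
chunks = [samples[:3], samples[3:7], samples[7:]]  # uneven network packets
print(batch_rms(samples))
print(list(stream_rms(chunks)))
```

Both modes produce the same per-frame values; the streaming version simply trades a small buffer for the ability to report results while the call is still in progress.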

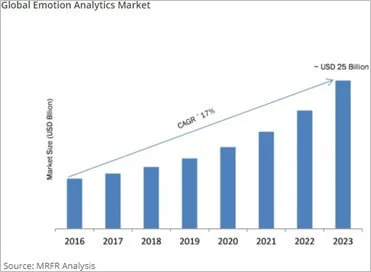

Industry predictions –

- Global Emotion AI: According to Tractica, the global Emotion AI market will grow from USD 123 million in 2017 to USD 3.8 billion in 2025.

- Social Robotics: The revenues of the worldwide robotics industry were USD 28.3 billion in 2015 and are expected to reach USD 151.7 billion in 2022.

- Conversational Agents: The global market for Virtual Agents (including products like Amazon Alexa, Apple Siri, or Microsoft Cortana) will reach USD 3.6 billion by 2022.

- Global chatbot market: Valued at around USD 369.79 million in 2017, the market is expected to reach approximately USD 2.16 billion in 2024.
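To put the forecasts above on a common scale, the implied compound annual growth rate (CAGR) can be computed as (end / start)^(1 / years) − 1. The sketch below applies that formula to the article’s own figures; it only restates the forecasts and adds no new data.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by a start and an end value."""
    return (end / start) ** (1 / years) - 1

# (start value in USD, end value in USD, number of years) from the forecasts.
forecasts = {
    "Emotion AI (2017-2025)": (123e6, 3.8e9, 8),
    "Robotics (2015-2022)": (28.3e9, 151.7e9, 7),
    "Chatbots (2017-2024)": (369.79e6, 2.16e9, 7),
}
for name, (start, end, years) in forecasts.items():
    print(f"{name}: {cagr(start, end, years):.1%} per year")
```

On these figures the implied growth is roughly 53.5% per year for Emotion AI, 27.1% for robotics, and 28.7% for chatbots. The Virtual Agents forecast is left out because no starting value is given.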

Applications –

Medical diagnosis – For conditions such as depression and dementia, where understanding emotions matters, voice analysis software can be beneficial.

Education – Education software adapted with Emotion AI could recognize a child’s emotions and frustration levels and adjust the complexity of tasks accordingly.
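The adjustment described for education can be sketched as a simple rule: lower the difficulty when estimated frustration is high, raise it when frustration is low, and otherwise leave it alone. The frustration score in [0, 1] is assumed to come from some emotion-recognition model, and the thresholds and level range are hypothetical tuning parameters.

```python
def next_difficulty(level: int, frustration: float,
                    low: float = 0.3, high: float = 0.7,
                    min_level: int = 1, max_level: int = 10) -> int:
    """Adjust task difficulty from an estimated frustration score in [0, 1]."""
    if not 0.0 <= frustration <= 1.0:
        raise ValueError("frustration must be in [0, 1]")
    if frustration > high:
        level -= 1          # struggling: make the next task easier
    elif frustration < low:
        level += 1          # comfortable: raise the challenge
    return max(min_level, min(max_level, level))

print(next_difficulty(5, 0.85))  # 4
print(next_difficulty(5, 0.10))  # 6
print(next_difficulty(1, 0.90))  # 1 (already at the easiest level)
```

The dead band between the two thresholds keeps the difficulty from flickering up and down on small changes in the estimate.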

Employee safety – As demand for employee safety solutions rises, Emotion AI can help analyze stress and anxiety levels.

Health care – Emotion AI-enabled bots can remind older patients about their medications and monitor their everyday well-being.

Car safety – With the help of computer vision, the driver’s emotional state can be analyzed to generate alerts for safety and protection.
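For car safety, alerting on a single video frame would be noisy: one blink or grimace can look like drowsiness or anger. A minimal sketch, assuming a hypothetical per-frame driver-state score in [0, 1] produced by a computer-vision model, raises an alert only when most of the recent frames agree. The window size and thresholds are illustrative assumptions.

```python
from collections import deque

class DriverStateMonitor:
    """Alert only when a per-frame risk score (e.g. estimated drowsiness or
    anger, from a hypothetical vision model) stays high across most of a
    sliding window, so a single blink or grimace does not trigger it."""

    def __init__(self, window: int = 30, threshold: float = 0.7,
                 min_fraction: float = 0.8):
        self.scores = deque(maxlen=window)  # keeps only the latest frames
        self.threshold = threshold
        self.min_fraction = min_fraction

    def update(self, score: float) -> bool:
        """Add the newest frame score; return True if an alert should fire."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough history yet
        high = sum(1 for s in self.scores if s >= self.threshold)
        return high / len(self.scores) >= self.min_fraction

monitor = DriverStateMonitor(window=5)
frames = [0.1, 0.9, 0.1, 0.1, 0.1, 0.9, 0.9, 0.9, 0.9]
alerts = [monitor.update(s) for s in frames]
print(alerts.index(True))  # 8: only the sustained run triggers an alert
```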

Other applications include autonomous cars, fraud detection, retail marketing, and many more.

Conclusion –

Emotions are a giveaway of who we are at any given moment. They affect all facets of our intelligence and behavior at both the individual and group levels. Emotion AI helps us understand people and offers a new perspective for redefining traditional processes and products. In the future, it can boost businesses and become a beneficial tool in the medical, automobile, safety, and marketing domains. Decoding emotions, the fundamental quality that makes us human, and re-coding them into machines will thus be a boon to future generations.

References –

- https://www.aitrends.com/category/emotion-recognition/page/2/

- Perepelkina O., Vinciarelli A. (2019), Social and Emotion AI: The Potential for Industry Impact, IEEE 8th International Conference on Affective Computing and Intelligent Interaction Workshops and Demos (ACIIW), Cambridge, United Kingdom.

- https://oliver.readme.io

- https://www.acrwebsite.org/volumes/6224/volumes/v11/NA-11

- https://mitsloan.mit.edu/ideas-made-to-matter/emotion-ai-explained

- https://dmexco.com/stories/emotion-ai-the-artificial-emotional-intelligence

- Brunswik E. (1956), Perception and the representative design of psychological experiments, University of California Press

- https://www.marketresearchfuture.com/reports/emotion-analytics-market-5330