Optimization Problems and Techniques

Table of contents: Optimization Problems; Linear and Quadratic programming; Types of Optimization Techniques

In mathematics and computer science, an optimization problem is the problem of finding the best solution out of all feasible solutions.

Using optimization to solve design problems provides unique insights into situations. The model can compare the current design to the best possible one and includes information about limitations and the implied costs of arbitrary rules and policy decisions. A well-designed optimization model can also aid in what-if analysis, revealing where improvements can be made or where trade-offs may be needed. The application of optimization to engineering problems spans multiple disciplines.

Optimization techniques fall into different categories, including statistical techniques and probabilistic methods. In each case, a mathematical algorithm evaluates a set of candidate solutions and chooses the best one. The problem domain is specified by constraints, such as the range of possible values for a variable. Finding the optimum requires evaluating the objective function at candidate solutions. An optimal solution has the smallest achievable error or cost; that minimum is not necessarily zero.

Optimization Problems

There are different types of optimization problems. A few simple ones do not require formal optimization, such as problems with obvious answers or with no decision variables. In most cases, however, a mathematical solution is necessary, and the goal is to achieve optimal results. A typical objective is to minimize cost or risk. A problem can also be multi-objective and involve several decisions. An optimization problem has three main elements: an objective, variables, and constraints.
Each variable can take different values, and the aim is to find the optimal value for each one. The objective is the desired result or goal of the problem. Let us walk through the various kinds of optimization problem, depending on how these elements vary.

Continuous Optimization versus Discrete Optimization

Models with discrete variables are discrete optimization problems, while models with continuous variables are continuous optimization problems. Continuous optimization problems are generally easier to solve than discrete optimization problems. A discrete optimization problem looks for an object such as an integer, permutation, or graph from a countable set. However, with improvements in algorithms coupled with advancements in computing technology, the size and complexity of discrete optimization problems that can be solved efficiently has increased. Note that continuous optimization algorithms are essential in discrete optimization, because many discrete optimization algorithms generate a series of continuous sub-problems.

Unconstrained Optimization versus Constrained Optimization

An essential distinction is between problems that have no constraints on the variables and problems that do. Unconstrained optimization problems arise directly in many practical applications and also in the reformulation of constrained optimization problems. Constrained optimization problems appear in applications with explicit constraints on the variables. They are further divided according to the nature of the constraints (linear, nonlinear, convex) and the smoothness of the functions (differentiable or non-differentiable).

None, One, or Many Objectives

Although most optimization problems have a single objective function, some have no objective function (the goal is only to find a feasible solution) and others have multiple objective functions.
Multi-objective optimization problems arise in engineering, economics, and logistics. Often, problems with multiple objectives are reformulated as single-objective problems.

Deterministic Optimization versus Stochastic Optimization

In deterministic optimization, the data for the given problem is known accurately. Sometimes, however, the data cannot be known precisely. A simple measurement error can be one reason. Another is that some data describe the future and hence cannot be known with certainty. When this uncertainty is incorporated into the model, the problem is called stochastic optimization.

Two important classes of optimization problems are:

Linear Programming: In linear programming (LP) problems, the objective and all of the constraints are linear functions of the decision variables. As all linear functions are convex, linear programming problems are inherently easier to solve than nonlinear problems.

Quadratic Programming: In a quadratic programming (QP) problem, the objective is a quadratic function of the decision variables, and the constraints are all linear functions of the variables. A widely used quadratic programming problem is the Markowitz mean-variance portfolio optimization problem. The objective is the portfolio variance, and the linear constraints dictate a lower bound for portfolio return.

Linear and Quadratic programming

Optimization is a way of life. We all want to make the most of our available time and make it productive. Optimization finds its use everywhere from time management to supply chain problems. As noted above, optimization refers to finding the best possible solution out of all feasible solutions. Two of its main branches are linear programming and quadratic programming. Let us take a walkthrough.
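To make the linear programming definition concrete, here is a minimal sketch in Python. It assumes a problem with only two decision variables and a bounded feasible region, and it relies on the standard fact that a bounded LP attains its optimum at a vertex of that region. The function name `solve_lp_2d` and the sample numbers are illustrative assumptions, not taken from the article; real solvers use methods such as simplex or interior-point algorithms instead of enumeration.

```python
from itertools import combinations


def solve_lp_2d(objective, constraints, eps=1e-9):
    """Maximize objective[0]*x + objective[1]*y subject to a*x + b*y <= c
    for every (a, b, c) in constraints.

    Illustrative only: enumerates the intersections of constraint
    boundaries and keeps the best feasible one. Assumes a bounded,
    non-empty feasible region.
    """
    best_point, best_value = None, float("-inf")
    for (a1, b1, c1), (a2, b2, c2) in combinations(constraints, 2):
        det = a1 * b2 - a2 * b1
        if abs(det) < eps:
            continue  # parallel boundaries never meet in a single point
        # Cramer's rule for the intersection of the two boundary lines
        x = (c1 * b2 - c2 * b1) / det
        y = (a1 * c2 - a2 * c1) / det
        # Keep the point only if it satisfies every constraint
        if all(a * x + b * y <= c + eps for a, b, c in constraints):
            value = objective[0] * x + objective[1] * y
            if value > best_value:
                best_point, best_value = (x, y), value
    return best_point, best_value


# Hypothetical problem: maximize 3x + 2y
# subject to x + y <= 4, x + 3y <= 6, x <= 3, x >= 0, y >= 0
constraints = [
    (1, 1, 4),
    (1, 3, 6),
    (1, 0, 3),
    (-1, 0, 0),  # x >= 0 written as -x <= 0
    (0, -1, 0),  # y >= 0 written as -y <= 0
]
point, value = solve_lp_2d((3, 2), constraints)
print(point, value)
```

The three elements named earlier are all visible here: the objective (3x + 2y), the variables (x and y), and the constraints (the list of inequalities).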
Linear Programming

Linear programming is a simple technique to find the best outcome or optimum point when the relationships in a problem are expressed as linear relationships. The actual relationships could be much more complicated, but they can often be simplified into linear ones. Linear programming is widely used in optimization for several reasons.

Quadratic Programming

Quadratic programming is the method of solving a particular kind of optimization problem: optimizing (minimizing or maximizing) a quadratic objective function subject to one or more linear constraints. Quadratic programming is a special case of nonlinear programming and is sometimes referred to by that name. The objective function in QP may carry bilinear terms or polynomial terms up to second order. The constraints are linear and can be both equalities and inequalities. Quadratic programming is also widely used in optimization.

Types of Optimization Techniques

There are many types of mathematical and computational optimization techniques. An essential step is to categorize the optimization model, since the algorithms used for solving optimization problems are tailored to the nature of the problem. Integer programming, for example, is a form of mathematical programming. Problems of this kind can be traced back to Archimedes, who described the problem of determining the composition of a herd of cattle. Advances in computational codes and theoretical research

What is Digital Image Processing

Table of contents: Types of Image Processing; Digital Image Processing and how it operates; Uses of Digital Image Processing

Previously we have learned what visual inspection is and how it helps in inspection checks and quality assurance of manufactured products. Vision-based inspection relies on a specific technology called Digital Image Processing. Before getting into what it is, we need to understand the essential term Image Processing.

Image processing is a technique for carrying out a particular set of actions on an image to obtain an enhanced image or extract some valuable information. It is a kind of signal processing where the input is an image, and the output may be an improved image or characteristics/features associated with it. The input is either a photograph or a video frame, and these images are received as two-dimensional signals.

Image processing involves three steps:

Image acquisition: Acquisition can be made via image capturing tools like an optical scanner or with digital photos.

Image enhancement: Once the image is acquired, it must be processed. Image enhancement includes cropping, enhancing, restoring, and removing glare or other elements. For example, image enhancement reduces signal distortion and clarifies fuzzy or poor-quality images.

Image extraction: Extraction involves extracting individual image components, producing a result where the output can be an altered image. The process is necessary when an image has a specific shape and requires a description or representation. The image is partitioned into separate areas and labeled with relevant information. It can also create a report based on the image analysis.

The basic principle of image processing is that a sensor records light (electromagnetic radiation) as an array of samples. A complete image is formed by combining many individual pixels. These pixels represent different regions of the image.
This information helps the computer detect objects and determine the appropriate resolution. Applications of image processing include video processing. Because videos are composed of a sequence of separate images, motion detection is a vital video processing component. Image processing is essential in many fields, from photography to satellite imagery. This technology improves subjective image quality and aims to make subsequent image recognition and analysis easier. Depending on the application, image processing can change image resolutions and aspect ratios and remove artifacts from a picture. Over the years, image processing has become one of the most rapidly growing technologies within engineering and computer science.

Types of Image Processing

Image processing includes two types of methods:

Analogue image processing: Generally, analogue image processing is used for hard copies like photographs and printouts. Image analysts use various facets of interpretation while using these visual techniques.

Digital image processing: Digital image processing methods help in manipulating and analyzing digital images. In addition to improving and encoding images, digital image processing allows users to extract useful information and save it in various formats.

This article primarily discusses digital image processing techniques and their various phases.

Digital Image Processing and how it operates

Digital image processing requires computers to convert images into digital form and then process them. It subjects numerical representations of images to a series of operations to obtain the desired result. This may include image compression, digital enhancement, or automated classification of targets. Digital images are composed of pixels, which have discrete numeric representations of intensity. They are fed into the image processing system using spatial coordinates.
To be usable, digital images must be stored in a format compatible with digital computers. The primary advantages of digital image processing methods lie in their versatility, repeatability, and the preservation of original data.

In a digital color image, each pixel carries its own color value. The computer can recognize the differences between colors by looking at their hue, saturation, and brightness. It can then reduce that data using a process called grayscaling. In a nutshell, grayscaling turns the RGB values of a pixel into one value. As a result, the amount of data per pixel decreases, and the image becomes more compact and easier to process.

Cost targets often limit the technology that is used to process digital images. Thus, engineers must develop efficient algorithms while minimizing the resources consumed. All digital image processing applications begin with illumination, and it is crucial to understand that if the lighting is poor, the software will not be able to recover the lost information. That is why it is best to involve a professional for these applications. High-performance digital image processing applications may also call for low-level programming skills, such as a good command of assembly language.

Images are captured in a two-dimensional space, and a digital image processing system analyzes that data using different algorithms to generate output images. Digital image processing proceeds in a few basic steps. The first step is image acquisition, and the second step is enhancing and restoring the image. The final step is to transform the image into a color image. Once this process is complete, the image is converted into a digital file.

Thresholding is a widely used image segmentation process. This method is often used to segment an image into an object (foreground) and a background. To do this, a threshold value is chosen so that the pixels of the object fall above or below it.
The threshold value is usually fixed, but in many cases it is best computed from the image statistics and neighbourhood operations. Thresholding produces a binary image that contains black and white only, with no shades of gray in between.

Digital image processing involves different methods, which are as follows:

Image Editing: Changing or altering digital images using graphic software tools.

Image Restoration: Processing a corrupted image to recover a clean original and regain the lost information.

Independent Component Analysis: Separating a mixed signal computationally into additive subcomponents.

Anisotropic Diffusion: Reducing image noise without removing essential portions of the image.

Linear Filtering: Processing input signals with a linear operation to generate output signals.

Neural Networks: Neural networks
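The grayscaling and thresholding steps described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions: images are nested lists of `(r, g, b)` tuples with values 0 to 255, grayscale is a simple channel average (weighted luminance formulas are also common), and the helper names `to_grayscale`, `mean_threshold`, and `threshold` are hypothetical.

```python
def to_grayscale(rgb_image):
    """Collapse each (r, g, b) pixel into one intensity by averaging channels."""
    return [[(r + g + b) // 3 for (r, g, b) in row] for row in rgb_image]


def mean_threshold(gray_image):
    """Compute a threshold from image statistics: here, the mean intensity."""
    values = [v for row in gray_image for v in row]
    return sum(values) / len(values)


def threshold(gray_image, t):
    """Produce a binary image: 255 (white) above the threshold, 0 (black) otherwise."""
    return [[255 if v > t else 0 for v in row] for row in gray_image]


# A tiny hypothetical 2x2 color image
image = [
    [(255, 255, 255), (10, 20, 30)],
    [(200, 100, 0), (0, 0, 0)],
]
gray = to_grayscale(image)          # one value per pixel instead of three
t = mean_threshold(gray)            # threshold derived from the image itself
binary = threshold(gray, t)         # only black and white remain
print(gray, t, binary)
```

A fixed threshold can be passed to `threshold` directly; deriving it from the mean is just one simple example of computing it from image statistics.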

Image Processing Algorithms based on usage

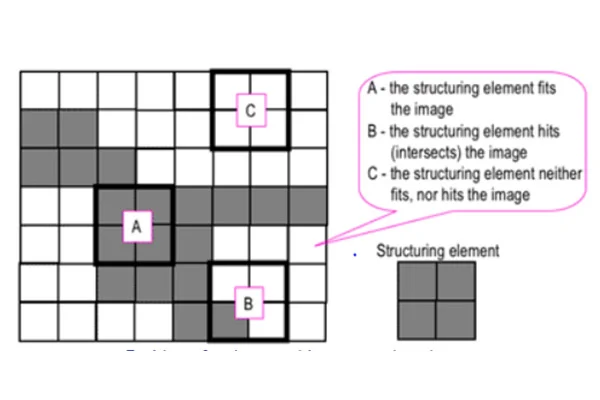

There are many ways to process an image, but they all follow a similar pattern. First, each pixel’s red, green, and blue intensities are extracted. A new pixel is created from these intensities and inserted into a new, empty image at the same location as in the original. Grayscale pixels are created by averaging the red, green, and blue intensities of each pixel. Afterward, they can be converted to black or white using a threshold.

Edge Detection

The first thing to note about Canny edge detectors is that they are not substitutes for the human eye. The Canny operator is used to detect edges in many image processing algorithms. In this example, the edge detector uses a threshold value of 80. The original version of the algorithm performs double thresholding and edge tracking through hysteresis. During double thresholding, edge pixels are classified as strong or weak. Strong edges have a gradient value above the high threshold, while weak edges fall between the two thresholds. The next phase of the algorithm searches for all connected components and keeps as final edges those that contain at least one strong edge pixel.

Other improvements to the Canny edge detector concern its architecture and computational efficiency. The distributed Canny edge detector algorithm proposes a new block-adaptive threshold selection procedure that exploits local image characteristics. The resulting implementation is faster than the CPU implementation. The algorithm is more robust to block size changes, which allows it to support images of any size. A new implementation of the distributed Canny edge detector has been developed for FPGA-based systems.

Object localization

The performance of different image processing algorithms for object localization depends on the accuracy of the recognition. While the HOG and SIFT methods use the same dataset, region-based algorithms improve the detection accuracy by more than twofold. The region-based algorithms use a reference marker to enhance matching and edge detection.
They use the accurate coordinates in the image sequence to fine-tune the localization process. A geometry-based recognition method eliminates false targets, improves precision, and provides robustness. A ground test platform has been established and has improved object localization. It can detect an object with one-tenth of a pixel precision. This embedded platform can process an image sequence at 70 frames per second. These works were conducted to make the vision-based system more applicable in dynamic environments. However, the subpixel edge detection method is quite time-consuming and should only be used for fine operations.

Among the popular object detection methods, the Histogram of Oriented Gradients (HOG) was one of the earliest. HOG is a reasonable first choice in general environments, but it is time-consuming and inefficient when applied to tight spaces. It has decent accuracy for pedestrian detection. In addition to general applications, HOG is also suitable for object detection in video games.

YOLO is a popular object detection algorithm and model. It was first introduced in 2016, followed by versions v2 and v3, the latter in 2018. No new version appeared in 2019. Three releases followed in quick succession in 2020, including YOLO v4 and PP-YOLO. These versions can identify objects without using pre-processed images. The speed of these methods makes them popular.

Segmentation

There are various image processing algorithms available for segmentation. These algorithms use the features of the input image to divide the image into regions. A region is a group of pixels with similar properties. Usually, these algorithms use a seed point to start the segmentation process. The seed point may be a small area of the image or a larger region. Starting from the seed, the algorithm adds or removes neighboring pixels until the region meets other regions.
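The seed-based segmentation described above can be sketched as a simple region-growing routine. This is an illustrative sketch only: it assumes a grayscale image stored as a nested list of intensities, 4-connected neighbors, and a similarity rule that compares each pixel with the seed intensity. The name `region_grow` and the `tolerance` parameter are hypothetical choices, not part of any specific library.

```python
from collections import deque


def region_grow(image, seed, tolerance):
    """Grow a region from `seed` (row, col), adding 4-connected neighbors
    whose intensity differs from the seed intensity by at most `tolerance`.
    Returns the set of (row, col) coordinates in the region.
    """
    rows, cols = len(image), len(image[0])
    seed_value = image[seed[0]][seed[1]]
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and (nr, nc) not in region:
                # Similar pixels join the region and are expanded later
                if abs(image[nr][nc] - seed_value) <= tolerance:
                    region.add((nr, nc))
                    queue.append((nr, nc))
    return region


# Hypothetical 3x3 image: a dark patch in the top-left corner
image = [
    [10, 12, 90],
    [11, 13, 95],
    [80, 85, 92],
]
dark_region = region_grow(image, seed=(0, 0), tolerance=5)
print(sorted(dark_region))
```

Comparing against the seed intensity keeps the example short; comparing against the running mean of the region is a common alternative.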
Discontinuous local features are used to detect edges, which define the boundaries of objects. They work well when the image has few edges and good contrast but are inefficient when the objects are too small. Homogeneous clustering is another method that divides pixels into clusters. It is best suited for small image datasets but may not work well if the clusters are irregular. Some methods use the histogram to segment objects. In other techniques, pixels are grouped according to common characteristics, such as color intensity or shape. These methods are not limited to color and may use gradient magnitude to classify objects. Some of these algorithms also use local minima as a segmentation boundary. Moreover, they are based on image preprocessing techniques, and many of them use parallel edge detection. There are three main image segmentation algorithms: spatial domain differential operator, affine transform, and inverse-convolution.

A popular implementation of image segmentation is edge-based. It focuses on the edges of different objects in an image, making it easier to find features of these objects. Since edges contain a large amount of information, this technique reduces the amount of image data to be analyzed. This method also identifies edges with greater accuracy. The results of both of these methods are highly comparable, although the latter is the more complex approach.

Context navigation

Current navigation systems use multi-sensor data to improve localization accuracy. Context navigation will enhance the accuracy of location estimates by anticipating the degradation of sensor signals. While context detection is the future of navigation, it is not yet widely adopted in the automotive industry. Most vision-based context indicators deal with place recognition and image segmentation; only a few are dedicated to context-aware navigation.
For example, a vehicle in motion can provide information about its surroundings, such as signal quality. However, this information is not widely used in general navigation. Only a few works have focused on context-aware multi-sensor fusion. In addition to addressing these challenges, future research should identify and analyze the best algorithm for a particular situation. To detect environmental contexts, multi-sensor solutions are needed. GNSS-based solutions can only detect the context of one area, and the underlying data is not reliable enough to extract every context of interest. Other data types, such as vision-based context indicators, are needed for

Vision Based Inspection

Table of contents: Introduction; Evolution of Vision-Based Inspection; Benefits of Vision-Based Inspection in Manufacturing; Applications of Vision-Based Inspection in Manufacturing; Challenges and Considerations; Overcoming Resistance to Automation; The Future of Manufacturing and Vision-Based Inspection; Conclusion

Introduction

Quality control is essential in the fast-paced manufacturing industry for maintaining product uniformity and customer satisfaction. In the past, human inspectors were used to find flaws and guarantee product integrity. With the development of modern technology, however, vision-based inspection systems have become a game-changer in the manufacturing sector. These systems are revolutionising quality control by improving accuracy, efficiency, and productivity using artificial intelligence (AI) and machine vision. This article examines the future of manufacturing, focusing on the importance of enterprises adopting automation.

Evolution of Vision-Based Inspection

Improvements in processing power, AI algorithms, and high-resolution cameras have greatly influenced the development of vision-based inspection. These systems were first restricted to basic operations like barcode reading and presence detection.

Benefits of Vision-Based Inspection in Manufacturing

KBE systems depend heavily on artificial intelligence, especially machine learning, which enables them to learn from data, spot patterns, and make wise decisions. Here are a few ways that AI and machine learning, through KBE, are revolutionising engineering design:

Human inspectors are prone to mistakes and fatigue, resulting in inconsistent fault identification and inconsistent upholding of quality standards. Vision-based inspection technologies, by contrast, provide high accuracy and consistency in spotting even the smallest discrepancies. These systems are trained to identify particular patterns, characteristics, or flaws, ensuring the inspection process is impartial and trustworthy.
Automation using vision-based inspection eliminates the time-consuming and repetitive tasks of human inspectors. The devices can quickly process large volumes of products, and inspection findings can be obtained instantaneously. The increased speed and efficiency result in higher productivity, shortened lead times, and eventually cost savings for manufacturers.

By requiring less labour, vision-based inspection solutions reduce the expenses associated with manual inspections. Additionally, by enabling early defect identification, they make it possible for producers to find and fix problems at an earlier production stage. These methods reduce scrap, rework, and customer returns, which cuts waste and improves overall operating efficiency.

Applications of Vision-Based Inspection in Manufacturing

Utilise the AI capabilities of Knowledge-Based Engineering (KBE) to accelerate your engineering design process. Bid adieu to manual labour and welcome greater productivity, exactitude, cost savings, and a spurt of invention. Let’s explore the advantages that AI offers KBE:

AI systems can precisely analyse massive amounts of data, which lowers the likelihood of human error. This reduces the possibility of expensive design faults and results in more detailed designs.

Vision-based inspection systems can validate proper component alignment and positioning in intricate manufacturing lines. To ensure exact assembly and lower the possibility of defective or out-of-place items, they can compare acquired photos against predetermined templates. Early detection of faults allows producers to avoid problems later on and enhance overall product quality.

Alphanumeric characters like serial numbers, labels, or codes can be read and verified using vision-based inspection systems with OCR capabilities. This technology makes effective traceability possible throughout the supply chain and industrial processes.
OCR-based inspections improve regulatory compliance, help combat counterfeiting, and ease inventory management.

Challenges and Considerations

Although vision-based inspection systems have several benefits for production, they can be difficult to implement. To achieve successful integration and ideal results, the following elements must be addressed.

Careful integration with the current manufacturing processes is necessary before using vision-based inspection systems. Compatibility with communication protocols, software, and hardware must be considered to ensure smooth operation. Manufacturers must choose solutions that are simple to incorporate into their current infrastructure and carefully plan the deployment process.

Vision-based inspection systems generate massive volumes of data from photos and videos. This data must be managed and analysed effectively for meaningful insights to be obtained and production processes optimised. Manufacturers should invest in reliable data management systems and use data analytics technologies to extract useful information from inspection data.

Vision-based inspection systems need frequent maintenance and training to operate at their best. A wide variety of product samples and defect types must be used to train the AI algorithms to identify and classify defects accurately. Additionally, producers must set up maintenance procedures to guarantee the systems’ dependability and longevity.

Overcoming Resistance to Automation

Although there is no denying the advantages of vision-based inspection systems, some manufacturers could be reluctant to adopt automation due to worries about job loss and up-front expenditures. It is crucial to understand that automation does not always imply the replacement of human labour.
Instead, it enables workers to concentrate on higher-value duties like analysing inspection data, streamlining processes, and enhancing quality. Furthermore, long-term cost savings and increased productivity can outweigh the initial investment in vision-based inspection equipment. When weighing the deployment of these technologies, manufacturers should consider the return on investment (ROI) and potential competitive advantages.

The Future of Manufacturing and Vision-Based Inspection

Automation is the key to the success of manufacturing in the future, and vision-based inspection is leading this change. These systems will grow more powerful, precise, and adaptable as technology develops. The effectiveness and capabilities of vision-based inspection in manufacturing will be further improved by integration with other developing technologies, including robotics, the Internet of Things (IoT), and augmented reality.

Vision-based inspection technologies will help maintain product quality and reduce environmental impact as the industry prioritises sustainability and waste reduction. By identifying problems early in production, manufacturers can reduce waste and help create a more sustainable manufacturing ecosystem.

Conclusion

The manufacturing sector is changing because vision-based inspection systems offer precise, effective, and reasonably priced quality control solutions. By embracing automation, manufacturers can obtain greater precision, increased efficiency, and lower costs. By utilising AI and machine vision technologies, businesses can streamline processes, enhance product quality, and gain a competitive edge in the global market.

Ready to revolutionise your manufacturing processes with vision-based inspection? Contact Prescient today to unlock the power of automation, accuracy, and efficiency in quality control.

Neutral 3D CAD File Formats

Table of contents: Features of 3D CAD file formats; Different 3D CAD File Formats

Various CAD file formats have been developed in recent years. If you’re seeking the most suitable 3D model file, you need to know about the different file formats available. Each format has benefits and drawbacks, and you should know which one is right for your needs.

Upon completion, every CAD design or model is saved in a particular file format. A 3D file format stores information about 3D models as plain text or binary data. A 3D format encodes several characteristics of a model. However, not every 3D CAD format stores all of them.

Each software package comes with its own 3D file formats. The variety in CAD file formats has many causes, such as cost and features. For two software packages to work together, they must support interchange and interoperability. Before we talk about that, let’s take a quick walk through the evolution of CAD file formats.

Features of 3D CAD file formats

Everything comes in varieties, and CAD modeling is no exception. As the technology evolved, CAD modeling came up in different styles and formats. Three-dimensional model files are often stored in one of two kinds of format: native (for example, .dwg) or neutral (for example, .dxf). Native files contain the most detail, while neutral files skip elements and contain only a basic representation. While there are many neutral CAD file formats, not all of them are created equal. Some file formats have exceptional capabilities or features, while others are less compatible. Some formats are standardized, while others are proprietary.

Different file types come with specific properties and allow CAD models to be viewed in different ways. Some CAD files are limited to 2D viewing, for showing a design to the end customer.
But since we are talking about 3D CAD models, the following are the main features of 3D CAD file formats:

Native or Neutral

The two main types of file formats are native and neutral.

Native 3D CAD file formats: All CAD design software uses a proprietary file type. Native formats are those that are native to a specific CAD system. Generally, such files can only be opened with the same software they were created with; they will not open in a completely different design program. Proprietary files can be used for tasks within a company. By using native file formats, designers can ensure that their chosen components are compatible with their PLM software. Native files contain the most detailed representation of 3D CAD models. Some notable native 3D CAD file formats are those of AutoCAD, Parasolid, Inventor, NX, CATIA, and Solidworks.

Neutral 3D CAD file formats: Neutral 3D CAD file formats are those that are not proprietary to a specific program or vendor. These file formats are useful for transferring 3D data from one program to another but do not contain as much fine detail as native CAD files. Neutral files skip the elements that the receiving software does not need to recreate the design and include only a basic representation. Since they are interoperable, they can be viewed in many programs. They are helpful for engineers and designers who need to communicate with other departments and share a single design. However, not every neutral format is equally well supported by all 3D CAD software, and many users do not have the time or budget to learn each one. Neutral data come in handy if the document is distributed to end-users who don’t use CAD software.

Precise or tessellated

CAD designs are represented in two ways, namely, precise or tessellated. The difference arises because the product as viewed on screen while designing is only an approximation of the actual product geometry.
It is particularly noticeable in the lines and edges that form the product shape. This is what distinguishes precise drawings from tessellated drawings. To create a product, CAD software uses precise lines and angles to drive complex manufacturing processes. Such specific instructions must be included in a file format if the actual drawing is to be edited or its design changed. When a CAD drawing is displayed for visual purposes only, the lines and edges are tessellated.

Type of assembly

Multi-part designs present a complicated situation when choosing a file format. Depending on the type of file format, a multi-part product design may be limited to one single file for the whole assembly. Alternatively, designers may opt for separate files for each component. It is essential to know how a particular software package will display a multi-part product, or whether it will display one at all.

Parts Listings

CAD designs generally come with a list of parts. Different formats have different ways of presenting this parts list. Some file formats have a Bill of Materials (BOM), while others, called Flat Lists, show all parts one by one. A bill of materials showcases a single part and all its positions in a drawing. The latter is better for presenting all parts of an assembly.

Different 3D CAD File Formats

If you plan on using the same tool for several different projects, make sure the CAD tool you’re using is compatible with the standardized formats. Otherwise, you could run into problems. Popular CAD file formats include DXF, DWF, STEP, and Solidworks. Neutral file formats are widely used nowadays as intermediate formats for converting between two proprietary formats, to counter interoperability problems. Two well-known examples of neutral formats are STL (with a .STL extension) and COLLADA (with a .DAE extension). They are used to share models across CAD software.
Neutral 3D CAD File Formats

STL: STL, which stands for stereolithography, is the universal format for pure 3D information. It is used in 3D printers and is popular with CAM software. STL describes only the surface geometry of a 3D object, without any representation of color, texture, or other common CAD model attributes. It is a common 3D printer file format. To optimize the file for 3D printing, you can use export settings and the Polygon Reduction tool. A stereolithography file format created by 3D Systems stores
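To illustrate what "only the surface geometry" means in practice, here is a minimal sketch that writes a tessellated shape in the ASCII variant of STL, where each triangle is a `facet` with a normal vector and three vertices. The helper names `facet_normal` and `to_ascii_stl` and the tetrahedron data are illustrative assumptions; real CAD exporters also offer a binary STL variant and handle units and tolerances, which this sketch ignores.

```python
def facet_normal(v0, v1, v2):
    """Unit normal of a triangle from the cross product of two edges
    (counter-clockwise vertex order gives the outward direction)."""
    ux, uy, uz = (v1[i] - v0[i] for i in range(3))
    wx, wy, wz = (v2[i] - v0[i] for i in range(3))
    nx, ny, nz = uy * wz - uz * wy, uz * wx - ux * wz, ux * wy - uy * wx
    length = (nx * nx + ny * ny + nz * nz) ** 0.5
    if length == 0:
        return (0.0, 0.0, 0.0)  # degenerate triangle
    return (nx / length, ny / length, nz / length)


def to_ascii_stl(name, triangles):
    """Serialize a list of triangles (each a tuple of three xyz vertices)
    as ASCII STL text. Only geometry is stored: no color or texture."""
    lines = [f"solid {name}"]
    for tri in triangles:
        n = facet_normal(*tri)
        lines.append(f"  facet normal {n[0]:e} {n[1]:e} {n[2]:e}")
        lines.append("    outer loop")
        for v in tri:
            lines.append(f"      vertex {v[0]:e} {v[1]:e} {v[2]:e}")
        lines.append("    endloop")
        lines.append("  endfacet")
    lines.append(f"endsolid {name}")
    return "\n".join(lines) + "\n"


# Hypothetical model: a tetrahedron described by four triangular faces
o, a, b, c = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)
tetra = [(o, b, a), (o, a, c), (o, c, b), (a, b, c)]
stl_text = to_ascii_stl("tetra", tetra)
print(stl_text)
```

Every curved or flat surface has to be approximated by triangles like these, which is why STL is a tessellated rather than a precise representation.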
